The Dental Enhancement Program (DEP) was designed to strengthen the preparedness of graduating dentistry students for the Board Licensure Examination for Dentists (BLED) by enhancing their performance in both theoretical and practical components and boosting their exam-related self-confidence. This study aimed to evaluate the effectiveness of the DEP in improving student outcomes. Specifically, it assessed students' performance before and after the program and compared pre-test and post-test results across the theoretical and practical phases of the examination.

Methods

A quasi-experimental pre-test/post-test research design was employed. The participants were all 79 graduating dentistry students who took part in the DEP. Data were derived from the students' pre-test and post-test scores in both the theoretical and practical phases of the examination. To determine the effectiveness of the DEP, pre-test and post-test scores were compared using paired-sample t-tests.

Results

Graduating dental students demonstrated statistically significant improvement following the Dental Enhancement Program (DEP). In the first-semester cohort, theoretical scores increased from 1.52 ± 0.45 to 3.74 ± 0.39 (t = −28.04, p < 0.05), while practical scores rose from 2.15 ± 0.25 to 3.18 ± 0.17 (t = −22.67, p < 0.05). Similarly, in the second-semester cohort, theoretical performance improved from 1.03 ± 0.07 to 2.76 ± 0.44 (t = −24.17, p < 0.05), and practical scores increased from 2.26 ± 0.25 to 3.50 ± 0.19 (t = −23.85, p < 0.05). These results indicate significant enhancement in both theoretical and practical competencies following the intervention.

Conclusion

The DEP effectively enhanced students' academic and clinical performance, supporting its value in preparing dental graduates for professional examinations.

In the Dentistry program, students must successfully complete both preclinical and clinical requirements to qualify for graduation. Preclinical training includes foundational coursework in general education, biomedical sciences, and core dental subjects. During this phase, students develop theoretical knowledge and manual dexterity through simulated exercises on mannequins. Clinical training, by contrast, involves the application of these skills in real-world scenarios, including simulations on live patients and the completion of comprehensive examinations that assess students' knowledge, clinical competency, and preparedness for the dental licensure examination. These comprehensive examinations, which integrate content across multiple dental disciplines, are critical in evaluating a student's readiness for professional practice. Graduating students are typically expected to complete a series of theoretical and practical assessments, including simulated versions of the Board Licensure Examination for Dentists (BLED). Despite the integration of review sessions, many students continue to encounter difficulties in passing these comprehensive exams, as evidenced by the consistently higher number of licensure examinees relative to graduates.

In response to these challenges, the Dental Enhancement Program (DEP) was introduced as a strategic intervention for graduating students. The DEP is designed to strengthen theoretical knowledge, refine clinical skills, and boost students' confidence in undertaking the BLED. This initiative is in line with the Commission on Higher Education (CHED) Memorandum Order No. 03, Series of 2018, which emphasizes the importance of maintaining high academic standards and regularly evaluating dental curricula to ensure alignment with evolving public health needs and advancements in dental education.1

The effectiveness of the DEP was evaluated using a program theory-based framework. This evaluation approach determines whether the program's design is aligned with its intended outcomes and examines how specific components influence these outcomes under defined conditions.2,3 More broadly, educational program evaluation entails the systematic collection and analysis of data to monitor program quality, assess effectiveness, and identify areas for improvement.4,5 The DEP is anchored in the Theory of Involvement, which posits that student success is significantly influenced by both the quantity and quality of time spent engaging in academic tasks and interacting with peers and faculty. Central to this theory is the role of mentorship, particularly individualized mentoring, which facilitates student engagement, monitors academic progress, and fosters persistence, all of which are critical for success in high-stakes licensure examinations.6

Mentorship programs not only improve academic performance but also contribute to students' professional identity formation and career readiness. Within the DEP, mentorship was implemented as a structured guidance process focusing on professional development, confidence building, and clinical decision-making support. This often involved one-on-one or small-group discussions with experienced faculty members, allowing for tailored feedback and personalized strategies for improvement. Another integral component of the DEP is tutoring, conceptualized as a structured and supportive relationship between a more experienced individual and a less experienced learner. Tutoring targeted the direct reinforcement of academic knowledge and technical skills through structured review sessions, problem-solving exercises, and targeted feedback on performance. The tutoring process includes coaching, assessment, facilitation, guidance, role modeling, and psychosocial support, fostering student empowerment, trust, and personal growth.7 This model aligns with Vygotsky's Theory of the Zone of Proximal Development, which emphasizes that learning is most effective when students are supported by more capable peers or instructors, enabling them to reach higher levels of cognitive development.8 While these components occasionally overlapped in practice, particularly when mentors reinforced academic topics during mentoring sessions, they were designed as complementary but distinct elements of the DEP. Together, these approaches create a dual support system: mentorship addresses the affective and professional growth dimensions, while tutoring reinforces cognitive mastery and technical competence.

The DEP integrates mentoring, tutoring, and structured review sessions to prepare students for the theoretical and practical components of the dental licensure examination. The literature consistently highlights the positive impact of such academic support programs on student outcomes. Clinical exposure, specialty training, and structured preparatory programs have been found to improve students' confidence, competence, and readiness for professional practice.9,10 Furthermore, mentoring and tutoring have been linked to improved academic performance, increased graduation rates, and enhanced employability.11,12 Effective programs are those that are clearly goal-oriented and subject to continuous, evidence-based evaluation.12 Mentorship, in particular, is increasingly recognized as a key element of medical and dental education, supporting students' professional development and personal growth.13,14 These theoretical and empirical foundations underpin the present study, which evaluates the impact of the DEP on the academic performance of graduating dental students. The study aims to assess the DEP's effectiveness in preparing students for the licensure examination by addressing the following research questions: (1) What is the level of performance of graduating dentistry students in the pre-test for both the theoretical and practical phases of the examination? (2) What is the level of performance in the post-test for both phases? (3) Is there a statistically significant difference between the pre-test and post-test performance levels in the theoretical and practical phases of the examination?

Materials and methods

Study design and participants

A quasi-experimental research design was employed to assess the effect of the Dental Enhancement Program (DEP) on the academic and clinical performance of graduating students from a dental school. The study included 79 final-year dental students aged 22–26 years, all of whom had completed the required pre-clinical and clinical coursework. The sample comprised 37 students (15 males, 22 females) from the first-semester cohort and 42 students (14 males, 28 females) from the second-semester cohort. Cohort allocation was determined naturally by enrollment status, and no additional randomization was undertaken.

Ethics approval

Ethical approval for the study was granted by the University Research Ethics Committee (REC Code: 2022–017). Written informed consent was obtained from all participants prior to their inclusion in the study. The consent form provided comprehensive information regarding the study's objectives, procedures, and potential benefits. Although the DEP is part of the clinical dentistry curriculum, participation in the research component was entirely voluntary. Only students who provided informed consent were enrolled in the study.

Data collection

Data were collected through both theoretical (written) and practical examinations, replicating the procedures used in the BLED.

Theoretical examination

The written examination was administered over three consecutive days and covered nine major subject areas:

1. General and Oral Anatomy and Physiology
2. General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology
3. Restorative Dentistry, Public Health, and Community Dentistry
4. Anesthesiology and Pharmacology
5. Prosthetic Dentistry and Dental Materials
6. Roentgenology, Oral Diagnosis, and Oral Surgery
7. Pediatric Dentistry and Orthodontics
8. Periodontics and Endodontics
9. Dental Jurisprudence, Ethics, and Practice Management

Each day, examinees completed tests in three subjects. Each subject was allocated a two-hour examination consisting of 100 multiple-choice questions. Responses were recorded on standardized answer sheets and submitted to the review coordinator upon completion. Each subject had a perfect score of 100, and performance levels were interpreted on a five-point Likert scale ranging from 1.00 to 5.00, aligned with the 75% passing threshold. A score between 90 and 100 corresponds to a Likert range of 4.25 to 5.00, classified as Excellent. Scores from 85 to 89 fall within a Likert range of 3.40 to 4.24, indicating Very Satisfactory performance. Scores between 80 and 84 correspond to a Likert range of 2.60 to 3.39 and are rated as Satisfactory. Scores from 75 to 79 map to a Likert range of 1.80 to 2.59, representing a Fair performance level. Finally, scores from 0 to 74 correspond to a Likert range of 1.00 to 1.79 and are categorized as Poor. This mapping ensures a consistent interpretation of performance levels for both descriptive and statistical analyses.
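The score bands described above can be expressed as a simple lookup. The following is a minimal sketch, not the scoring procedure used in the study: the function name `theory_level` and the table `THEORY_BANDS` are hypothetical, and the sketch assumes whole-number raw scores, since each subject consisted of 100 multiple-choice questions.

```python
# Bands taken from the text: (lowest raw score in band, Likert range, label).
# THEORY_BANDS and theory_level are illustrative names, not from the study.
THEORY_BANDS = [
    (90, (4.25, 5.00), "Excellent"),
    (85, (3.40, 4.24), "Very Satisfactory"),
    (80, (2.60, 3.39), "Satisfactory"),
    (75, (1.80, 2.59), "Fair"),
    (0, (1.00, 1.79), "Poor"),
]


def theory_level(raw_score):
    """Return (Likert range, label) for a raw subject score out of 100."""
    if not 0 <= raw_score <= 100:
        raise ValueError("raw score must be between 0 and 100")
    # Bands are listed from highest to lowest, so the first match wins.
    for lower_bound, likert_range, label in THEORY_BANDS:
        if raw_score >= lower_bound:
            return likert_range, label


if __name__ == "__main__":
    for score in (92, 85, 75, 74):
        print(score, theory_level(score))
```

Under this mapping, a score of 75 (the passing threshold) is the lowest score rated Fair, and any score of 74 or below is rated Poor.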

Practical examination

The practical component included four specific exercises:

1. Restorative Tooth Preparations (Class I and Class II): A two-hour assessment requiring cavity preparation on designated teeth using typodonts.
2. Fixed Partial Denture Preparation (PFM): A two-hour task involving the preparation of specific abutment teeth for porcelain-fused-to-metal fixed partial dentures.
3. Removable Partial Denture Design and Work Authorization: A two-hour activity in which students designed a removable partial denture and completed a wax pattern on a pre-fabricated cast. A corresponding work authorization form was also completed.
4. Complete Denture Fabrication using a Semi-Adjustable Articulator (SAA): An extended eight-hour assessment conducted from 7:00 AM to 3:00 PM, followed by mentoring sessions and live demonstrations in the presence of the patients.

After each assessment, typodonts, phantom jaws, and other materials were collected by the review coordinator. For the complete denture exercise, performance evaluations and grading were completed before score sheets were returned to the students.

A validated scoring rubric, adapted from the Professional Regulation Commission (PRC), was employed to assess the practical examinations. The scores were recorded on a score sheet detailing points allocated to each evaluation criterion. Examiners, who also served as mentors, documented total scores for each student, which were then converted into performance levels using area-specific scales. Each practical exercise had a defined perfect score and corresponding rating scale:

Class I and II Cavity Preparation (Perfect score: 25) was rated as Very Good (19–25), Good (13–18), Fair (7–12), or Poor (1–6).

Fixed Partial Denture Preparation (Perfect score: 135) used the ranges Very Good (103–135), Good (70–102), Fair (37–69), and Poor (1–36).

Removable Partial Denture Design and Work Authorization (Perfect score: 75) classified scores as Very Good (65–75), Good (46–64), Fair (27–45), and Poor (1–26).

Complete Denture Fabrication (Perfect score: 60) was similarly rated Very Good (46–60), Good (31–45), Fair (16–30), and Poor (1–15).

The overall level of performance for the practical examination was assessed using a four-point Likert scale based on the total possible score of 295. This scale translates the students' raw scores into performance categories as follows: Scores ranging from 221 to 295 correspond to a Likert scale value between 3.26 and 4.00 and are classified as Very Good performance. This indicates a high level of competence across all practical areas. Scores between 147 and 220 fall within the Likert range of 2.57 to 3.25 and are interpreted as Good, reflecting solid performance with room for improvement. Scores from 73 to 146 correspond to a Likert value of 1.76 to 2.50, representing a Fair level of performance, suggesting basic competency but with significant gaps that need to be addressed. Scores between 1 and 72 fall within the Likert range of 1.00 to 1.75, indicating a Poor performance level, which highlights major deficiencies in practical skills requiring substantial improvement. This scoring system allows for standardized interpretation of total practical exam scores by mapping raw scores to descriptive performance levels through the Likert scale framework.
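The total of 295 is the sum of the four exercise maxima (25 + 135 + 75 + 60). The raw-score bands can be sketched as follows; `PERFECT_SCORES`, `PRACTICAL_BANDS`, and `practical_level` are hypothetical names introduced for illustration, and the sketch classifies by raw total only, without reproducing the Likert conversion.

```python
# Perfect scores per exercise, as stated in the text.
PERFECT_SCORES = {
    "Class I and II Cavity Preparation": 25,
    "Fixed Partial Denture Preparation": 135,
    "Removable Partial Denture Design and Work Authorization": 75,
    "Complete Denture Fabrication": 60,
}

# (lowest raw total in band, label), listed from highest to lowest band.
PRACTICAL_BANDS = [
    (221, "Very Good"),
    (147, "Good"),
    (73, "Fair"),
    (1, "Poor"),
]


def practical_level(total_score):
    """Classify a total practical raw score using the bands in the text."""
    max_total = sum(PERFECT_SCORES.values())  # 25 + 135 + 75 + 60 = 295
    # The text defines no band below 1, so 0 is rejected (an assumption).
    if not 1 <= total_score <= max_total:
        raise ValueError(f"total score must be between 1 and {max_total}")
    for lower_bound, label in PRACTICAL_BANDS:
        if total_score >= lower_bound:
            return label


if __name__ == "__main__":
    for total in (295, 220, 146, 72):
        print(total, practical_level(total))
```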

Intervention program

The DEP was designed collaboratively by faculty members from multiple dental disciplines, following three guiding principles: (1) alignment with the competencies assessed in the BLED, (2) incorporation of historical performance trends and common deficiencies identified in previous licensure examinations, and (3) adaptability to students' diverse academic and clinical backgrounds. Pre-test results were analyzed to identify both individual and cohort-level weaknesses, which informed the selection of lecture topics, practical exercises, and mentoring strategies. This approach ensured that the program addressed known licensure exam challenges while remaining responsive to the specific needs of the participating students.

The DEP was implemented over a three-month (12-week) period and structured into three distinct phases:

Phase 1: Pre-Test Examination - Theoretical and practical pre-tests, modeled after the BLED format, were conducted over five days (three days for theoretical exams, two days for practical assessments) to establish baseline strengths, weaknesses, and areas for improvement.

Phase 2: Intervention - Students engaged in approximately 6–8 h per week of structured activities, totaling 80–96 h. Time allocation was divided into 50% theoretical review sessions, 35% practical training, and 15% individual mentoring/tutoring. Theoretical sessions included four-hour in-depth lectures and two hours of discussion and rationalization of board-style questions, delivered synchronously online. Practical sessions featured two-hour lectures on keyboard exercises and tutorials led by four instructors. One-on-one mentoring targeted professional development, confidence building, and clinical decision-making, while tutoring reinforced academic knowledge and technical skills through problem-solving and performance feedback.

Phase 3: Post-Test Examination - Theoretical and practical post-tests were administered to evaluate learning gains and performance improvements following the intervention.

Statistical analysis

Data were analyzed using the Statistical Package for the Social Sciences software version 28 (IBM SPSS Statistics for Windows, Version 28.0, IBM Corp., Armonk, NY, USA). Pre-test and post-test scores were compared using paired-sample t-tests. Prior to analysis, the normality of score distributions was assessed using the Shapiro–Wilk test, confirming that the assumption of normality was met and supporting the use of parametric analysis.
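For readers unfamiliar with the paired-sample t-test, the statistic is the mean of the per-student differences divided by its standard error. The sketch below uses only the Python standard library rather than SPSS, and the scores are made-up illustrative values, not study data. It assumes differences are taken as pre-test minus post-test, which would be consistent with the negative t values reported in this study, although the direction of subtraction is not stated in the text. The function name `paired_t_statistic` is hypothetical.

```python
import math
import statistics


def paired_t_statistic(pre, post):
    """Return (t, degrees of freedom) for paired pre/post scores.

    Differences are computed as pre - post (an assumption), so an
    improvement from pre-test to post-test yields a negative t.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


if __name__ == "__main__":
    # Hypothetical Likert-scale scores for three students (not study data).
    pre_scores = [1, 2, 3]
    post_scores = [2, 4, 5]
    t, df = paired_t_statistic(pre_scores, post_scores)
    print(f"t = {t:.2f}, df = {df}")
```

The p-value would then be read from the t distribution with n − 1 degrees of freedom, a step that statistical packages perform automatically.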

Results

Performance of graduating dentistry students in the pre-test of theoretical and practical examination phases (First semester)

As presented in Table 1, the mean performance score of graduating dentistry students in the theoretical pre-test was 1.52, indicating an overall poor level of achievement. Of the nine subject areas, 66.67% were rated poor and 33.33% fair. The highest performance was noted in General and Oral Anatomy and Physiology and in Prosthetic Dentistry and Dental Materials, followed by Endodontics and Periodontics. Despite these comparatively stronger areas, the overall poor performance may be attributed to a greater emphasis placed by students on practical components over theoretical preparation. Furthermore, reliance on outdated review materials, rather than current resources such as updated textbooks and online reviewers, likely contributed to knowledge gaps. The lowest scores were recorded in General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology, and in Dental Jurisprudence, Ethics, and Practice Management, subjects typically covered in the early preclinical years, suggesting issues with long-term content retention.

Table 1. Pre-test and post-test performance of graduating dentistry students during the first semester.

| Subject Area / Exercise | Pre-test Mean ± SD | Pre-test Interpretation | Post-test Mean ± SD | Post-test Interpretation |
|---|---|---|---|---|
| THEORETICAL PHASE | | | | |
| Gen. & Oral Anatomy & Physiology | 2.22 ± 1.32 | Fair | 4.35 ± 1.09 | Excellent |
| Gen. & Oral Pathology & Microbiology | 1.00 ± 0.00 | Poor | 4.62 ± 0.86 | Excellent |
| Restorative Dentistry & Community Dentistry | 1.38 ± 0.95 | Poor | 4.68 ± 0.75 | Excellent |
| Prosthetic Dentistry & Dental Materials | 2.22 ± 1.49 | Fair | 3.97 ± 1.04 | Very Satisfactory |
| Roentgenology, Oral Diagnosis & Surgery | 1.32 ± 0.71 | Poor | 2.73 ± 1.02 | Satisfactory |
| Anesthesiology & Pharmacology | 1.24 ± 0.64 | Poor | 4.22 ± 0.98 | Excellent |
| Ortho-Pedo | 1.08 ± 0.28 | Poor | 3.41 ± 1.14 | Very Satisfactory |
| Endo-Perio | 2.19 ± 1.35 | Fair | 3.38 ± 1.30 | Satisfactory |
| Dental Juris, Ethics & Practice Management | 1.00 ± 0.00 | Poor | 2.27 ± 1.19 | Fair |
| Sub-mean | 1.52 ± 0.45 | Poor | 3.74 ± 0.39 | Very Satisfactory |
| PRACTICAL PHASE | | | | |
| Class I and Class II | 2.38 ± 0.49 | Fair | 3.16 ± 0.37 | Good |
| FPD | 1.78 ± 0.42 | Fair | 3.00 ± 0.00 | Good |
| RPD | 2.05 ± 0.57 | Fair | 2.57 ± 0.50 | Good |
| CD | 2.38 ± 0.55 | Fair | 4.00 ± 0.00 | Very Good |
| Sub-mean | 2.15 ± 0.25 | Fair | 3.18 ± 0.17 | Good |

In the practical examination pre-test, students attained a mean score of 2.15, indicating a fair level of performance. As shown in Table 1, student outcomes across all practical components, Class I and II cavity preparation, Fixed Partial Denture preparation, Removable Partial Denture, and Complete Denture, fell within the fair range. These results highlight the need for a focused intervention to strengthen clinical competencies and reinforce practical skills through the DEP.

Performance of graduating dentistry students in the post-test of theoretical and practical examination phases (First semester)

Following the implementation of the DEP, students demonstrated substantial improvement in their academic and clinical performance. As detailed in Table 1, the mean post-test score for the theoretical phase was 3.74, corresponding to a very satisfactory performance level. Of the nine subject areas, 44.44% were rated excellent, 22.22% very satisfactory, 22.22% satisfactory, and 11.11% fair. Notably, no subject area fell within the poor range. The highest scores were observed in Restorative Dentistry, Public Health and Community Dentistry; General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology; General and Oral Anatomy and Physiology; and Anesthesiology and Pharmacology. The lowest performance, rated fair, was in Dental Jurisprudence, Ethics, and Practice Management.
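The percentage breakdown above matches the share of the nine theoretical subject areas in Table 1 at each rating level (for example, four of nine rated Excellent gives 44.44%). A minimal sketch of that tally, assuming this reading of the percentages; `rating_shares` is a hypothetical helper, and the ratings are copied from the post-test interpretation column of Table 1.

```python
from collections import Counter

# Post-test interpretations for the nine theoretical subject areas (Table 1).
POST_TEST_RATINGS = [
    "Excellent",          # Gen. & Oral Anatomy & Physiology
    "Excellent",          # Gen. & Oral Pathology & Microbiology
    "Excellent",          # Restorative Dentistry & Community Dentistry
    "Very Satisfactory",  # Prosthetic Dentistry & Dental Materials
    "Satisfactory",       # Roentgenology, Oral Diagnosis & Surgery
    "Excellent",          # Anesthesiology & Pharmacology
    "Very Satisfactory",  # Ortho-Pedo
    "Satisfactory",       # Endo-Perio
    "Fair",               # Dental Juris, Ethics & Practice Management
]


def rating_shares(ratings):
    """Return the percentage of subject areas at each rating level."""
    counts = Counter(ratings)
    return {label: round(100 * n / len(ratings), 2) for label, n in counts.items()}


if __name__ == "__main__":
    for label, share in rating_shares(POST_TEST_RATINGS).items():
        print(f"{label}: {share}%")
```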

For the practical examination post-test, the mean score increased to 3.18, reflecting a good level of performance. As seen in Table 1, students demonstrated good outcomes in Class I and II cavity preparation, Fixed Partial Denture preparation, and Removable Partial Denture, while performance in the Complete Denture component reached a very good level. These findings confirm the positive impact of the DEP in elevating both theoretical knowledge and practical proficiency among graduating dentistry students.

Comparison of pre-test and post-test performance of graduating dentistry students in theoretical and practical examinations: First semester

A significant improvement was observed in the overall performance of graduating dentistry students in the theoretical examination phase following participation in the dental enhancement program. As illustrated in Fig. 1, the mean post-test scores were markedly higher than the pre-test scores, indicating a shift from poor to very satisfactory performance levels. Notable improvements were recorded in subject areas such as Restorative Dentistry; Public Health and Community Dentistry; General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology; General and Oral Anatomy and Physiology; and Anesthesiology and Pharmacology. Conversely, the lowest post-test scores were observed in Dental Jurisprudence, Ethics, and Practice Management.

Statistical analysis using a paired samples t-test confirmed that the difference in scores was statistically significant (t = −28.04, p < 0.05). The mean score increased from 1.52 ± 0.45 in the pre-test to 3.74 ± 0.39 in the post-test, demonstrating the effectiveness of the program in enhancing theoretical knowledge and examination preparedness.

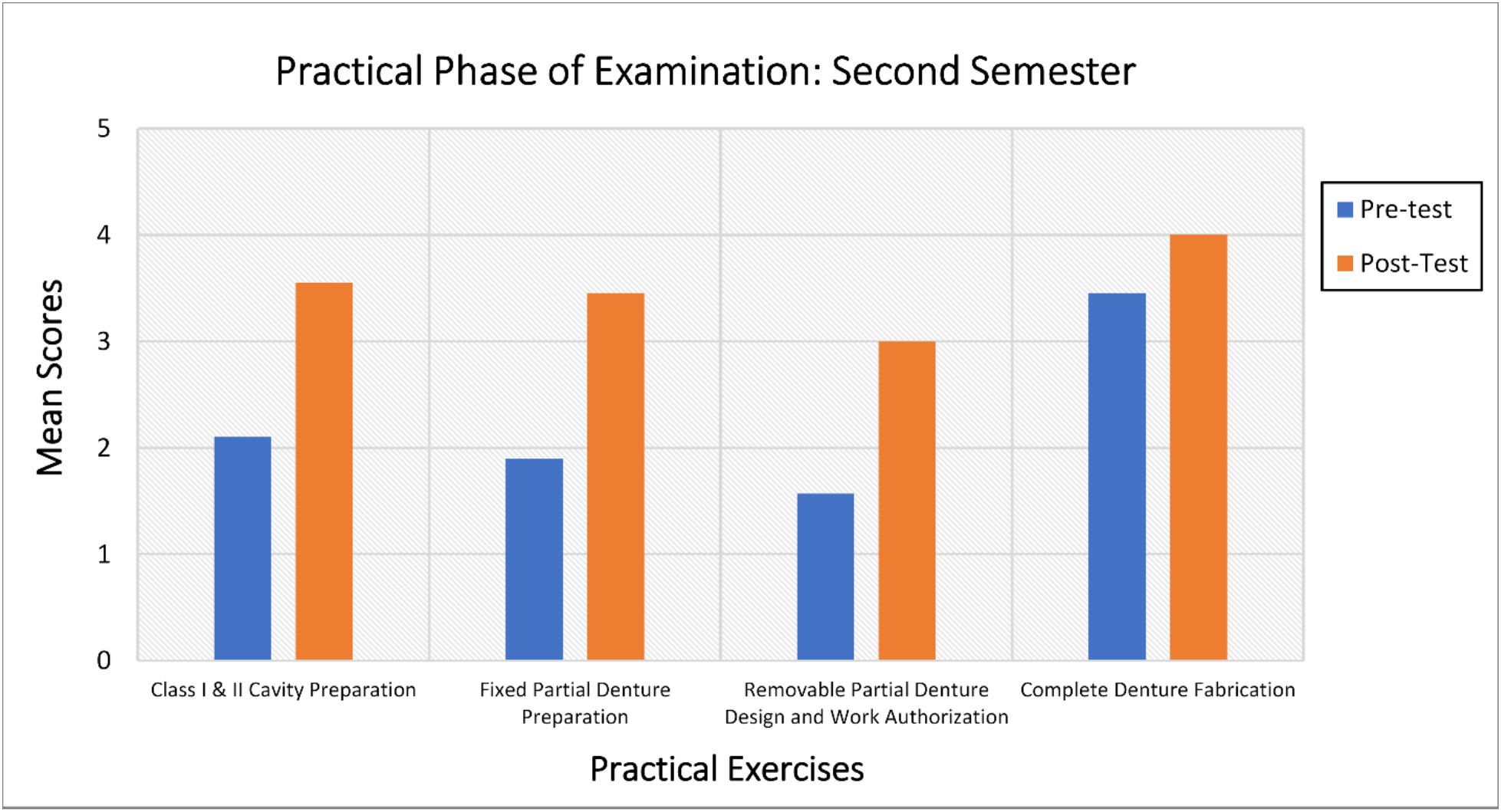

Similarly, a substantial improvement was observed in the practical examination phase, as shown in Fig. 2. Students demonstrated good performance in Class I and II cavity preparation, Fixed Partial Denture preparation, and Removable Partial Denture exercises, and achieved very good performance in the Complete Denture task. The paired samples t-test indicated a statistically significant increase in mean scores from 2.15 ± 0.25 in the pre-test to 3.18 ± 0.17 in the post-test (t = −22.67, p < 0.05).

These findings highlight the effectiveness of the DEP in improving both theoretical understanding and practical competencies, thereby supporting its continued integration into the dental curriculum as a means to better prepare students for licensure examinations.

Performance of graduating dentistry students in the pre-test of theoretical and practical examination phases (Second semester)

As presented in Table 2, the overall mean performance of graduating dentistry students in the theoretical pre-test was 1.03, indicating a poor level of achievement. Students underperformed across all subject areas, with a marginally higher score noted in Endodontics and Periodontics. This slightly higher score may be attributed to the recency with which these subjects were studied, supporting better recall and comprehension. The overall low performance can be explained by clinical instructors' observations that many students prioritized completing outstanding clinical cases over preparing for the theoretical component of the examination. Furthermore, the review coordinator's report highlighted a common trend: students focused more on practical assessments at the expense of theoretical review.

Table 2. Pre-test and post-test performance of graduating dentistry students during the second semester.

| Subject Area / Exercise | Pre-test Mean ± SD | Pre-test Interpretation | Post-test Mean ± SD | Post-test Interpretation |
|---|---|---|---|---|
| THEORETICAL PHASE | | | | |
| Gen. & Oral Anatomy & Physiology | 1.00 ± 0.00 | Poor | 2.69 ± 1.28 | Satisfactory |
| Gen. & Oral Pathology & Microbiology | 1.00 ± 0.00 | Poor | 4.67 ± 0.69 | Excellent |
| Restorative Dentistry & Community Dentistry | 1.00 ± 0.00 | Poor | 1.38 ± 0.73 | Poor |
| Prosthetic Dentistry & Dental Materials | 1.00 ± 0.00 | Poor | 4.19 ± 1.06 | Very Satisfactory |
| Roentgenology, Oral Diagnosis & Surgery | 1.00 ± 0.00 | Poor | 2.36 ± 1.12 | Fair |
| Anesthesiology & Pharmacology | 1.00 ± 0.00 | Poor | 1.33 ± 0.61 | Poor |
| Ortho-Pedo | 1.00 ± 0.00 | Poor | 2.79 ± 1.37 | Satisfactory |
| Endo-Perio | 1.29 ± 0.67 | Poor | 2.79 ± 1.09 | Satisfactory |
| Dental Juris, Ethics & Practice Management | 1.00 ± 0.00 | Poor | 2.69 ± 1.33 | Satisfactory |
| Sub-mean | 1.03 ± 0.07 | Poor | 2.76 ± 0.44 | Satisfactory |
| PRACTICAL PHASE | | | | |
| Class I and II | 2.10 ± 0.58 | Fair | 3.55 ± 0.50 | Very Good |
| FPD | 1.90 ± 0.43 | Fair | 3.45 ± 0.50 | Very Good |
| RPD | 1.57 ± 0.55 | Poor | 3.00 ± 0.00 | Good |
| CD | 3.45 ± 0.55 | Very Good | 4.00 ± 0.00 | Very Good |
| Sub-mean | 2.26 ± 0.25 | Fair | 3.50 ± 0.19 | Very Good |

In the practical phase pre-test, the students achieved a mean score of 2.26, interpreted as a fair level of performance. As shown in Table 2, students performed fairly in Class I and II cavity preparation and Fixed Partial Denture preparation, poorly in Removable Partial Denture, and very well in Complete Denture. These results suggest an uneven skillset and underline the necessity for targeted interventions through the DEP to raise performance standards across all clinical domains.

Performance of graduating dentistry students in the post-test of theoretical and practical examination phases (Second semester)

Following participation in the DEP, the overall mean performance of students in the theoretical post-test improved to 2.76, corresponding to a satisfactory level of achievement. The distribution of performance levels across the nine subject areas was as follows: 11.11% excellent, 11.11% very satisfactory, 44.44% satisfactory, 11.11% fair, and 22.22% poor. As detailed in Table 2, the strongest performance was observed in General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology, followed by Prosthetic Dentistry and Dental Materials. Conversely, the lowest scores were recorded in Restorative Dentistry, Public Health and Community Dentistry, and in Anesthesiology and Pharmacology.

The weak performance in these latter subjects may be attributed to the significant time gap since they were last studied, leading to knowledge attrition. Additionally, the heightened focus on clinical requirements in recent academic years may have diverted attention away from foundational theoretical concepts. These concepts, although less emphasized during clinical training, were featured prominently in the examination. While the post-test results indicate a positive impact of the DEP, the overall satisfactory rating highlights the need for further refinement of the program, specifically a more comprehensive and subject-specific review strategy to enhance theoretical competency.

In contrast, the students' performance in the practical post-test phase was markedly higher, with a mean score of 3.50, reflecting a very good level of proficiency. As shown in Table 2, students achieved very good outcomes in Class I and II cavity preparation, Fixed Partial Denture preparation, and Complete Denture, and good performance in Removable Partial Denture. These improvements underscore the effectiveness of the DEP in developing hands-on clinical competencies and preparing students for practical board examinations.

Comparison of pre-test and post-test performance of graduating dentistry students in theoretical and practical examinations: Second semester

A marked improvement was observed in the theoretical examination performance of graduating dentistry students following participation in the DEP during the second semester. As shown in Fig. 3, post-test scores were significantly higher than pre-test scores, indicating a transition from poor to satisfactory performance. The highest gains in the post-test were seen in General and Oral Pathology; General and Oral Microscopic Anatomy and Microbiology; and Prosthetic Dentistry and Dental Materials. The lowest post-test scores were recorded in Restorative Dentistry; Public Health and Community Dentistry; and Anesthesiology and Pharmacology.

Statistical analysis using a paired samples t-test confirmed the significance of this improvement (t = −24.17, p < 0.05). The mean score rose from 1.03 ± 0.07 in the pre-test to 2.76 ± 0.44 in the post-test, demonstrating the positive impact of the DEP in enhancing theoretical knowledge and exam readiness among the students.

Similarly, significant progress was also noted in the practical examination phase, as illustrated in Fig. 4. Post-test results showed very good performance in Class I and II cavity preparation, Fixed Partial Denture preparation, and Complete Denture procedures, while performance in Removable Partial Denture was rated as good. The paired samples t-test revealed a statistically significant increase in mean scores from 2.26 ± 0.25 in the pre-test to 3.50 ± 0.19 in the post-test (t = −23.85, p < 0.05).

This substantial improvement in both theoretical and practical examination performance affirms the effectiveness of the DEP in preparing students for licensure-level assessments, thereby supporting its continued implementation in the dental curriculum to improve academic and clinical competencies.

Discussion

The aim of this study was to assess the effectiveness of the Dental Enhancement Program (DEP) in improving the performance of graduating dentistry students in both the theoretical and practical components of their examinations. Baseline pre-test results revealed generally poor performance in the theoretical phase and fair performance in the practical phase, underscoring the need for structured, targeted interventions to enhance student competencies across both domains.

Both the first- and second-semester cohorts demonstrated statistically significant improvements in theoretical and practical examination scores following participation in the DEP. However, the magnitude of improvement differed between semesters. In the first semester, theoretical scores increased markedly from a mean of 1.52 ± 0.45 pre-test to 3.74 ± 0.39 post-test, an absolute gain of 2.22 points. Practical scores also improved significantly, rising from 2.15 ± 0.25 to 3.18 ± 0.17, an increase of 1.03 points. In contrast, the second semester showed a smaller absolute gain in theoretical scores, improving from 1.03 ± 0.07 to 2.76 ± 0.44, a difference of 1.73 points. However, the practical scores in the second semester increased from 2.26 ± 0.25 to 3.50 ± 0.19, an increase of 1.24 points, which is higher than the practical improvement observed in the first semester.

These results suggest that the first-semester cohort experienced greater gains in theoretical knowledge, while the second-semester cohort showed relatively greater improvements in practical skills. This may reflect differences in curriculum focus, students' prior exposure to content, or learning preferences at different stages of the program. Additionally, the second semester's lower baseline theoretical scores may indicate challenges with retention or recall of earlier material, which could have limited the magnitude of improvement despite significant gains.

While theoretical scores improved significantly, some content areas, particularly those taught earlier in the curriculum (e.g., Restorative Dentistry, and Anesthesiology and Pharmacology), showed relatively smaller gains. This may reflect long-term knowledge attrition due to the time gap between original instruction and review, highlighting the need for periodic refresher modules throughout the curriculum to support retention.
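The absolute gains compared above can be reproduced directly from the reported means. The following is a minimal sketch rather than the authors' analysis script; the dictionary layout and the function name `absolute_gain` are illustrative assumptions, and only the mean values come from the text.

```python
# Reported (pre-test mean, post-test mean) pairs, taken from the text.
# The dictionary structure and key labels are illustrative assumptions.
reported_means = {
    ("first semester", "theoretical"): (1.52, 3.74),
    ("first semester", "practical"): (2.15, 3.18),
    ("second semester", "theoretical"): (1.03, 2.76),
    ("second semester", "practical"): (2.26, 3.50),
}


def absolute_gain(pre_mean: float, post_mean: float) -> float:
    """Return the post-test minus pre-test mean, rounded to two decimals."""
    return round(post_mean - pre_mean, 2)


# Compute the gain for every cohort and examination phase.
gains = {key: absolute_gain(pre, post) for key, (pre, post) in reported_means.items()}

for (cohort, phase), gain in gains.items():
    print(f"{cohort:<16} {phase:<12} gain = {gain:.2f}")
```

These are differences between cohort means only; they say nothing about the spread of individual gains, which would require the student-level scores.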

Recognizing these semester-specific trends can guide the refinement of the DEP, such as enhancing theoretical content reinforcement for the second semester and further supporting practical skill development in the first semester, thereby balancing improvements across domains. Research has consistently shown that students who participate in tutorial programs tend to achieve higher success and retention rates, as these programs not only enhance general study skills but also foster better teacher-student engagement.15 Similarly, a study found a significant positive impact of tutoring on academic performance.16 The improved performance in the post-test practical exam further validates the effectiveness of the mentoring intervention. This aligns with a study in the nursing sector, which demonstrated significant positive effects of mentoring across various domains, particularly in leadership and resource optimization.17 These findings are consistent with another study which highlighted the benefits of mentoring for both mentees and mentors, including professional development and opportunities for reflection.18 Other studies also support the role of mentoring in providing academic and professional assistance, emphasizing competence, achievement, and emotional and professional support.6,12

The significant improvement in both theoretical and practical exam performance from pre-test to post-test suggests that the DEP effectively prepared students for their exams, underscoring its value in enhancing academic outcomes. This is consistent with a study that found a positive correlation between perceived teacher feedback and academic performance.19 Similarly, a study on middle school students showed that developmental assessment was constructive, motivating, and beneficial for self-evaluation, ultimately leading to improved learning outcomes.20 The effectiveness of mentoring in academia is further supported by a study showing that mentoring positively impacts career advancement, scholarly confidence, and goal setting.21 These results also align with findings that enhancement programs significantly contribute to students' academic success.22

Mentorship programs, particularly for students who are below average and need additional support, have been shown to improve academic performance.23 This study also supports the notion that mentoring and comprehensive review programs benefit practitioners at various stages of their careers, not just during transitional periods, as they continue to develop confidence, knowledge, and skills.14 On the other hand, students participating in problem-based learning tutorial sessions appear to exhibit problem-solving and analytical thinking skills as well as personal and interpersonal attributes.24 The Mentor-Mentee Program (MMP) provided essential moral, psychological, and professional support, helping students prepare for exams and improving communication, socialization, and networking skills.25 The program's success was attributed to active participation and strong mentor-mentee rapport, with the online implementation yielding positive outcomes. A separate study examining the impact of a mentorship program on academic performance found a significant increase in exam scores, particularly among female students and those who had scored less than 50% on pre-program assessments.23 This program was especially effective in improving the academic performance of students requiring additional support and guidance. Similarly, a study underscores the use of tailored mentorship programs that recognize the nuanced effects on students' satisfaction, professional development, and personal growth. As a reciprocal process, effective mentorship cultivates a collaborative environment that benefits both mentors and mentees.26

The DEP was intentionally designed to accommodate students with varying academic backgrounds and learning needs. Its content development process involved a multi-disciplinary faculty team who aligned topics with the competencies tested in the BLED, addressed recurring deficiencies identified from past licensure performance reports, and incorporated insights from pre-test diagnostic results to tailor both cohort-wide and individual learning interventions. This targeted approach enabled the program to deliver both breadth and depth, while providing adaptive support for underperforming students. Despite this carefully structured design, faculty observations and performance trends revealed that a significant number of students devoted more time and effort to preparing for practical components than for theoretical study. While this focus likely contributed to strong gains in practical performance, it may have limited the potential improvements in theoretical outcomes for certain participants. This disparity underscores the challenge of balancing student attention across different assessment domains, particularly when practical skills are perceived as more immediate or tangible. To address this, future iterations of the DEP could integrate theory-based discussions within practical sessions, implement balanced performance targets, or introduce structured reflection exercises that reinforce theoretical concepts alongside hands-on practice. Such strategies may help ensure that students develop both the practical proficiency and the theoretical understanding necessary for success in licensure examinations.

Entering professional practice presents significant challenges for new practitioners, as this transitional period involves applying the knowledge, skills, and attitudes acquired during their education. This stage can be both stressful and challenging, making the guidance and support of a mentor crucial for developing confidence and competence.27 A study demonstrated that academic mentoring is effective in enhancing student outcomes and providing specialized support, with mentor and mentee motivation being key elements of a successful program.28 Similarly, another study showed that mentoring and coaching are beneficial to undergraduates by enhancing their academic engagement, confidence, and capabilities.29 Students whose mentors fostered an environment that encouraged the exploration of individual strengths and capabilities reported stronger learning and development. This is highlighted in a study noting that mentoring primarily aims to facilitate the learning and development of individuals. It is a process that can lead to significant changes for both the individual and potentially their organization. While mentoring often involves the transfer of experience and knowledge from mentor to mentee, it is fundamentally a mentee-driven process. This ensures that the development is tailored to the mentee's specific needs, with the mentee setting the agenda to focus on long-term personal development goals.30

It is also important to recognize that the DEP's effectiveness was measured using a simulated licensure examination rather than actual BLED performance. Although the simulation closely mirrored the real exam in terms of structure, timing, and content scope, this approach limits the ability to establish a direct correlation between DEP participation and actual licensure outcomes. While improvements in theoretical and practical scores suggest enhanced preparedness, the true predictive value of these gains for licensure success remains uncertain. Longitudinal follow-up of graduates' performance on the BLED would provide stronger evidence regarding the DEP's long-term effectiveness, helping to determine whether the program translates simulated improvements into meaningful professional competency and licensure attainment.

One key limitation of this study is its quasi-experimental design without a control group, which restricts the ability to establish clear cause-and-effect relationships. The absence of randomization means that various factors, such as students' prior grade point average (GPA), previous clinical or externship experience, differences in faculty mentorship, peer-organized study groups, inter-rater grading variability, and familiarity with the test stations, may have influenced the results. These confounding variables are often present in real-world academic environments, but they make it challenging to isolate the effect of the intervention itself. Future research employing randomized controlled designs, coupled with standardized grading calibration and more consistent learning opportunities, would help address these issues and provide stronger evidence of the DEP's efficacy. In addition to p-values, reporting confidence intervals and effect sizes would offer a more complete understanding of the magnitude of improvement attributable to the DEP. This would also facilitate meaningful comparisons with similar enhancement initiatives in medical and dental education. While comparable programs have demonstrated short-term success, the novelty and long-term impact of the DEP could be strengthened by incorporating continuous assessment points, embedding spaced repetition for earlier-taught topics, and conducting longitudinal follow-up of graduates to track sustained competence and licensure performance. Such measures would not only clarify the program's lasting value but also provide critical insight into how these interventions translate into real-world professional outcomes.
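As an illustration of the effect-size and confidence-interval reporting suggested above, the sketch below derives approximate values from a reported paired t statistic and mean difference. It is a hedged example and not the authors' analysis. The function name `paired_summary` is hypothetical, the number of paired observations `n` must be supplied by the reader because per-cohort sizes are not stated in this section, and the interval uses a normal approximation rather than the exact t distribution.

```python
import math
from statistics import NormalDist


def paired_summary(mean_pre, mean_post, t_stat, n, confidence=0.95):
    """Approximate effect size and CI from a reported paired t-test.

    n is the number of paired observations. It is an input the reader
    must supply; cohort sizes are not given in this section.
    """
    mean_diff = mean_post - mean_pre
    # For a paired t-test, |t| = |mean_diff| / SE, so SE can be recovered.
    standard_error = abs(mean_diff) / abs(t_stat)
    # Normal approximation to the critical value (an assumption; the exact
    # interval would use the t distribution with n - 1 degrees of freedom).
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    ci = (mean_diff - z * standard_error, mean_diff + z * standard_error)
    # Cohen's d_z for paired designs: mean_diff / s_d, equivalent to |t| / sqrt(n).
    d_z = abs(t_stat) / math.sqrt(n)
    return {
        "mean_diff": mean_diff,
        "standard_error": standard_error,
        "ci": ci,
        "d_z": d_z,
    }


# Usage with the first-semester theoretical values from the text.
# n=40 is a PLACEHOLDER, not the actual cohort size.
example = paired_summary(mean_pre=1.52, mean_post=3.74, t_stat=-28.04, n=40)
print(example)
```

Because the inputs are rounded summary statistics, any values obtained this way are approximate; the student-level data would be needed for exact intervals and effect sizes.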

In summary, the findings of this study, supported by evidence from related research, demonstrate that the DEP, through its integrated review sessions, tutorials, and mentoring, effectively enhances the performance of graduating dentistry students in both the theoretical and practical components of their examinations. The program appears particularly effective in enhancing practical skills; however, targeted refinements aimed at strengthening theoretical retention, combined with long-term follow-up, could further maximize its impact on licensure readiness and professional competence. Incorporating periodic refresher modules or spaced-repetition strategies earlier in the curriculum may help sustain knowledge retention and ensure that students are equally well-prepared across all domains of the licensure examination.

Conclusion

The Dental Enhancement Program (DEP) significantly improved both theoretical and practical examination performance of graduating dental students. The statistically significant increases in post-test scores across multiple domains demonstrate the DEP's effectiveness in reinforcing academic knowledge and clinical competencies. These findings support the continued implementation of enhancement programs to better prepare dental students for licensure examinations and professional practice.

Based on these results, it is recommended that future iterations of the DEP intensify focus on content areas where students showed the lowest pre-test scores or minimal improvement, ensuring targeted and effective review sessions. Regular updates and revisions of examination questions and review materials should be maintained to remain aligned with the Professional Regulation Commission's guidelines. Furthermore, follow-up studies should be conducted to correlate DEP participation with actual licensure examination outcomes to assess real-world efficacy.

Looking ahead, advancements in educational technology present promising avenues to complement and potentially augment traditional mentorship and tutoring components of enhancement programs. Specifically, the development and integration of personalized Artificial Intelligence (AI)-powered learning assistants could offer scalable, adaptive, and individualized support tailored to each student's unique strengths and weaknesses. These AI systems could provide real-time feedback, customize learning pathways, automate performance tracking, and recommend targeted study resources, thereby addressing persistent challenges in theoretical knowledge acquisition identified in this study. Incorporating AI-driven tools alongside human mentorship could optimize learning efficiency, reduce resource burdens, and enhance accessibility, especially in resource-limited settings.

In summary, the DEP offers a robust framework for improving dental students' preparedness for licensure examinations. Future program enhancements that incorporate evidence-based pedagogical strategies and emerging AI technologies hold substantial potential to further elevate student outcomes and contribute to the advancement of dental education.

Authors' contributions

JMA and SSD contributed to conception and design, data analysis, and interpretation. SSD performed data collection and drafted the manuscript. JMA reviewed and revised the manuscript. All authors read and approved the final version of the manuscript.

Availability of data and materials

The data from this study are not publicly available owing to confidentiality considerations, but the instruments used are available. Data requests may be directed to the corresponding author.

Ethics approval and consent to participate

Ethical approval was obtained from the Research Ethics Committee of the Research Innovation Extension and Community Outreach, University of Baguio (REC Code: 2022–017).

Participation was voluntary, and all participants had the right to withdraw from the study at any time. They signed the informed consent form before participation.

Declaration of generative AI and AI-assisted technologies in the writing process

During the preparation of this work, the authors used ChatGPT to refine language, improve clarity, and correct spelling and grammatical errors. After using this tool, the authors reviewed and edited the content as needed and took full responsibility for the content of the publication.


Funding

This study was supported by the Research Innovation Extension and Community Outreach, and the School of Dentistry of the University of Baguio.

The authors declare no conflict of interest.

The authors gratefully acknowledge Mr. Oliver Richard C. Celi for his assistance with the statistical analysis, and Dr. Joy Lane N. Cuntig and Dr. Aida A. Dapiawen for their valuable suggestions in the development and validation of the scoring rubrics. The authors also extend their sincere appreciation to the participants for their time and effort in taking part in this study.