This study provides an evidence-based, integrated appraisal of artificial intelligence (AI)-generated deepfakes by combining a cross-disciplinary literature synthesis with original opinion-poll evidence from seven European countries. A SWOT matrix distils convergent concerns (weaponised disinformation, privacy erosion, and the detection arms race) alongside under-explored opportunities in education, therapy, and creative industries. To test whether these scholarly themes resonate with citizens, a computer-assisted web survey (N = 7,083) measured perceived risks and benefits across 10 specific scenarios for each theme. Correspondence analysis and Bonferroni-adjusted means reveal a pronounced age gradient for benefits, whereas the age pattern for risk perceptions varies by country: younger cohorts are noticeably less alarmed only in Sweden, France, and Czechia. Geographically, Dutch, German, British, and Italian publics prove the most enthusiastic; the United Kingdom (UK) couples similar enthusiasm with markedly higher risk vigilance, whereas Czech and Swedish respondents remain consistently sceptical, underscoring a broad, though imperfect, west/south versus central/north divide. The Netherlands, Germany, the UK, and Italy value pro-social applications (i.e., realistic crisis drills, public-interest campaigns, and therapeutic ‘mental-health’ avatars), with the Netherlands topping four benefit items and Italy favouring commercial and entertainment uses such as virtual brand ambassadors. By contrast, Czech and Swedish respondents assign uniformly low benefit scores. In terms of risk perceptions, the UK and Czechia register the greatest vigilance, Sweden is the most relaxed, and the remaining countries are intermediate. These divergences appear to be associated with levels of digital literacy and regulatory maturity. The survey reveals a statistically and practically significant gap between perceived risks and benefits: across all seven countries, respondents, on average, rate risks higher than benefits.
Regression estimates indicate that older age, lower household income, and being a woman are associated with a wider gap, primarily through lower perceived benefits, whereas tertiary education and residence in certain western or southern European countries (notably Germany and Italy) are associated with more balanced appraisals. This study concludes that layered governance, interoperable detection standards, and targeted literacy programmes are urgently required.
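To make the headline comparison concrete, the following minimal Python sketch shows one way a respondent-level risk-benefit gap and Bonferroni-adjusted p-values could be computed. It is illustrative only: it assumes that each respondent rates the 10 risk and 10 benefit scenarios on a common numeric scale and that countries are compared pairwise. The function names, country codes, and toy ratings are hypothetical and are not the study's data or analysis code.

```python
from itertools import combinations
from statistics import mean

# Hypothetical country codes for the seven surveyed publics ("UK" rather than ISO "GB").
COUNTRIES = ["CZ", "DE", "FR", "IT", "NL", "SE", "UK"]


def risk_benefit_gap(risk_ratings, benefit_ratings):
    """Mean risk rating minus mean benefit rating for one respondent.

    A positive value means risks are rated higher than benefits.
    Assumes both lists use the same numeric scale (an assumption, not the survey spec).
    """
    return mean(risk_ratings) - mean(benefit_ratings)


def bonferroni_adjust(p_values):
    """Bonferroni correction: multiply each p-value by the number of tests, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# All pairwise country comparisons: C(7, 2) = 21 tests.
country_pairs = list(combinations(COUNTRIES, 2))

if __name__ == "__main__":
    # Toy ratings for a single hypothetical respondent (not survey data).
    risks = [4, 5, 4, 4, 3, 5, 4, 4, 5, 4]
    benefits = [2, 3, 2, 3, 2, 2, 3, 2, 2, 3]
    print(f"gap = {risk_benefit_gap(risks, benefits):.2f}")
    print(f"{len(country_pairs)} pairwise tests; per-test alpha = {0.05 / len(country_pairs):.4f}")
    print(bonferroni_adjust([0.001, 0.01, 0.04]))
```

Capping adjusted values at 1.0 keeps them interpretable as probabilities; with many comparisons, the Bonferroni correction becomes deliberately conservative.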

Several critical factors drive the rapid proliferation of deepfakes and generative artificial intelligence (AI) technologies. First, technological breakthroughs in generative adversarial networks (GANs) and deep learning have substantially lowered the barriers to creating hyper-realistic audio-visual forgeries, enabling even novice users to produce sophisticated deepfakes (Morris, 2024; Domenteanu et al., 2024). The wealth of data on social media platforms provides ample material from which these algorithms learn, further enhancing the perceived authenticity of the generated content (Morris, 2024). Additionally, the broad accessibility of generative AI tools has democratised the production of deepfakes, allowing them to be employed for both benign and malicious ends, including misinformation, identity fraud, and political manipulation (Paterson, 2024; Singh & Dhiman, 2023). The societal ramifications of deepfakes are intensified by the rapid dissemination mechanisms of social media, through which fabricated videos can quickly reach millions of users, influencing public perception and eroding trust in information (The Rise of Deepfake Technology, 2023; Boté-Vericad & Vállez, 2022). The ethical and legal complications posed by deepfakes are exacerbated by the difficulty of detecting and regulating such material, as existing detection methods struggle to keep pace with evolving deepfake techniques (Supriya et al., 2024; Hao, 2024). Moreover, the geopolitical climate, shaped by events such as the COVID-19 pandemic and the Russia–Ukraine conflict, has accelerated the use of deepfakes for propaganda and misinformation, further fuelling their proliferation (Domenteanu et al., 2024).
The convergence of these technological, social, and political elements underscores the complexity of deepfakes and generative AI, demanding a wide-ranging response that combines technological innovation, regulatory measures, and public awareness to mitigate their detrimental effects (Paterson, 2024; Ferrara, 2024).

Deepfakes, which emerge from advanced AI and deep learning, endanger democracy, cybersecurity, social trust, and personal rights while raising multiple ethical and legal issues. Research suggests that these fabricated media undermine democratic processes by spreading misinformation and influencing public opinion, notably during election campaigns (Diakopoulos & Johnson, 2021), as observed in 11 countries in 2023 (Łabuz & Nehring, 2024). Their threat is amplified in developing regions, where slower technological progress and limited public awareness heighten their harmful effects (Noor et al., 2024). Moreover, deepfakes harm individuals by creating convincing yet misleading portrayals of them, including insertion into pornographic material (Fehring & Bonaci, 2023; Öhman, 2020). Their impact extends to cybersecurity, as they can alter public perception and endanger institutional stability, thereby spurring demand for effective detection tools and legal frameworks (Fehring & Bonaci, 2023). The Political Deepfakes Incidents Database documents their prevalence and significance, offering valuable insights to policymakers and researchers (Walker et al., 2024). Although AI may enhance political discourse, manipulative deepfakes raise ethical dilemmas and cast doubt on the authenticity of democratic processes (Battista, 2024; Flattery & Miller, 2024; Pawelec, 2022). Consequently, mitigating these challenges requires an inclusive approach that integrates technological safeguards, public education, and legal interventions to uphold democratic values and social confidence (Noor et al., 2024; Fehring & Bonaci, 2023).

Despite their controversial aspects, deepfakes, as a communication innovation, carry meaningful advantages for society, creativity, and other positive applications. Because they can generate lifelike synthetic media, they can serve multiple beneficial purposes. For example, deepfakes can foster improvements in creative industries by helping filmmakers and artists produce content that would otherwise be impractical or prohibitively expensive, stimulating breakthroughs in media production (Whittaker et al., 2023). In education, deepfakes can create immersive and engaging experiences (e.g., historical re-enactments and language learning tools) that enhance learning outcomes and broaden access (Wazid et al., 2024). Additionally, they can help safeguard intellectual property through systems such as FORGE, which can mislead intruders attempting to steal sensitive data, thereby bolstering cybersecurity (Alanazi et al., 2024). On a societal level, deepfakes can facilitate community-led initiatives and awareness campaigns that encourage unity and understanding (Machin-Mastromatteo, 2023). Moreover, digital health communities can employ them to tailor motivational content that inspires healthy behaviours and community participation (Nguyen et al., 2023). While they pose risks such as misinformation and privacy breaches, responsible frameworks and ethical standards can harness deepfakes’ potential to produce various social benefits (Alanazi et al., 2024; Wazid et al., 2024; cf. Cavedon-Taylor, 2024). Consequently, despite inherent challenges, deepfakes’ capacity to stimulate novel ideas and yield beneficial results underscores their relevance in modern society.

Scholarship on deepfakes remains fragmented across fields such as law, political science, media and communication studies, and computer science, leaving a gap that calls for a unifying framework to synthesise these differing approaches. Because empirical research on public perceptions of deepfakes is scarce, particularly at a multi-country European scale, this study addresses three core research questions (RQs). Its primary aim is to construct an integrated framework that (a) consolidates disparate disciplinary insights on deepfakes and (b) assesses their real-world resonance through original, cross-national survey evidence, thereby supplying a coherent evidentiary foundation for scholarship and policy.

RQ1: How have deepfakes been studied across social sciences, humanities, and technical fields? By examining diverse approaches and focuses, we can establish a structured overview of the academic literature on deepfakes.

RQ2: What are the strengths, weaknesses, opportunities, and threats identified by different disciplines? Where do they converge or diverge? A comparative SWOT analysis can offer insight into each domain’s major contributions and blind spots, revealing overlapping concerns and unique perspectives.

RQ3: What do European citizens believe about deepfakes and generative AI, and how do demographic, cultural, and literacy factors shape these perceptions? This empirical component highlights the importance of understanding not merely expert debates but also real-world beliefs and experiences. By integrating fragmented scholarship with novel primary data from our own statistically representative population sample across seven European countries, this study offers a cohesive, cross-disciplinary exploration of deepfake technologies, stressing the urgent need for informed policy decisions, ethical regulations, and public education initiatives that address the complexity of these evolving challenges.

The article is structured to link the literature review, comparative assessment, and empirical findings into a coherent whole. Section 1 offers a detailed literature review, highlighting foundational studies and disciplinary perspectives on deepfakes. Section 2 introduces a SWOT analysis that identifies central strengths, weaknesses, opportunities, and threats, drawing together insights from diverse academic fields. Section 3 presents empirical findings derived from original opinion-poll data in seven European countries, offering a nuanced view of how Europeans perceive and experience deepfakes and generative AI. Concluding remarks reflect on policy, ethical implications, and broader social consequences, offering practical guidance for stakeholders. Collectively, these sections situate deepfakes within a structured analytical framework, elucidate empirical realities, and propose strategies to inform future inquiry and decision-making in the European context.

Literature review and categorisation

This section systematically reviews and categorises existing scholarly research on deepfakes, emphasising each field’s unique perspectives. Subsections highlight major debates, landmark studies, and thematic gaps.

The discourse surrounding deepfakes in Security Studies and International Relations features considerable debates, notable research, and unresolved questions, particularly regarding national security (Sayler & Harris, 2023), disinformation campaigns, and political manipulation (MacCarthaigh & McKeown, 2021). Deepfakes—synthetic videos created with advanced AI—threaten state stability by enabling misleading content that inflames social tensions or sways public sentiment (Navarro Martínez et al., 2024). Agarwal et al. (2004) caution that forging videos of world leaders could prompt constitutional crises or civil unrest. Likewise, Taylor (2021) highlights the anxiety among Western democracies regarding deepfakes’ potential to compromise electoral integrity and undermine defence strategies. These synthetic media tools influence the balance of power by shaping deterrence, facilitating false-flag operations, and enabling espionage. Chang et al. (2022) emphasise how warped information can be harnessed to aid military leaders, illustrating how deepfakes might achieve similar disruptive outcomes. Meanwhile, Landon-Murray et al. (2019) address the ethical complexities of disinformation in U.S. foreign policy, noting how deepfakes intensify concerns in the ‘post-truth’ age. Adding to this body of work, scholars have examined the role of deepfakes in the broader sphere of misinformation and political manipulation, underscoring that these threats have spread rapidly since deepfakes emerged publicly in 2017 (Carvajal & Iliadis, 2020; Frankovits & Mirsky, 2023). Despite these advances, gaps persist in clarifying the full effect on media producers and the measures required to limit these threats, especially in hybrid warfare and diplomatic contexts.

In parallel, research on hybrid warfare and diplomacy points to the asymmetric risks posed by deepfakes, highlighting how adversaries exploit synthetic media to destabilise political climates and erode trust in digital networks (Veerasamy & Pieterse, 2022). Beretas (2020a, 2020b) frames deepfakes as a critical component of cyber hybrid warfare, stressing their capacity to undermine societal cohesion. Chemerys (2024) highlights the urgency of strengthening media literacy so that the public can recognise and reject manipulative content disseminated through deepfake channels. On the technological side, Katarya and Lal (2020) spotlight GANs as the driving force behind deepfakes, proposing SSTNet as a leading detection model while also noting that document and signature forgeries remain under-examined. Weikmann and Lecheler (2023) observe that while fact-checkers acknowledge deepfakes as a looming challenge, other forms of misleading material still pose more immediate hurdles, suggesting that current countermeasures are not yet fully equipped for synthetic media. Patel et al. (2023) emphasise the need to deepen understanding of deepfake generation processes so that optimised detection tools can be deployed effectively. Collectively, these studies affirm that closer collaboration among policymakers, technologists, and civil society groups is essential for crafting robust detection methods and digital awareness initiatives, enabling stakeholders to address deepfakes’ corrosive impact on security, diplomacy, and public trust more effectively (Ali et al., 2022).

The emergence of deepfakes has sparked significant debates in legal scholarship around the regulation of audio-visual manipulation and the challenges posed by advanced AI technologies. Deepfakes, hyper-realistic synthetic media created via deep learning, raise concerns over privacy rights, intellectual property, defamation, and the integrity of information in the digital age (Mustak et al., 2023; Milliere, 2022). Their legal implications are profound, as they can spread misinformation, influence elections, and enable non-consensual pornography, affecting social relationships, democracy, and the rule of law (Sloot & Wagensveld, 2022; Karasavva & Noorbhai, 2021). The European Union’s (EU) evolving regulatory framework, including the AI Act and the Digital Services Act (DSA), aims to assign responsibilities to Very Large Online Platforms and establish AI standards (Sloot & Wagensveld, 2022). However, these measures are deemed insufficient, prompting calls for amendments to privacy and data protection laws, as well as revised free-speech policies, to limit the harm caused by deepfakes (Sloot & Wagensveld, 2022). Landmark studies emphasise the necessity of robust detection and prevention methods, along with ethical guidelines for media and entertainment usage (Wang et al., 2022a; Lees et al., 2021; Lu & Yuan, 2024). Despite these efforts, research gaps persist around legal definitions, enforcement, and support for victims (Karasavva & Noorbhai, 2021; Vizoso et al., 2021).

Regulating deepfakes is a complex and ongoing challenge, prompting debates over definitions, enforcement, and other hurdles posed by AI-generated synthetic media. These ultra-realistic forgeries threaten multiple sectors, including criminal justice, where they can taint evidence and erode institutional trust (Sandoval et al., 2024). The absence of a universal legal definition hampers regulatory consistency, as shown by divergent U.S. state laws that variously focus on factors such as AI use and falsified media (Meneses, 2024). Similar shortcomings emerge in Canadian policy, which does not effectively address deepfake pornography, prompting calls for explicit language on non-consensual synthetic material (Karasavva & Noorbhai, 2021). Rapid dissemination through social media intensifies these threats, resulting in marketplace deception and personal harms such as identity theft (Mustak et al., 2023). Proposed solutions include privacy law reforms, restrictions on certain forms of expression, and pre-emptive rules on deepfake technologies (Sloot & Wagensveld, 2022). Beyond these policy concerns, therapeutic contexts such as grief counselling raise additional ethical and legal issues tied to the distortion of reality (Hoek et al., 2024). As calls for enhanced detection frameworks mount, researchers emphasise that such systems can help curb misinformation and misuse (Rathoure et al., 2024). In sum, the legal regulation of deepfakes demands an approach that balances innovation with protective safeguards.

The EU’s response to deepfakes intersects with privacy, intellectual property, defamation, and the wider regulatory landscape, including the GDPR, the AI Act, and the DSA. The AI Act attempts to define deepfakes and mandate transparency but has been criticised for overlooking certain scenarios, such as non-consensual pornography, leaving gaps in enforcement (Łabuz, 2024; 2023). Privacy breaches remain central, as the unauthorised use of personal data can lead to reputational harm and emotional distress. Comparable difficulties arise outside the EU: in China, existing rules such as Article 1032 of the Civil Code and the Personal Information Protection Law provide limited clarity, complicating protection efforts (Han, 2024). Moreover, deepfakes undermine democratic norms by diminishing trust, and current EU measures are deemed inadequate to counter this (Karunian, 2024). Developer accountability has been proposed as a way to limit harm, directing attention toward ethical and governance-based strategies (Pawelec, 2024; Rini & Cohen, 2022). While the AI Act represents a pioneering step, experts recommend stronger definitions and improved oversight to tackle AI-driven synthetic media (Hacker, 2023). Likewise, the DSA obliges Very Large Online Platforms to control harmful media, though its effectiveness remains uncertain (Birrer & Just, 2024). Consequently, cooperation among regulators, technologists, and civil society is key to ensuring that EU law evolves alongside fast-paced technological advances.

From a Science and Technology Studies (STS) viewpoint, deepfakes offer varied opportunities and concerns, reflecting the dynamic relationship between technology and society. On the one hand, they can energise fields such as entertainment and education by enabling realistic simulations and re-enactments, enhancing creative expression and learning experiences (Stewart & Williams, 2000). On the other hand, their hyper-realistic form can be exploited to spread disinformation and propaganda, contributing to public confusion and undermining trust in institutions (Tahir et al., 2021). This mixed potential illustrates how social values shape—and are shaped by—new technologies. Limited public knowledge about deepfakes—often influenced by age, gender, and education—further amplifies the risk of misuse, indicating a growing need for digital literacy initiatives (Seibert et al., 2024; Tahir et al., 2021; Fallis, 2020; Habgood-Coote, 2023). In this context, an STS emphasis on co-evolution reminds us that technological development is non-deterministic, shaped by cultural, ethical, and political factors (Boerwinkel et al., 2014; Keulartz et al., 2004). By recognising deepfakes as more than mere technical artefacts, scholars encourage participatory mechanisms that include diverse stakeholders, thereby addressing gaps in current STS and ethical frameworks. Such measures can strengthen both societal understanding and regulatory approaches, reducing the risks while harnessing beneficial applications of deepfake technologies (Sabanovic, 2010).

Socio-technical imaginaries around deepfakes reflect both promising and troubling narratives, shaping how the public perceives their potential uses. For instance, human-computer interaction can benefit from the technology, as seen in design tasks where deepfake personas improve user engagement, though perceptual glitches still pose challenges (Kaate et al., 2024). Similarly, digital resurrection in campaigns such as ‘Listen to My Voice’ demonstrated the ability of deepfakes to evoke strong emotional reactions and raise social awareness, even if these efforts sparked ethical questions about autonomy and posthumous consent (Lowenstein et al., 2024). Balancing these positives, deepfakes remain closely linked to misinformation and the erosion of trust in digital content, prompting the development of countermeasures to minimise harm (Lyu, 2024). A general lack of familiarity with deepfakes—especially among women—reinforces negative perceptions, calling attention to the influence of sociodemographic factors in shaping attitudes (Seibert et al., 2024). Emotional responses, such as fear or interest, play a pivotal role in these imaginaries, as demonstrated in the Irish anti-fracking context (Hughes, 2024). Deepfakes can also offer possibilities for activism or self-expression; however, concerns still arise over identity control and surveillance (Doğan Akkaya, 2024). These contrasting visions highlight the importance of ethical guidelines to steer deepfake innovation toward beneficial societal outcomes.

Deepfakes, emerging from deep learning and generative AI, present a dual scenario for the economy by introducing novel markets for AI-driven synthetic content and simultaneously posing significant challenges for creative industries (Akbar et al., 2023). On the positive side, this technology has the potential to revolutionise audio-visual production, lowering costs and enabling realistic media creation across entertainment, advertising, and education (Milliere, 2022; Kietzmann, Mills, & Plangger, 2021). Such possibilities could spark fresh economic growth, as new markets for AI-generated content evolve. However, the unauthorised use of creators’ works for training data raises serious copyright and ownership issues, prompting lawsuits that may constrain generative AI to the public domain or specially licensed content (Samuelson, 2023). This tension could dampen innovation, limiting the economic benefits of emerging AI applications. Beyond intellectual property disputes, deepfakes can damage media credibility, undermining trust and leading to economic fallout in fields that rely on reputable content, such as journalism and advertising (Weikmann et al., 2024). Even when viewers do not fully accept these synthetic clips as real, they can harm individual and corporate reputations, resulting in financial losses (Harris, 2021). While certain studies remain optimistic about the creative potential of deepfakes, the overall economic balance hinges on carefully managing the risks and opportunities (Lu & Yuan, 2024; Patel et al., 2023).

Deepfakes present business and cybersecurity with a blend of promise and peril, underscoring the need for robust detection and strategic planning. From a corporate perspective, these hyper-realistic media tools can elevate brand engagement through personalised marketing while simultaneously risking severe brand harm if misused (Mustak et al., 2023). In the cybersecurity realm, advanced methods such as SecureVision combine big data analytics and deep learning to identify and neutralise synthetic content (Kumar & Kundu, 2024). Complementary measures, such as perceptual-aware perturbations and decoy tactics, aim to thwart adversarial manipulation, demonstrating the growing sophistication of counter-technologies (Jointly Defending DeepFake, 2023; Wang et al., 2022b; Ding et al., 2023). Blockchain and distributed ledgers also feature in emerging strategies to validate content authenticity, showing that innovative solutions can help protect high-profile individuals from deepfake impersonation (Gambin et al., 2024; Boháček & Farid, 2022). The financial stakes are high: reputational damage and consumer mistrust may trigger significant losses, while advanced detection systems demand ongoing investment (Domenteanu et al., 2024). Against this backdrop, the public and private sectors weigh costs and benefits, assessing how deepfakes may be harnessed responsibly for digital forensics or creative content (Wang, 2023; Lyu, 2024). Ultimately, striking a balance between capitalising on deepfake innovations and safeguarding against their misuse is essential for sustaining economic growth and securing online environments.

From a sociological angle, deepfakes hold implications for social norms, collective trust, and the ways communities share and process information. In particular, they can amplify societal polarisation by disseminating false narratives that reinforce existing prejudices or sow discord (Verma, 2024; Wan, 2023). Although some argue that the credibility of a video hinges more on its source than its content (Harris, 2021), deepfakes may still disturb viewers psychologically, influencing memory formation and social interactions. This effect is seen when moviegoers find their shared viewing experiences altered by synthetic edits (Murphy et al., 2023). Complicating matters, deepfakes often trigger a third-person effect, where individuals believe they themselves are less likely to be misled than others (Ahmed, 2023a). Meanwhile, the entertainment value of these highly realistic fabrications can normalise deception, potentially dulling ethical concerns among the general public (Wan, 2023). Sociologists also point out that deepfakes undermine collective trust in audio-visual evidence, placing fresh burdens on institutions—such as news outlets—that rely on reliable video material (Shin & Lee, 2022; Whyte, 2020). Even if deepfakes are not always more convincing than other types of disinformation (Hameleers et al., 2022), their capacity to spread quickly on social media intensifies the danger they pose to communal beliefs and behaviours (Karpinska-Krakowiak & Eisend, 2024). Consequently, regulatory measures, technical defences, and digital literacy campaigns have emerged as essential avenues for mitigating these societal risks (McCosker, 2022; Mustak et al., 2023).

Media and communication studies examine deepfakes in terms of framing, virality, and moral panic (Brooks, 2021; Godulla, Hoffmann, & Seibert, 2021). Public discourse often paints them as a dire threat to democratic institutions, given their capacity to fabricate video or audio content that seems genuine (Fehring & Bonaci, 2023; Cover, 2022). This portrayal can heighten alarm, especially as society grapples with the blurred line between reality and fabrication (Broinowski, 2022; Momeni, 2024). Viral circulation on social platforms magnifies the impact, allowing manipulated clips to reach vast audiences swiftly, as observed during the 2020 U.S. Presidential Election (Prochaska et al., 2023; Sorell, 2023). Audiences typically struggle to identify altered material, which can produce public confusion and even incite real-world consequences (Momeni, 2024; Hameleers et al., 2023a). Despite this bleak narrative, some scholars call for a more measured view, noting that deepfakes may be harnessed for creative or educational ends (Cover, 2022; Broinowski, 2022). Ultimately, human agency shapes how these tools are used; however, fragmented research and limited theoretical models still make it difficult to fully grasp deepfakes’ cultural impact (Vasist & Krishnan, 2022). Media literacy programmes, clear ethical guidelines, and tighter oversight represent potential remedies (Holzschuh, 2023; Beridze & Butcher, 2019). By balancing vigilance with an openness to positive applications, media specialists aim to address the complexities of deepfake technologies (Shahodat, 2022).

Psychological research focuses on the cognitive processes that shape individuals’ ability to detect and resist deepfake manipulation (Ask et al., 2023). Analytical thinkers tend to identify fabricated content more readily, assigning lower credibility to suspicious videos and images (Pehlivanoglu et al., 2024; Hameleers et al., 2023b). Similarly, cognitive flexibility, defined as the ability to adapt one’s thinking processes, enables better detection accuracy and more reliable judgement of one’s own performance (The role of cognitive styles and cognitive flexibility, 2023). Familiarity with the subject matter also boosts detection rates: individuals are more adept at spotting distortions involving celebrities or topics they already know (Allen et al., 2023; 2022). Simultaneously, a ‘truth bias’ leads many people to assume that incoming information is accurate, making them vulnerable to manipulation (Pehlivanoglu et al., 2024). Emotional reactions can heighten susceptibility; for instance, unsettling content featuring deceased personalities may trigger discomfort, further clouding judgement (Soto-Sanfiel & Wu, 2024). Real-world conditions such as low-quality footage or divided attention increase the likelihood of missing deepfake cues, suggesting that experimental data may underestimate genuine vulnerability (Josephs et al., 2023; Artifact magnification, 2023). Technological aids such as artefact magnification can raise both detection accuracy and confidence, highlighting the combined importance of cognitive skills and supportive tools (Josephs et al., 2023).

Additional psychological considerations involve confirmation bias, motivated reasoning, and the emotional resonance of deepfakes. Individuals often seek information that aligns with their beliefs, ignoring contradictory or unsettling details (Dickinson, 2024). In the context of deepfakes, this bias can solidify harmful impressions, especially when the fabricated content resonates with viewers’ existing worldviews (Hameleers et al., 2023a, 2023b). Emotional investment heightens these effects, as strong reactions to ideologically charged or personally offensive material can magnify confirmation bias (Dickinson, 2024). Consequently, political figures may be discredited more easily, and public trust in legitimate institutions can erode, especially if the manipulated content appears sufficiently realistic (Ruiter, 2021). Interestingly, some scholars argue that the threat posed by deepfakes is moderated by audiences’ trust in the content’s source: viewers may doubt or dismiss suspicious material if they distrust its origin (Harris, 2021). However, third-person perception still emerges, with individuals believing that others are more susceptible than they are themselves (Ahmed, 2023b). Media literacy initiatives that reveal how cheap and accessible deepfake technologies are can help strip away the veneer of authenticity and reduce undue influence (Shin & Lee, 2022). Overall, understanding these cognitive biases and emotional triggers highlights the urgency of interventions aimed at mitigating deepfakes’ potentially disruptive psychological impact.

Deepfakes, driven by advanced AI methods such as GANs and diffusion models, have prompted significant debates in Computer Science and AI ethics because of their capacity to generate extremely convincing yet fabricated content (Laurier et al., 2024; Hao, 2024; Amerini et al., 2024; Chowdhury & Lubna, 2020). Detection techniques play a critical role in limiting the harm these creations can cause (Buo, 2020). Researchers have tested various strategies, including Convolutional Neural Networks (CNNs), to detect the fine-grained artefacts often hidden within deepfake media (Amerini et al., 2024; Guarnera et al., 2020). Optical flow-based CNNs assess motion inconsistencies in video sequences, effectively tackling cross-forgery scenarios (Amerini et al., 2019; Caldelli et al., 2021). Anomaly detection methods, such as self-adversarial variational autoencoders, help differentiate normal from anomalous latent variables, improving the recognition of manipulated material (Wang et al., 2020). Other innovations include dynamic prototype networks, which generate interpretable explanations for temporal anomalies in deepfake videos (Trinh et al., 2021). Model performance benefits further from data augmentation and transfer learning, yielding high precision and recall in real-world applications (Iqbal et al., 2023). However, the ongoing arms race between more sophisticated generation algorithms and detection tools still necessitates continued research to outpace malicious adaptation (Laurier et al., 2024; Hao, 2024).
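Detection studies such as those cited above commonly report precision and recall for the ‘fake’ class. The minimal Python sketch below shows how these two metrics are computed from binary labels. It is a generic illustration, not any cited author's evaluation code; the label convention (1 = deepfake, 0 = authentic) and the toy labels are assumptions.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the positive ('deepfake') class.

    precision = TP / (TP + FP): share of flagged items that are truly fake.
    recall    = TP / (TP + FN): share of truly fake items that were flagged.
    A metric whose denominator is zero is reported as 0.0.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical ground truth and detector output (1 = deepfake, 0 = authentic).
    truth = [1, 1, 1, 0, 0, 0]
    predicted = [1, 1, 0, 1, 0, 0]
    p, r = precision_recall(truth, predicted)
    print(f"precision = {p:.2f}, recall = {r:.2f}")
```

The two metrics typically trade off: a detector tuned to flag more items tends to gain recall at the cost of precision, which is why both are usually reported together.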

Beyond detection, computer scientists have also focused on ethical frameworks to guide the responsible development and use of deepfake-generating AI. Such guidelines address a broad spectrum of ethical dilemmas, ranging from questions of privacy and authenticity to issues of fairness and broader societal impact. Ali and Aysan (2024) call for adaptive governance models that respond to the shifting ethical landscape of AI, highlighting the need for domain-specific oversight in education, healthcare, and industry. A separate scoping review by Hagendorff (2024) enumerates 378 normative concerns, emphasising the significance of fairness, content safety, and user privacy. In healthcare, Oniani et al. (2023) propose the ‘GREAT PLEA’ principles (governability, reliability, equity, accountability, traceability, privacy, lawfulness, empathy, and autonomy) to steer the ethical adoption of AI in clinical environments. On the legislative front, the EU’s AI Act aims to protect fundamental rights while setting international standards for AI governance (Gasser, 2023). Floridi’s (2019) work on the European Commission’s guidelines for trustworthy AI adds further impetus to these endeavours, asserting that AI should advance human welfare and environmental stewardship. However, operationalising these frameworks remains challenging, as highlighted by Chen et al. (2023), who argue for interdisciplinary methods and inclusive user testing to embed morality into AI systems.

The findings reviewed above provide a foundation for further operationalising the initial RQ3 into six sub-research questions, as follows:

RQ1: Does a consistent age gradient emerge across Europe in perceptions of the risks and benefits of deepfakes?

RQ2: Do European countries cluster in similar positions within the correspondence-analysis space based on their average risk and benefit assessments?

RQ3: Are there significant cross-national differences in perceptions of specific deepfake scenarios—political misinformation, legal evidence, and creative applications—and, if so, do countries rating a scenario as especially risky also view it as less beneficial?

RQ4: What thematic interdependencies can be identified among individual risk- and benefit-related perceptions of deepfake technologies as revealed by covariance analysis?

RQ5: Is there a statistically and practically significant difference between the perceived risks and perceived benefits of deepfake technologies among respondents?

RQ6: To what extent do demographic characteristics (age, gender, country of residence, educational attainment, and income level) explain variation in the difference between perceived risks and benefits of deepfake technologies?

Comparative SWOT analysis

This section presents a comparative SWOT analysis of deepfakes, drawing on insights from various disciplines such as Security Studies, International Relations, Law, Sociology, Media Studies, Psychology, and Computer Science. The aim is to reconcile different perspectives and highlight convergences, divergences, and gaps. Four criteria guide this comparative lens. First, each field emphasises different primary concerns: national security, individual privacy, economic impact, or broader sociocultural issues. Second, proposed solutions range from regulatory approaches and technological innovations to educational and ethical frameworks. Third, conceptualisations of risk vary between immediate or long-term, targeted or global, and incremental or disruptive scenarios. Fourth, the forms of benefit considered span creative industries, political engagement, activism, and novel art or entertainment applications. By applying these criteria, this analysis seeks to draw out the strengths and weaknesses of existing scholarship, pinpointing how each discipline addresses deepfake challenges and opportunities. The resulting synthesis uncovers areas of synergy, for instance, where legal strategies can inform technical solutions, as well as points of contention, such as differing views on whether social trust or national security should take precedence. Overall, this comparative approach highlights how deepfakes cut across multiple policy, cultural, and technological arenas, demanding a more unified understanding and a coordinated response.

Strengths across disciplines

Security Studies and International Relations highlight well-defined threat models that illuminate how deepfakes can manipulate public opinion, erode trust, and undermine global stability. They offer structured frameworks for analysing malicious uses, including disinformation campaigns and espionage. This clarity helps in prioritising immediate risks and informing governmental and intergovernmental strategies. Legal scholarship, meanwhile, excels in outlining potential avenues for regulation and liability, drawing on existing doctrines of defamation, privacy, and intellectual property. These fields also propose reforms to adapt current legislation and introduce responsibilities for content-hosting platforms. The synergy between these domains materialises in discussions of deterrence and enforcement: when legal guidelines reinforce security imperatives, states and multilateral bodies have clearer pathways to prosecute perpetrators or impose sanctions. Hence, the strengths in these areas lie in their focus on comprehensive risk assessments, potential litigation strategies, and the capacity to integrate measures across jurisdictions. They underscore the need for robust institutional frameworks, both to preserve national interests and to protect civil rights. Consequently, they point toward practical approaches that address immediate threats without entirely stifling technological innovation.

Sociology and Media Studies excel at diagnosing how deepfakes shape social norms, collective trust, and media consumption practices. Their research highlights how false narratives become viral, how audiences may respond to manipulated content, and how moral panic can emerge. Such an understanding is crucial in designing public-awareness campaigns that speak directly to community values. Psychology brings a granular view of cognitive processes by revealing how biases—such as truth bias, motivated reasoning, and confirmation bias—amplify or mitigate susceptibility to deepfake content. This knowledge can guide interventions that improve individual resilience, such as targeted media literacy training for users who are especially prone to manipulation. Meanwhile, Computer Science research stands out for its robust detection methodologies, employing advanced algorithms and anomaly detection systems to spot synthetic content. This technical depth can bolster legal and policy measures by providing reliable means to identify illicit manipulations. Together, these fields demonstrate strong analytical capabilities and practical solutions. Sociology and Media Studies offer insights into public perception and cultural contexts, Psychology elucidates why some people are more affected than others, and Computer Science offers concrete techniques for thwarting malicious actors. These strengths collectively build a multifaceted skill set for understanding, detecting, and mitigating deepfake threats.

Weaknesses: fragmentation and limited scope

A recurring weakness across disciplines stems from their siloed nature. Security Studies might stress geopolitical and national-security risks without fully capturing the human security aspect, that is, the personal harms experienced by individuals, such as non-consensual pornography or identity theft. Similarly, legal discussions can be narrowly focused on legislative loopholes and specific definitions, sometimes overlooking the everyday psychological toll of deepfake victimisation. Sociology and Media Studies risk underestimating the technical intricacies of generating and detecting synthetic content, limiting their capacity to propose concrete countermeasures. Furthermore, Computer Science research, while advanced in detection strategies, may overlook ethical and legal implications. These disciplinary blind spots testify to limited scope and fragmented research agendas that fail to connect the dots between, for instance, how psychological vulnerabilities can fuel security breaches or how legal frameworks might need to adapt to the evolving technical landscape. Interdisciplinary knowledge exchange is still sporadic, leaving several aspects of deepfakes unaddressed, especially the experiences of marginalised communities, who may lack the digital literacy needed to recognise complex manipulations. By operating in compartments, each discipline struggles to craft holistic approaches that can manage the broad range of threats posed by deepfakes.

Opportunities: innovative uses, collaborative governance, and enhanced public awareness

Despite significant concerns, deepfakes present opportunities to innovate in areas such as entertainment, education, and business. Sociologists and media scholars suggest that if used responsibly, these technologies could enrich interactive learning, enhance creative storytelling, and open new forms of artistic expression. Computer Science research indicates that advanced AI systems can accelerate scientific visualisation or training simulations, benefiting sectors ranging from healthcare to architecture. Meanwhile, economic analysis points to emerging markets for AI-driven content creation, stimulating job growth and technological entrepreneurship. These positive potentials create compelling arguments for measured support of deepfake development, provided safeguards are in place. Moreover, opportunities manifest in the realm of social impact: community-driven campaigns leveraging deepfakes can highlight social issues, reach broader audiences, and provoke meaningful public discourse. Additionally, legal and policy frameworks might be fine-tuned to support ethical innovation by introducing flexible guidelines that protect against harm without stifling creativity or progress. In sum, deepfakes offer novel prospects for industry expansion, public engagement, and technological evolution, an outlook that can guide balanced policymaking focused on long-term benefits.

Another key opportunity across disciplines lies in forging collaborative governance models. International Relations research highlights the necessity of treaties or multinational agreements to prevent the misuse of deepfakes in propaganda and warfare. When combined with legal proposals for oversight, clear jurisdictional boundaries, and accountability for platforms, a global regulatory framework could emerge. These efforts would be strengthened by sociological and psychological studies identifying exactly where mis/disinformation thrives and how best to counter it with accurate information. Moreover, the synergy of computer scientists, social scientists, and policymakers can drive comprehensive public-awareness campaigns, teaching digital literacy through multifaceted interventions. Harnessing each discipline’s expertise could also result in a standardised approach to labelling AI-generated content, enabling users to make informed decisions. An integrated coalition of academic researchers, industry stakeholders, government bodies, and civil society organisations may develop consistent guidelines and detection tools. This collaborative environment would not only curtail malicious actors but also nurture ethical, beneficial uses of deepfakes. By ensuring people understand the technology’s full potential and pitfalls, it becomes easier to discourage exploitation while simultaneously encouraging positive innovation.

Threats: eroding trust and heightened risks, arms race, and ethical quagmires

From a broad perspective, deepfakes pose threats to democratic institutions, collective trust, and personal autonomy. Security analysts warn of state-sponsored disinformation that can destabilise regions, while legal experts caution that legislation is still insufficient or unevenly applied, leading to enforcement gaps. Sociologists point to the risk of moral panic, where the public may become overly suspicious of genuine media because of the fear of manipulation, further polarising societies. Media researchers suggest that virally spreading deepfakes can cause lasting reputational harm while simultaneously fuelling cynicism about all digital content. Technological arms races raise another concern: as deepfakes become more sophisticated, detection lags, compelling continuous research investment. There is also a risk of regulatory overreach, whereby policymakers enact broad restrictions that hamper legitimate creativity or stifle free expression. In a commercial sense, deepfake-related fraud undercuts consumer trust, potentially leading to significant economic ramifications. These multifaceted threats could converge into a perfect storm of social fragmentation, legal uncertainty, and inflated security costs. Thus, safeguarding against these hazards requires a careful balance of technological advancement, targeted regulation, and vigilant public education.

The accelerating arms race between deepfake generation tools and detection methodologies intensifies the threats faced by users and institutions alike. Every time a more advanced algorithm emerges, detection models must quickly adapt, and the cycle repeats itself. This constant cat-and-mouse dynamic forces stakeholders to invest heavily in research and development, potentially diverting resources from other critical areas. Ethical dilemmas add another layer of complexity. Even beyond malicious misuse, the sheer realism of deepfakes can blur the boundaries between consensual creations and exploitative manipulations, raising questions about identity rights and moral responsibility. If the technology becomes more widely accessible, unscrupulous individuals may produce deepfake content targeting personal relationships or private spheres, such as familial disputes, with potentially devastating consequences. Furthermore, overdependence on automated detection can erode human vigilance, creating a false sense of security. If a detection system fails, it might be harder for humans to step in. Ultimately, these challenges underscore that threats are neither purely technical nor purely ethical; they encompass a broad range of dangers that compound across social, political, and individual domains, requiring cohesive, multi-layered responses.

Comparative analysis: similarities and differences

When synthesising insights across disciplines, clear similarities and differences emerge. A shared recognition is that deepfakes hold both disruptive and transformative potential, prompting calls for strong detection strategies and legal guidelines. However, each field prioritises differently: Security Studies focuses on national stability and disinformation, while media scholarship is more concerned with cultural impact, moral panic, and framing effects. Legal researchers examine concepts such as liability and regulatory gaps, whereas psychologists investigate cognitive susceptibility and emotional responses. Meanwhile, Computer Science concentrates on technological fixes, including detection models and anomaly identification. Some convergences include the universal emphasis on digital literacy, public awareness, and ethical guidelines as key elements of any solution. Divergences are seen in the scale of concerns (global for security experts, individual for psychologists) and in the pace of recommended interventions, with policymakers sometimes demanding immediate action while academic researchers advocate deeper empirical exploration. Overall, the synergy among disciplines rests on the acceptance that no single approach can fully address deepfakes. Only a tightly woven strategy, incorporating legal measures, technological breakthroughs, social education, and ethical considerations, can minimise the risks and harness potential benefits.

Empirical study: public perceptions in seven European countries

Data and research objectives

The study was conducted in partnership with Ipsos as part of an international survey spanning seven European countries. Its primary aim was to gauge public attitudes toward deepfake audio-visual content and, more broadly, AI. Data were collected through Computer-Assisted Web Interviewing with pre-recruited online panels; for the Czech sample, we used the Ipsos Populace.cz panel. Fieldwork was conducted from 10 to 17 February 2025 via a structured questionnaire that the respondents completed in roughly ten minutes. The final dataset comprises 7,083 respondents drawn from nationally representative samples in the Czech Republic (N = 1,005), the United Kingdom (UK) (N = 1,013), Germany (N = 1,000), France (N = 1,022), Italy (N = 1,014), Sweden (N = 1,011), and the Netherlands (N = 1,018). Each country’s sample shows a balanced gender distribution: Czechia includes 493 men and 512 women, the UK 482 men and 531 women, Germany 493 men and 507 women, France 500 men and 522 women, Italy 497 men and 517 women, Sweden 513 men and 498 women, and the Netherlands 502 men and 516 women. The samples are likewise well balanced across age, education, monthly income, and settlement size.

Selecting Sweden, Italy, Germany, the Netherlands, France, Czechia, and the UK maximises structural variance within a compact European sample. The set spans the continent’s cardinal points (Nordic Sweden, Mediterranean Italy, western Germany–the Netherlands–France, east-central Czechia, Atlantic UK), blends large (Germany, France, UK, Italy) and medium populations, contrasts a post-communist EU member with long-standing liberal democracies inside the EU and its leading external counterpart, and captures marked differences in economic models, technological capacity, educational outcomes, and dominant religious traditions. Such heterogeneity offers a rigorous basis for probing how institutional, socio-economic, and cultural contexts shape public attitudes while keeping the broader regional framework constant.

Methods and analysis

The first analytical step centres on the composite variable Deepfakes Risk Perceptions, derived from the respondents’ evaluations of ten deepfake scenarios on a five-point Likert scale. Summing the item scores yields an index from 10 (lowest overall risk) to 50 (highest), providing a panoramic measure of concern about potential harms, ranging from misuse in media and politics to legal proceedings and social contexts.
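The index construction can be illustrated with a short Python sketch. It assumes each item is coded 1 (very low) to 5 (very high) and that only complete responses are scored; the source does not state how missing answers were handled, and the helper name `composite_index` is hypothetical. The same logic applies to the benefits index.

```python
from typing import Sequence

N_ITEMS = 10                    # ten scenario items per index
LIKERT_MIN, LIKERT_MAX = 1, 5   # five-point scale coding (assumed 1-5)


def composite_index(responses: Sequence[int]) -> int:
    """Sum ten Likert responses into a 10-50 composite score.

    Hypothetical helper: it rejects incomplete or out-of-range input rather
    than imputing, because the source does not describe a missing-data rule.
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    for r in responses:
        if not LIKERT_MIN <= r <= LIKERT_MAX:
            raise ValueError(f"response {r} outside {LIKERT_MIN}-{LIKERT_MAX}")
    return sum(responses)


# The index bounds: all 'very low' gives 10, all 'very high' gives 50.
print(composite_index([1] * 10), composite_index([5] * 10))  # 10 50

# A hypothetical respondent's answers to R1..R10.
print(composite_index([5, 4, 4, 3, 4, 3, 3, 4, 4, 5]))  # 39
```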

The ten questionnaire items on the risks (R) of deepfakes were as follows:

- R1: A deepfake video depicts a prominent world leader announcing an unverified military action, potentially escalating global tensions.
- R2: A deepfake is presented as evidence in court and may influence the outcome of a criminal or civil case.
- R3: A deepfake campaign ad falsely portrays a candidate as supporting a controversial policy, influencing voter opinions.
- R4: A deepfake video ‘resurrecting’ a deceased public figure is used for a public event without clear consent from their surviving relatives.
- R5: A government or media corporation uses AI-generated ‘news anchors’ (deepfakes) to shape public opinion on certain policies.
- R6: Companies deploy deepfake ‘brand ambassadors’ to personalise marketing, potentially distorting product claims.
- R7: A viral deepfake on social media accuses a celebrity or influencer of unethical behaviour, sparking public outrage.
- R8: Deepfake content is deliberately designed to trigger emotional reactions (e.g., fear, anger) to manipulate public behaviour.
- R9: Deepfake technologies are evolving faster than detection methods, fuelling an ongoing ‘arms race’.
- R10: A lack of regulation allows deepfake creators to freely publish deceptive content without disclosure.

The second analytical stage introduces the composite variable Deepfakes Benefits Perceptions, capturing the respondents’ views on the potential advantages of deepfake technologies. This index aggregates ratings for ten benefit-oriented scenarios, each scored on a five-point Likert scale. Summing the item scores produces a scale from 10 (very low perceived benefit) to 50 (very high), enabling a clear quantification of how various social and demographic groups value the technology’s positive applications.

The ten questionnaire items on the benefits (B) of deepfakes were as follows:

- B1: Deepfakes are used in emergency preparedness exercises, creating realistic simulations for more effective training of military and diplomatic teams.
- B2: Deepfake technologies are used to protect witnesses by altering their facial identity and voice, allowing them to testify anonymously without fear of retaliation.
- B3: Deepfakes serve as interactive political-education tools, enabling students and citizens to virtually ‘interview’ AI-generated avatars of historical experts.
- B4: A deepfake ‘resurrection’ is created for educational/historical exhibits, bringing long-deceased figures to life for museum visitors with a clear disclosure that it is AI-generated.
- B5: Governments or NGOs conduct deepfake-based awareness campaigns on urgent social issues, using hyper-realistic scenarios to vividly illustrate potential future outcomes.
- B6: Companies use deepfake ‘virtual brand ambassadors’ for small businesses that cannot afford celebrity endorsements, levelling the marketing playing field.
- B7: Interactive media experiences feature deepfake hosts or performers who offer audiences highly personalised entertainment or educational content, enhancing social engagement.
- B8: Therapeutic deepfakes are developed to allow patients to role-play conversations with AI-generated support avatars or practice exposure therapy in a controlled setting.
- B9: Researchers develop open-source deepfake platforms for legitimate uses (e.g., film production, voice dubbing) with built-in ethical safeguards, supporting innovation while promoting responsible development.
- B10: Clear policies on labelling deepfake content are enacted, increasing transparency and user trust, enabling creative uses of AI-generated media without misleading the public.

This study applied a suite of quantitative techniques selected to match each research objective and to provide a reproducible account of how Europeans perceive the risks and benefits of deepfake technologies. We began with correspondence analysis, following the formulation of Hirschfeld (1935) and the later refinements of Benzécri (1973), to visualise the associations between countries and ordinal categories of risk and benefit. Prior chi-square tests confirmed that the contingency tables contained sufficient dependence for meaningful dimension reduction.
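The mechanics behind the correspondence maps can be sketched in a few lines of NumPy using the classical formulation: a singular value decomposition of the table's standardised residuals. This is an illustrative sketch, not the SPSS procedure the authors ran, and the country-by-bin counts below are invented. It demonstrates two identities used when reading the maps: each dimension's inertia is its squared singular value, and the total inertia equals the chi-square statistic divided by N.

```python
import numpy as np


def correspondence_analysis(table):
    """Classical correspondence analysis of a rows x columns contingency table.

    Returns the singular values, row and column principal coordinates,
    and Pearson's chi-square statistic for the table.
    """
    table = np.asarray(table, dtype=float)
    n = table.sum()
    P = table / n                          # correspondence matrix
    r = P.sum(axis=1)                      # row masses
    c = P.sum(axis=0)                      # column masses
    E = np.outer(r, c)                     # expected proportions under independence
    S = (P - E) / np.sqrt(E)               # standardised residuals
    U, sv, Vt = np.linalg.svd(S, full_matrices=False)
    row_coords = (U / np.sqrt(r)[:, None]) * sv      # principal row coordinates
    col_coords = (Vt.T / np.sqrt(c)[:, None]) * sv   # principal column coordinates
    chi2 = n * np.sum(S ** 2)              # Pearson chi-square of the table
    return sv, row_coords, col_coords, chi2


# Hypothetical counts: 3 countries (rows) x 4 index bins (columns).
toy = np.array([
    [40, 120, 200, 140],
    [20, 90, 230, 160],
    [60, 150, 180, 110],
], dtype=float)

sv, row_coords, col_coords, chi2 = correspondence_analysis(toy)
inertia = sv ** 2                          # inertia carried by each dimension
share = inertia / inertia.sum()            # proportion of total inertia
dof = (toy.shape[0] - 1) * (toy.shape[1] - 1)   # degrees of freedom of the test
print("share of inertia per dimension:", np.round(share, 3))
print("chi-square:", round(float(chi2), 3), "df:", dof)

# Total inertia (sum of squared singular values) equals chi2 / N.
assert np.isclose(inertia.sum(), chi2 / toy.sum())
```

With I rows and J columns, at most min(I, J) - 1 dimensions carry inertia, which is why the last singular value here is numerically zero.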

To compare nations on individual scenarios, we employed two-sided tests with Bonferroni-adjusted thresholds (Armstrong, 2014), limiting the probability that multiple comparisons would yield spurious significance. The overall balance of attitudes was examined with paired-samples t tests, which revealed that the respondents consistently rated risks higher than benefits. Covariance analysis then probed the thematic structure of the 20 questionnaire items; by retaining the unstandardised units, it exposed both tight within-cluster ties and weaker, yet interpretable, cross-cluster linkages, most noticeably those involving regulation and transparency. Finally, an ordinary least-squares General Linear Model (GLM) assessed the demographic drivers of the risk–benefit differential. Advancing age, lower income, and being a woman each widened the gap, while higher educational attainment narrowed it; country effects were visible as well, with Czech and UK publics registering the greatest divergence.
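The structure of such an OLS model, with the risk–benefit differential as the outcome and a dummy-coded country factor, can be sketched in NumPy on simulated data. Everything here is an assumption made for illustration: the coding (age in years, woman = 1, tertiary education = 1, income in five hypothetical bands), Czechia as the reference category, and the 'true' coefficients, whose signs merely mirror the directions reported in the text while their magnitudes are invented. It is not the authors' SPSS specification and reproduces none of their estimates.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3000
countries = ["CZ", "UK", "DE", "FR", "IT", "SE", "NL"]

# Simulated respondent characteristics (all hypothetical).
age = rng.integers(16, 80, size=n).astype(float)
woman = rng.integers(0, 2, size=n).astype(float)
tertiary = rng.integers(0, 2, size=n).astype(float)
income = rng.integers(1, 6, size=n).astype(float)   # income band 1-5
country = rng.integers(0, len(countries), size=n)

# Dummy-code country, with Czechia (index 0) as the reference category.
country_dummies = np.zeros((n, len(countries) - 1))
for j in range(1, len(countries)):
    country_dummies[:, j - 1] = country == j

X = np.column_stack([np.ones(n), age, woman, tertiary, income, country_dummies])

# Invented effects on the differential (risk minus benefit); positive widens the gap.
beta_true = np.array([5.0, 0.08, 1.5, -2.0, -0.8,      # const, age, woman, tertiary, income
                      -0.5, -3.0, -1.0, -2.5, -1.5, -1.0])  # UK, DE, FR, IT, SE, NL vs CZ
y = X @ beta_true + rng.normal(0.0, 1.0, size=n)

# OLS estimate: the b minimising ||y - X b||^2.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
names = ["const", "age", "woman", "tertiary", "income"] + \
        [f"country_{c}" for c in countries[1:]]
for name, b in zip(names, beta_hat):
    print(f"{name:>12}: {b:+.3f}")
```

Each country coefficient is read as the shift in the expected differential relative to the reference country, holding the other predictors constant.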

All computations were performed in IBM SPSS Statistics 30, with supplementary tabulation and graphing in Microsoft Excel, ensuring full transparency and ease of replication.

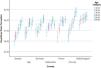

Deepfakes risk perceptions

Fig. 1 charts perceived deepfake-related risk across seven European countries by age cohort. The y-axis denotes risk intensity, with higher values signifying greater concern. Perceptions differ both internationally and generationally. The respondents aged 16–24 generally register lower concern (most clearly in Sweden, France, and Czechia), whereas age effects are muted in Germany, Italy, and the UK. The UK shows the highest risk ratings across all cohorts, while Sweden and Italy record the lowest overall. Error bars illustrate within-group variability; uncertainty is greatest among the youngest and oldest participants. Collectively, the results indicate a generational divide in assessments of deepfake harm, plausibly linked to contrasts in digital literacy, media exposure, and trust in technology.

Fig. 2 positions countries and four Deepfakes Risk Perception categories in a correspondence map; shorter distances denote more similar response patterns. Dimension 1 (singular value = 0.086) accounts for 70.4 % of the total inertia, while Dimension 2 (singular value = 0.046) adds 20.4 %. Together, these axes capture about 91 % of the total inertia, indicating that the two-dimensional display adequately summarises the data. A chi-square statistic of 72.271 (df = 48, p = 0.013) confirms that the association between country and risk category is statistically significant.

Along Dimension 1, the chief axis of differentiation, Sweden (0.388), Czechia (0.332), and France (0.161) appear on the right-hand side, aligning with the lower-risk categories 11.00–20.00 and 21.00–30.00, which also carry positive scores. By contrast, the UK (-0.447), Italy (-0.313), and Germany (-1.141) plot to the left; the high-risk category 41.00+ (-0.672) sits nearby, indicating that the respondents in these countries are more likely to view deepfakes as highly risky. Dimension 2 captures less variance (≈ 20.4 %) but reveals a vertical split: the Netherlands registers a high positive loading (0.404), whereas France and Czechia fall below the horizontal axis. Among the risk groups, 11.00–20.00 is low on Dimension 2 (-0.524), whereas 31.00–40.00 shows a moderate positive value (0.177). The Netherlands, therefore, occupies a somewhat distinct position in the correspondence space.

Deepfakes benefits perceptions

Fig. 3 charts Deepfakes Benefits Perceptions by age cohort in seven European countries. A consistent age gradient emerges: respondents aged 16–24 and 25–34 assign the highest benefit scores (around 33 in Germany and above 34 in the UK), whereas those aged 55–65 and 66–99 cluster between 28 and 31. The steepness of this decline varies, but every country shows the same downward trajectory. Czechia, France, and the Netherlands display a narrower range across cohorts, yet younger groups still lead. France’s overlapping confidence intervals suggest modest cohort contrasts, whereas Sweden and the Netherlands reveal the sharpest drop, with the oldest Swedes registering the lowest mean. In sum, perceived benefits of deepfakes diminish steadily with age, highlighting a pervasive intergenerational divide across Europe.

We conducted a correspondence analysis for Deepfakes Benefits Perceptions (Fig. 4), mirroring the procedure used for the risk index. The first dimension (singular value = 0.116) accounts for 0.014 units of inertia, or 87 % of the total, and thus captures the dominant pattern linking countries to benefit perceptions. The second dimension (singular value = 0.045; inertia = 0.002) contributes a further 13 %, raising the cumulative proportion of explained inertia to 99.9 %; two dimensions are therefore sufficient to summarise the data structure.

On Dimension 1, the UK and Czechia sit on the positive side near the 41.00+ benefits category, indicating that the respondents in these countries hold the most favourable views of deepfakes’ creative and communicative potential; France also leans this way, though closer to the origin. Sweden, the Netherlands, and Italy fall on the negative side, alongside the 31.00–40.00 and 21.00–30.00 categories, reflecting a more cautious stance that likely emphasises ethical or social concerns. Germany, positioned close to zero on both axes, occupies a neutral midpoint between optimism and scepticism.

Taking up 13% of the inertia, Dimension 2 refines these distinctions: the Netherlands loads negatively on both axes, highlighting its distance from the highest-benefit category and accentuating its critical perspective, whereas Czechia loads positively on both, further confirming its optimistic outlook. Together, the coordinates revealed pronounced national contrasts in how the respondents assessed the value of deepfake technologies.

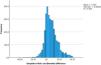

Difference between deepfakes-risk and deepfakes-benefit perceptions

Fig. 5 depicts the distribution of individual risk–benefit differentials for the full sample (N = 6,956). The x-axis records each respondent’s composite risk score minus their composite benefit score; the y-axis represents frequency. Negative values, where benefits exceed risks, occur only sporadically. The histogram is positively skewed: most observations cluster in the 0–10 range, indicating that the respondents generally judge deepfakes to be more hazardous than advantageous, though seldom by an extreme margin. The sample mean is 7.16, and the standard deviation of 9.38 reveals wide dispersion, signalling considerable diversity in individual assessments even as the aggregate leans toward heightened concern.
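Because each respondent contributes one risk score and one benefit score, the paired-samples t test reduces to a one-sample test on the differentials, so the reported summary statistics are enough to reproduce its order of magnitude. The Python sketch below computes the t statistic and Cohen's d_z (mean difference divided by the SD of the differences). The source does not say which effect size underpins 'practically significant', so d_z is an assumed, commonly used choice, and the helper names are hypothetical.

```python
import math
import statistics
from typing import Sequence


def paired_t_from_diffs(diffs: Sequence[float]) -> tuple[float, float]:
    """Paired-samples t and Cohen's d_z from per-respondent risk-minus-benefit differences."""
    n = len(diffs)
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)          # sample SD (n - 1 denominator)
    t = mean / (sd / math.sqrt(n))        # t statistic with n - 1 degrees of freedom
    d_z = mean / sd                       # standardised mean difference
    return t, d_z


def paired_t_from_summary(mean: float, sd: float, n: int) -> tuple[float, float]:
    """The same two quantities computed from reported summary statistics."""
    return mean / (sd / math.sqrt(n)), mean / sd


# Reported values for the full sample: mean 7.16, SD 9.38, N = 6,956.
t, d_z = paired_t_from_summary(7.16, 9.38, 6956)
print(f"t = {t:.1f}, d_z = {d_z:.2f}")  # t = 63.7, d_z = 0.76
```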

Correspondence Analysis of the Risk–Benefit Differential

We extended the correspondence-analysis procedure to the net difference between each respondent’s risk and benefit indices. To aid interpretation, the continuous differential was discretised into six ordered categories as follows:

- ≤ -1.00: Respondents in this group actually perceive benefits as greater than risks; a small but noteworthy subset.
- 0.00–2.00: Very balanced perception; risks are only slightly greater than benefits.
- 3.00–6.00: Mild risk dominance; respondents see some imbalance, but not sharply.
- 7.00–10.00: Moderate difference in favour of risks; growing concern.
- 11.00–17.00: Substantial perceived risk dominance.
- 18.00+: The most concerned group; respondents see deepfakes as far more risky than beneficial.
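Since both indices are sums of integer Likert scores, the differential is an integer between -40 and 40, and the six bins cover that range without gaps. A minimal Python sketch of the mapping follows; the function name is hypothetical and the labels follow the list above. Cross-tabulating these labels by country yields the contingency table that the correspondence analysis decomposes.

```python
from collections import Counter


def differential_category(diff: int) -> str:
    """Map an integer risk-minus-benefit differential to one of six ordered bins."""
    if not -40 <= diff <= 40:
        raise ValueError("differential of two 10-50 indices must lie in [-40, 40]")
    if diff <= -1:
        return "<= -1"
    if diff <= 2:
        return "0-2"
    if diff <= 6:
        return "3-6"
    if diff <= 10:
        return "7-10"
    if diff <= 17:
        return "11-17"
    return "18+"


# Hypothetical differentials for a handful of respondents.
example = [-3, 0, 4, 8, 12, 25, 7]
print(Counter(differential_category(d) for d in example))
```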

The model yields a total inertia of 0.016 and a statistically significant chi-square value (χ² = 113.368, p < 0.001, df = 36), validating the relevance of the association between countries and risk–benefit categories. The first two dimensions together explain 92 % of the total inertia (Dimension 1 = 47.7 %, Dimension 2 = 44.3 %), confirming that a two-dimensional map captures the essential structure of the data.
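The reported figures can be cross-checked with the identity total inertia = χ²/N. The short Python calculation below assumes that the table behind this analysis contains the same 6,956 respondents shown in Fig. 5; the source does not state the N of the contingency table explicitly.

```python
chi2 = 113.368          # reported chi-square for the country x differential table
n_resp = 6956           # assumption: same respondents as in Fig. 5
total_inertia = chi2 / n_resp
print(round(total_inertia, 4))              # 0.0163, reported as 0.016

share_dim1, share_dim2 = 0.477, 0.443       # reported shares of total inertia
print(round(share_dim1 + share_dim2, 2))    # 0.92
# Inertia carried by each displayed dimension (its squared singular value).
print(round(share_dim1 * total_inertia, 5), round(share_dim2 * total_inertia, 5))
```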

Fig. 6 portrays a two-dimensional correspondence map in which the horizontal axis (47.7 % of inertia) represents a continuum from ‘risk-dominant’ perceptions on the left to ‘benefit-sensitive’ or balanced views on the right, while the vertical axis (44.3 %) captures the degree of internal dispersion within each national sample. On the right-hand side, Italy and Sweden occupy positive positions on Dimension 1, adjacent to the bins in which benefits either equal or exceed risks (≤ –1 and 0–2). Their publics, therefore, appear relatively open to the constructive potential of deepfakes or, at a minimum, unconvinced that hazards decisively prevail. Germany sits close to the map’s centre, signalling a middle-ground stance that is neither markedly alarmist nor overtly optimistic. By contrast, France, the Netherlands, the UK, and Czechia gravitate toward bins denoting moderate to substantial risk dominance (7–10 and 11–17). These countries nevertheless differ vertically. The Netherlands plots high on Dimension 2, indicating sizeable within-country variation: alongside a large cautious segment, a counter-group registers either markedly milder or markedly steeper differentials, resulting in a dispersion of opinion. The UK and Czechia fall low on Dimension 2, revealing tighter consensus: both publics coalesce around the judgement that deepfakes pose clearly greater dangers than advantages, with the UK aligning most closely with the 11–17 category and Czechia extending toward the extreme 18+ group.

Collectively, the map depicts the following three patterns: (1) Italy and Sweden exhibit the most benefit-aligned or balanced orientations; (2) Germany and France hover near neutrality; and (3) the Netherlands, the UK, and Czechia adopt strongly risk-centred outlooks, though the Dutch respondents display greater internal disagreement than their Czech and British counterparts.

Cross-national differences

Next, our analysis focuses on the perceived risks associated with deepfake technologies across the countries in the study. The aim is to identify which countries consider specific deepfake scenarios most risky and whether their assessments differ significantly. Using mean scores on a five-point Likert scale (1 – Very low risk, 2 – Low risk, 3 – Moderate risk, 4 – High risk, and 5 – Very high risk) and two-sided tests with Bonferroni correction (Armstrong, 2014), the analysis highlights where perceptions diverge most clearly. The Bonferroni correction is applied in multiple comparisons (e.g., when comparing several countries with each other) to reduce the risk of false-positive results (so-called ‘Type I errors’, i.e., incorrect rejection of a true null hypothesis). Table 1 presents the output of the analysis for risk perceptions. The letters next to the mean values indicate which countries have significantly lower ratings, highlighting where concerns are notably higher.
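The logic of Bonferroni-adjusted pairwise comparisons can be sketched as follows. With seven countries there are 7 × 6 / 2 = 21 country pairs per scenario, so each pairwise test is effectively judged at 0.05 / 21 ≈ 0.0024 (equivalently, each p-value is multiplied by 21 and capped at 1). This is a minimal sketch under stated assumptions: it works from per-country summary statistics (mean, standard deviation, n), pools the variance of the two countries being compared, and uses a normal approximation to the t distribution; the statistical package used by the authors may pool variances differently. The function name, standard deviations, and sample sizes in the example are hypothetical.

```python
import math
from itertools import combinations


def bonferroni_pairwise(groups, alpha=0.05):
    """Two-sided pairwise comparisons of group means with Bonferroni correction.

    groups: dict mapping a label to (mean, sd, n).
    Assumptions: equal variances (pooled over the two groups compared) and a
    normal approximation to the t distribution, adequate for n around 1,000.
    """
    pairs = list(combinations(groups, 2))
    m = len(pairs)                                  # 21 pairs for 7 countries
    results = []
    for a, b in pairs:
        mean_a, sd_a, n_a = groups[a]
        mean_b, sd_b, n_b = groups[b]
        pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)
        se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
        z = (mean_a - mean_b) / se
        p = math.erfc(abs(z) / math.sqrt(2))        # two-sided p-value
        p_adj = min(1.0, p * m)                     # Bonferroni adjustment
        results.append((a, b, z, p_adj, p_adj < alpha))
    return m, results


if __name__ == "__main__":
    # Means from the first row of Table 1; SDs and ns are hypothetical.
    countries = {"A": (4.188, 0.9, 1000), "E": (3.946, 0.9, 1000), "F": (3.798, 0.9, 1000)}
    m, results = bonferroni_pairwise(countries)
    for a, b, z, p_adj, significant in results:
        print(a, b, f"z={z:.2f}", f"p_adj={p_adj:.2g}", significant)
```

Multiplying p-values by the number of comparisons is the simplest form of the correction; it is conservative, which suits a table whose purpose is to flag only clearly robust country differences.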

Table 1. Cross-National Comparison of Deepfake Risk Perception.

| Risks of deepfakes | Czechia (A) | United Kingdom (B) | Germany (C) | France (D) | Italy (E) | Sweden (F) | Netherlands (G) |
|---|---|---|---|---|---|---|---|
| World leader announces unverified military action | 4.188 C D E F | 4.161 D E F | 4.044 F | 3.945 F | 3.946 F | 3.798 | 4.067 F |
| Deepfake used as court evidence | 3.958 C D E F G | 4.014 C D E F G | 3.618 | 3.569 | 3.718 F | 3.550 | 3.653 |
| Campaign ad misrepresents candidate | 4.056 F | 4.183 D E F G | 4.089 F | 4.051 F | 3.995 | 3.909 | 4.029 |
| “Resurrected” public figure used without consent | 3.710 C D E F G | 3.692 C D E F G | 3.427 | 3.455 | 3.478 | 3.483 | 3.383 |
| AI-generated “news anchors” shape opinion | 3.910 F G | 3.905 F G | 3.809 F | 3.893 F G | 3.823 F | 3.599 | 3.737 |
| Deepfake “brand ambassadors” distort claims | 3.676 | 3.786 | 3.663 | 3.772 | 3.745 | 3.720 | 3.681 |
| Viral deepfake accuses celebrity | 3.896 | 4.084 A F | 4.013 F | 4.114 A E F G | 3.966 | 3.863 | 3.972 |
| Emotion-triggering deepfake manipulates behaviour | 4.145 E F | 4.145 E F | 4.087 F | 4.063 | 4.016 | 3.940 | 4.040 |
| Technology outpaces detection (“arms race”) | 3.978 | 4.103 C E F | 3.951 | 4.056 E F | 3.852 | 3.854 | 4.013 E F |
| Lack of regulation enables deception | 4.023 | 4.234 A F | 4.151 A F | 4.222 A F | 4.228 A F | 4.013 | 4.188 A F |

Note: Results are based on two-sided tests assuming equal variances. For each significant pair, the key of the smaller category appears in the category with the larger mean. Significance level for upper-case letters (A, B, C): .05; tests are adjusted for all pairwise comparisons within a row using the Bonferroni correction.
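The letter notation in the table can be reproduced mechanically from a list of significant pairs, following the rule stated in the table note. The sketch below is illustrative only; the function name is hypothetical, and the example uses the means and significant pairs reported above for the ‘Viral deepfake accuses celebrity’ scenario.

```python
def letter_labels(means, significant_pairs):
    """Build table cells: for each significant pair, the key of the smaller
    mean is appended to the cell of the larger mean."""
    letters = {key: [] for key in means}
    for a, b in significant_pairs:
        larger, smaller = (a, b) if means[a] > means[b] else (b, a)
        letters[larger].append(smaller)
    cells = {}
    for key, mean in means.items():
        suffix = " ".join(sorted(letters[key]))
        cells[key] = f"{mean:.3f} {suffix}".rstrip()   # no trailing space if no letters
    return cells


if __name__ == "__main__":
    # 'Viral deepfake accuses celebrity' row of Table 1
    means = {"A": 3.896, "B": 4.084, "C": 4.013, "D": 4.114,
             "E": 3.966, "F": 3.863, "G": 3.972}
    pairs = [("B", "A"), ("B", "F"), ("C", "F"), ("D", "A"),
             ("D", "E"), ("D", "F"), ("D", "G")]
    for key, cell in letter_labels(means, pairs).items():
        print(key, cell)
```

Reading the notation this way makes clear that a cell without letters (e.g. Czechia in this row) is not necessarily low; it simply does not significantly exceed any other country.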

For the scenario in which a deepfake video shows a world leader announcing an unverified military action, Czech respondents (M = 4.188) perceived significantly more risk than those in Germany (4.044), France (3.945), Italy (3.946), and Sweden (3.798). Citizens in the UK (4.161) also judged this threat significantly more severe than participants in France, Italy, and Sweden, while Sweden registered the lowest concern overall. When deepfakes were presented as courtroom evidence, Czechia (3.958) and the UK (4.014) rated the danger significantly higher than Germany (3.618), France (3.569), Italy (3.718), Sweden (3.550), and the Netherlands (3.653). For campaign ads that falsely portray a candidate, the UK (4.183) showed the greatest alarm, scoring the risk significantly above France (4.051), Italy (3.995), Sweden (3.909), and the Netherlands (4.029). Germany (4.089), Czechia (4.056), and France (4.051) also rated this scenario significantly higher than Sweden. For the ‘digital resurrection’ of a deceased public figure, Czechia (3.710) and the UK (3.692) expressed significantly more worry than Germany (3.427), France (3.455), Italy (3.478), Sweden (3.483), and the Netherlands (3.383), with the Dutch sample showing the lowest average risk. For AI-generated ‘news anchors’ used to shape public opinion, Czechia (3.910) and the UK (3.905) rated the threat significantly higher than Sweden (3.599) and the Netherlands (3.737); France (3.893) displayed the same pattern relative to these two countries.

No significant cross-national differences emerged for deepfake brand ambassadors in marketing; means clustered tightly between 3.663 (Germany) and 3.786 (UK). For viral deepfakes accusing celebrities of wrongdoing, France (4.114) reported significantly greater concern than Czechia (3.896), Italy (3.966), Sweden (3.863), and the Netherlands (3.972). The UK (4.084) was likewise higher than Czechia and Sweden. Regarding emotion-evoking deepfakes designed to manipulate behaviour, Czechia (4.145) and the UK (4.145) considered the risk significantly greater than Italy (4.016) and Sweden (3.940); the German (4.087), French (4.063), and Dutch (4.040) scores did not differ significantly from this leading pair. On the technological ‘arms race’, in which deepfake methods outpace detection, the UK (4.103) voiced significantly higher anxiety than Germany (3.951), Italy (3.852), and Sweden (3.854), while France (4.056) and the Netherlands (4.013) both exceeded Italy and Sweden; neither differed significantly from the UK. Finally, for the absence of regulation, participants in the UK (4.234), Italy (4.228), France (4.222), the Netherlands (4.188), and Germany (4.151) rated the risk significantly above those in Czechia (4.023) and Sweden (4.013). This pattern suggests that western and southern European publics are particularly concerned about the regulatory vacuum surrounding deepfake technologies.

Overall, Czechia and especially the UK register consistently high levels of concern across most scenarios, whereas Sweden records the lowest mean in seven of the ten scenarios. Many of these cross-national gaps remain significant after the Bonferroni adjustment, although no country differences emerged for deepfake brand ambassadors.

We applied the same cross-national procedure to the benefit scenarios. Table 2 reports mean scores on a five-point scale (1 = Very low benefit, 2 = Low benefit, 3 = Moderate benefit, 4 = High benefit, and 5 = Very high benefit) and presents two-sided Bonferroni-adjusted tests that pinpoint where evaluations differ significantly.

Table 2. Cross-National Comparison of Deepfake Benefits Perception.

| Benefits of deepfakes | Czechia (A) | United Kingdom (B) | Germany (C) | France (D) | Italy (E) | Sweden (F) | Netherlands (G) |
|---|---|---|---|---|---|---|---|
| Emergency preparedness exercises | 3.329 | 3.526 A F | 3.539 A F | 3.414 | 3.432 | 3.336 | 3.551 A F |
| Witness protection through identity masking | 3.471 | 3.479 | 3.405 | 3.366 | 3.374 | 3.386 | 3.427 |
| Interactive political-education tools | 2.932 | 3.057 D | 3.010 | 2.903 | 2.965 | 2.927 | 3.058 D |
| “Resurrection” exhibits of historical figures | 3.180 | 3.237 F | 3.111 | 3.163 | 3.184 | 3.053 | 3.117 |
| Awareness campaigns on urgent social issues | 2.912 | 3.186 A F | 3.065 A F | 3.139 A F | 3.144 A F | 2.899 | 3.046 |
| Virtual brand ambassadors for small firms | 2.808 | 2.808 | 2.883 F | 2.826 | 2.969 A B F G | 2.679 | 2.741 |
| Interactive media hosts/performers | 2.806 | 2.851 | 2.900 | 2.828 | 3.035 A B D F G | 2.826 | 2.863 |
| Therapeutic role-play or exposure therapy | 3.078 | 3.124 | 3.143 F | 3.050 | 3.181 F | 2.978 | 3.264 A D F |
| Open-source platforms with ethical safeguards | 3.085 F | 3.077 | 3.087 F | 2.961 | 3.118 D F | 2.939 | 3.120 D F |
| Clear labelling of deepfake content | 3.243 | 3.521 A D E F G | 3.567 A D E F G | 3.280 | 3.310 | 3.270 | 3.344 |

Note: Results are based on two-sided tests assuming equal variances. For each significant pair, the key of the smaller category appears in the category with the larger mean. Significance level for upper-case letters (A, B, C): .05; tests are adjusted for all pairwise comparisons within a row using the Bonferroni correction.

For emergency-preparedness training, respondents in the Netherlands (M = 3.551), Germany (3.539), and the UK (3.526) judged the benefit significantly higher than their counterparts in Czechia (3.329) and Sweden (3.336). In the witness-protection scenario, average scores varied only marginally, and no pairwise differences reached significance. When deepfakes served as interactive political-education tools, the UK (3.057) and the Netherlands (3.058) rated the benefit significantly above France (2.903); Germany’s mean (3.010) was numerically higher than the French figure but not significantly so. For ‘digital resurrection’ exhibits of historical figures, UK respondents (M = 3.237) rated the benefit significantly higher than those in Sweden (3.053), while perceptions in Czechia (3.180), Germany (3.111), France (3.163), Italy (3.184), and the Netherlands (3.117) did not differ significantly from either the UK or Sweden. For awareness campaigns on urgent social issues, the UK (3.186), Germany (3.065), France (3.139), and Italy (3.144) rated the benefit significantly higher than Czechia (2.912) and Sweden (2.899). The Netherlands (3.046) was directionally higher than these two countries, but the difference fell short of significance.

When respondents assessed virtual brand ambassadors for small firms, the benefit score in Italy (2.969) significantly exceeded those in Czechia (2.808), the UK (2.808), Sweden (2.679), and the Netherlands (2.741); Germany (2.883) also rated the scenario significantly higher than Sweden, whereas France (2.826) did not. For interactive-media hosts, Italy again stood out: its mean of 3.035 significantly surpassed those of Czechia (2.806), the UK (2.851), France (2.828), Sweden (2.826), and the Netherlands (2.863). In the therapeutic setting, the Netherlands (3.264) scored significantly higher than Czechia (3.078), France (3.050), and Sweden (2.978). Italy (3.181), which did not differ significantly from the Dutch mean, and Germany (3.143) also exceeded Sweden. When evaluating open-source deepfake platforms with ethical safeguards, Italy (3.118) and the Netherlands (3.120) rated the benefit significantly above France (2.961) and Sweden (2.939), and Czechia (3.085) and Germany (3.087) were likewise higher than Sweden. Clear labelling of deepfake content produced the sharpest contrast: Germany (3.567, the highest benefit mean in the table) and the UK (3.521) rated this measure significantly above every other country in the comparison.

The benefit results extend the picture drawn from the risk analysis and point to a broad, though imperfect, west/south versus central/north divide in public sentiment toward deepfake technologies. Respondents in the Netherlands, Germany, the UK, and Italy assigned comparatively high value to practical or pro-social applications: simulated crisis drills, public-interest campaigns, and therapeutic role-play. This positive outlook is strongest in the Netherlands, which records the highest mean on four of the ten benefit items, and is shared by the German and British publics, who also rate clear labelling of deepfake content significantly higher than any other country. Italians, meanwhile, show comparatively greater enthusiasm for the commercial and entertainment potential of deepfakes, from virtual brand ambassadors to interactive-media hosts. By contrast, Czech and Swedish respondents generally give the lowest benefit ratings, particularly for socially or culturally expressive scenarios.

When we place benefits alongside risks, a two-track picture appears: the UK and Czechia rate deepfake dangers highest and Sweden lowest, with Germany, Italy, France, and the Netherlands in between; benefit optimism, in turn, is strongest in the Netherlands, Germany, the UK, and Italy, whereas Czech and Swedish publics remain distinctly sceptical. These cross-currents may reflect national differences in digital literacy, the stringency of media regulation, and broader cultural attitudes toward emerging technologies. Where media-education programmes are well developed and regulatory debates are advanced, as in the UK, Germany, and the Netherlands, citizens may feel confident enough to endorse constructive uses while still flagging potential harms. In countries where deepfakes receive less institutional attention or public discussion, such as Czechia and Sweden, the technology elicits less optimism, possibly because its advantages are less salient or because trust in institutional safeguards is weaker.

The covariance analysis of deepfake technology perceptions

This section focuses on the covariance analysis of individual questionnaire items. This analysis helps us understand how the statements about the risks and benefits of deepfakes are interrelated, specifically, whether and how the evaluation of one statement varies with the evaluation of others. Unlike the correlation coefficient, which is standardised to the range from −1 to 1 and thus expresses the strength and direction of a linear relationship, covariance is an unstandardised measure of shared variability: its sign indicates the direction of the relationship, but its magnitude also depends on the dispersion of the individual variables.
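The distinction between covariance and correlation can be made concrete with a short sketch. The Pearson correlation is the covariance divided by the product of the two standard deviations, so rescaling one item (for example, recording the same answers on a doubled scale) doubles the covariance but leaves the correlation unchanged. The helper functions and the response vectors below are hypothetical illustrations, not the study's data.

```python
import math


def covariance(x, y):
    """Sample covariance with an n - 1 denominator."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)


def correlation(x, y):
    """Pearson correlation: covariance standardised by both standard deviations."""
    return covariance(x, y) / math.sqrt(covariance(x, x) * covariance(y, y))


if __name__ == "__main__":
    # Hypothetical 1-5 ratings from seven respondents on one risk and one benefit item.
    risk = [5, 4, 4, 3, 5, 2, 4]
    benefit = [1, 2, 3, 3, 2, 4, 2]
    rescaled_risk = [2 * v for v in risk]   # same answers on a doubled scale

    # The second covariance is twice the first.
    print(covariance(risk, benefit), covariance(rescaled_risk, benefit))
    # The two correlations are equal (up to floating-point rounding).
    print(correlation(risk, benefit), correlation(rescaled_risk, benefit))
```

Because all questionnaire items here share the same five-point scale, differences in covariance between item pairs partly reflect differences in how widely respondents spread their answers on each item, which is exactly the information a correlation would discard.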

Covariance analysis allows us to uncover thematic connections within groups of statements about deepfake technologies' risks and benefits, as well as cross-links between them.

Table 3 presents the covariances between individual questionnaire items. This analysis offers deeper insight into the structural dependencies within the dataset, enabling a more nuanced interpretation of how perceptions of deepfake risks and benefits relate to one another.
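A covariance matrix of this kind can be computed directly from a respondents-by-items response matrix. The sketch below assumes complete responses (no missing values) and uses hypothetical item labels and ratings; the diagonal holds each item's variance, and dividing by the outer product of the standard deviations recovers the correlation matrix for comparison.

```python
import numpy as np

# Hypothetical item labels and 1-5 ratings (rows = respondents, columns = items).
items = ["risk_court_evidence", "risk_campaign_ad", "benefit_crisis_drills", "benefit_therapy"]
responses = np.array([
    [5, 4, 2, 3],
    [4, 4, 3, 3],
    [3, 3, 4, 4],
    [5, 5, 1, 2],
    [2, 3, 4, 5],
    [4, 5, 3, 3],
])

cov = np.cov(responses, rowvar=False)      # items x items sample covariance (ddof=1)
sd = np.sqrt(np.diag(cov))                 # diagonal entries are item variances
corr = cov / np.outer(sd, sd)              # standardised counterpart

# Print each item pair once (upper triangle), covariance next to correlation.
for i, row_label in enumerate(items):
    for j in range(i + 1, len(items)):
        print(f"{row_label} ~ {items[j]}: cov={cov[i, j]:.3f}, r={corr[i, j]:.3f}")
```

Within-theme pairs (risk with risk, benefit with benefit) and cross-theme pairs (risk with benefit) can then be read off separate blocks of the same symmetric matrix.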

Table 3. Covariance matrix.