Generative artificial intelligence (GenAI) is rapidly reshaping decision-making across multiple domains, including health, law, business, education, and tourism. This study synthesizes the fragmented research on GenAI to provide a comprehensive framework for understanding its role in enhancing decision-making accuracy, efficiency, and personalization. Employing a systematic literature review and thematic analysis, this study categorizes diverse applications, from clinical diagnostics and legal reasoning to financial advisement and educational support, highlighting both innovative practices and persistent challenges. The analysis of 101 articles reveals that, while GenAI significantly improves data processing and decision support, mitigating issues such as inherent bias, misinformation, and transparency deficits requires careful attention. The integration of multi-agent frameworks and human oversight is critical for ensuring ethical and reliable outcomes. Ultimately, this synthesis highlights the transformative potential of GenAI as a decision-making tool by presenting a cross-disciplinary framework that reveals its impact and uncovers gaps across various domains. The study also advocates the development of robust regulatory and technological strategies to harness the benefits and address the limitations of GenAI.

Generative artificial intelligence (GenAI) has emerged as a groundbreaking innovation with the potential to reshape decision-making across multiple domains. Advances in machine learning and natural language processing have enabled models such as ChatGPT to assist in decision-making processes ranging from clinical diagnostics and legal adjudication to financial advisement and educational planning (Abdelwahed, 2024; Cardoso et al., 2024). The rapid integration of GenAI into these fields highlights its significance both as a practical tool for enhancing efficiency and as an influential force driving transformative change. According to McKinsey, 75% of professionals expect GenAI to cause significant or disruptive change in their industry within the next three years, underscoring its growing role in strategic decision-making and business innovation (Marr, 2024). Similarly, the Deloitte Center for Integrated Research reports that 79% of business leaders anticipate that GenAI will catalyze substantial industry change within the next three years, as it enhances data-driven decision-making through deeper insights and the automation of complex tasks (Deloitte, 2024). Given industries’ increasing reliance on data-driven insights, GenAI’s ability to process vast amounts of information and generate coherent, contextually relevant outputs promises improved decision accuracy and operational efficiency. However, recent studies suggest that, while GenAI models such as ChatGPT and Gemini provide powerful generative capabilities, they may still lack the reliability required for high-stakes decision-making, particularly in academic and research contexts (Garg et al., 2024). Adoption also varies across users: Moravec et al. (2024) find that higher digital literacy correlates with a greater propensity to use ChatGPT, especially for low-risk decisions such as those related to exploration and enjoyment.
Meanwhile, the rapid adoption of GenAI has encouraged a re-examination of existing legal, ethical, and regulatory frameworks, as its ability to generate original content challenges the traditional notions of authorship, ownership, and accountability in decision-making processes (Al-Busaidi et al., 2024).

Despite the potential benefits and expanding research, the existing literature on GenAI in decision-making is highly fragmented. In recent years, numerous studies from diverse research areas have examined different aspects of GenAI applications in decision-making. Some studies have focused on the use of GenAI in medical diagnostics and clinical decision-making (Brügge et al., 2024; Miao et al., 2024), while others have explored its role in legal reasoning and ethical frameworks in judicial processes (Cardoso et al., 2024; Perona & de la Rosa, 2024). Still others have addressed the impact of GenAI on business and financial decision-making as well as its potential in educational and collaborative environments (Bukar et al., 2024a; Jiang et al., 2024; Yadav et al., 2024). This disciplinary dispersion not only makes it difficult for researchers and practitioners to gain a comprehensive understanding of the field but also raises the risk of duplicated effort and missed opportunities for cross-disciplinary innovation. Synthesizing existing research is therefore necessary to advance the literature and to guide future studies toward a consolidated knowledge base.

This study primarily aims to integrate and synthesize the diverse body of research on the role of GenAI in decision-making. Following the approach of recent review articles on GenAI-related issues (Dwivedi, 2025; Dwivedi et al., 2024), this study reviews and categorizes studies from various domains to provide a comprehensive analysis that elucidates both the potential and the limitations of GenAI-driven approaches to decision-making. Specifically, the study aims to clarify the current state of the field, identify knowledge gaps, and propose directions for future research. This synthesis is intended to serve as a resource for scholars and practitioners developing informed and effective decision-making systems that leverage the strengths of GenAI.

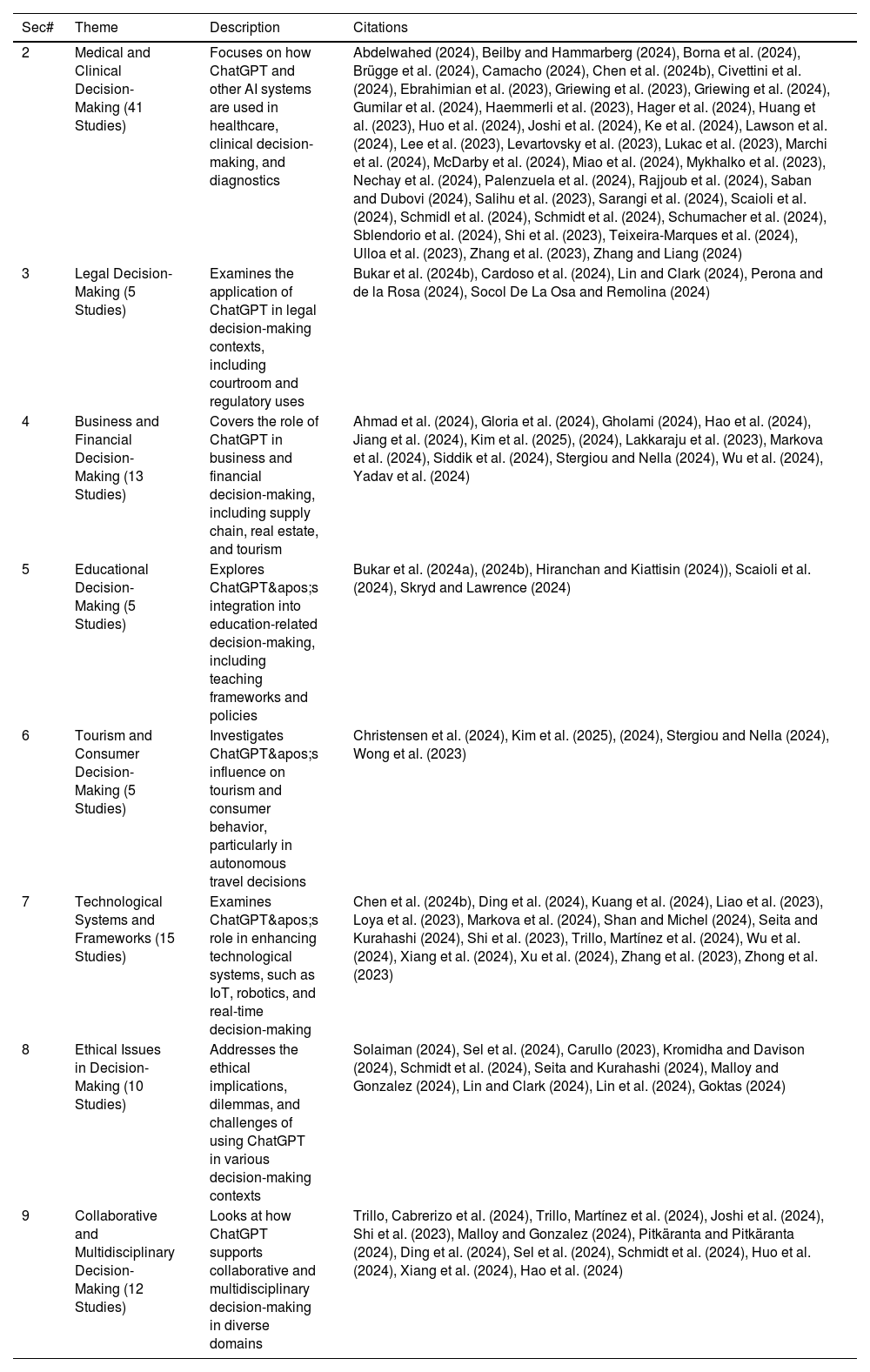

Table 1 provides a structured overview of the literature on GenAI research in decision-making. The studies included in Table 1 were identified through a systematic search approach (Dwivedi, 2025; Dwivedi et al., 2024), using carefully chosen keywords ("Generative AI," "GenAI," "Generative Artificial Intelligence," as well as "Decision Making" and "Decision-Making") in the Scopus database. The initial search results were then manually screened to ensure that only relevant studies were included. These studies were subsequently analyzed and categorized into distinct groups, each representing a thematic focus in decision-making: medical and clinical, legal, business and financial, educational, collaborative and multidisciplinary, tourism and consumer, technological systems and frameworks, and ethical issues in decision-making. Table 1 serves as a concise yet comprehensive map of the current research landscape, offering a clear snapshot of the various domains in which GenAI affects decision-making. In this paper, the terms GenAI and ChatGPT are used interchangeably to refer to GenAI models and their applications across various contexts, although ChatGPT is, strictly speaking, one GenAI application among several.
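The keyword search described above can be written as a single Boolean query. The Python sketch below builds one plausible version, assuming the keywords were combined with OR within each concept group and with AND between the two groups, and assuming the Scopus `TITLE-ABS-KEY` field code. The paper does not report its exact query string or search fields, so the helper names and the field choice are hypothetical.

```python
# Keyword groups as listed in the text; combining them this way is an assumption.
GENAI_TERMS = ["Generative AI", "GenAI", "Generative Artificial Intelligence"]
DECISION_TERMS = ["Decision Making", "Decision-Making"]


def or_group(terms):
    """Join phrase-quoted terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_scopus_query(field="TITLE-ABS-KEY"):
    """Combine the two concept groups with AND inside a (hypothetical) field code."""
    return f"{field}({or_group(GENAI_TERMS)} AND {or_group(DECISION_TERMS)})"


print(build_scopus_query())
```

The query only reproduces the retrieval step; the manual relevance screening described above would still be applied to the returned records.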

Table 1. Generative AI research on decision-making: themes, descriptions, and citations.

Table 1 indicates that the literature on GenAI in decision-making is fragmented and lacks both a consolidated framework and an adequate understanding of GenAI’s impact across many domains, particularly with respect to ethical challenges and sector-specific applications. This study seeks to fill this gap and contribute to a better understanding of the role of GenAI in decision-making. Theoretically, it synthesizes scattered research from various fields, including medicine, law, business, and education, into a conceptually structured framework that helps researchers connect fragmented insights and identify key areas for improvement. By highlighting existing patterns and gaps between disciplines, the findings can expand theoretical knowledge and support a more holistic understanding of GenAI’s impact on decision-making. Practically, this study provides valuable insights for policymakers, professionals, and industry leaders. It identifies concrete pathways for responsible AI integration, from improving AI-driven clinical diagnostics and ensuring fairness in legal rulings to enhancing financial recommendations. These pathways support the development of systems that augment human judgment while fostering transparency and trust.

The remainder of this paper is organized as follows. Section 2 discusses the use of GenAI in diagnosis, treatment planning, and medical education, as well as its drawbacks, such as misinformation and biases. Section 3 examines the legal consequences of incorporating GenAI into court cases, including its potential to improve efficiency and the ethical and transparency concerns it raises. Sections 4 and 5 discuss GenAI's role in business and financial decision-making and in educational decision-making, respectively. Section 6 describes GenAI's impact on tourism, while Sections 7 and 8 discuss technological systems and AI-driven ethical issues in decision-making. Section 9 examines decision-making in collaborative and multidisciplinary environments. Section 10 provides a detailed discussion of insights from the investigated domains. Section 11 outlines domain-specific propositions and sets directions for future research on the impact of GenAI on decision-making processes. Section 12 concludes by summarizing the overall impact of GenAI on decision-making, including its benefits, challenges, and future outlook.

Medical and clinical decision-making

We found 41 recent studies on the role of GenAI in medical and clinical decision-making that explore its applications, limitations, and ethical implications across various specialties. A common finding is that ChatGPT performs well in structured decision-support tasks, particularly summarizing medical guidelines (Miao et al., 2024), performing on licensing and residency examinations (Ebrahimian et al., 2023; Scaioli et al., 2024), and answering standardized clinical questions (Lee et al., 2023). Studies in reproductive medicine (Chen et al., 2024a) and colorectal cancer screening (Camacho, 2024) have highlighted ChatGPT's ability to improve access to medical information, helping both clinicians and patients understand treatment options. However, in areas requiring nuanced clinical reasoning, such as hematopoietic stem cell transplantation (Civettini et al., 2024) and breast cancer tumor board decisions (Griewing et al., 2023, 2024; Ulloa et al., 2023), ChatGPT has shown inconsistencies and lacked the depth required for patient-specific recommendations. These findings suggest that, while ChatGPT can serve as a valuable tool for summarizing knowledge and offering initial guidance, it remains inadequate for autonomous medical decision-making.

Multiple studies have identified the tendency of ChatGPT to generate misinformation, including fabricated references and misleading treatment recommendations, as a major limitation. Studies on hernia-related clinical decision-making (Nechay et al., 2024) and lumbar spinal stenosis treatment (Rajjoub et al., 2024; Zhang & Liang, 2024) have found that ChatGPT occasionally provided plausible yet inaccurate information, potentially misleading clinicians if not properly verified. Similar concerns were noted in uveitis-related decisions (Schumacher et al., 2024) and emergency plastic surgery management (Borna et al., 2024), where AI-generated responses sometimes conflicted with the established clinical guidelines. In addition, the issue of "hallucinations," where GenAI responses lacked coherence with current evidence-based practice, was observed in studies on surgical decision-making for gastroesophageal reflux disease (Huo et al., 2024) and gynecologic oncology (Gumilar et al., 2024). These findings reveal GenAI’s unreliability, despite its ability to provide medical insights; they also indicate the need for human oversight in the use of GenAI, particularly in high-stakes healthcare environments.

Many studies have highlighted the role of ChatGPT in medical education, particularly in training students and early-career professionals in clinical decision-making. Brügge et al. (2024) demonstrate that AI-generated structured feedback significantly improved medical students’ clinical reasoning. Similarly, studies on nursing education (Saban & Dubovi, 2024) and scoliosis treatment decision-making (Shi et al., 2023) have noted that ChatGPT helped structure clinical knowledge, making it an effective educational tool. Additionally, ChatGPT excels in standardized medical exams, often outperforming human test-takers (Lee et al., 2023; Scaioli et al., 2024). However, ChatGPT has shown limitations in scenarios requiring real-time adaptability, such as interpreting radiology images (Sarangi et al., 2024) and oncology case discussions (Lukac et al., 2023), as it lacks the ability to integrate evolving clinical data. These findings suggest that, while ChatGPT can supplement traditional medical education, it is not a substitute for experiential learning and expert mentorship.

Another challenge is ChatGPT’s susceptibility to cognitive biases in clinical decision-making. Schmidt et al. (2024) note that ChatGPT exhibited intrinsic biases, particularly when analyzing patient cases with misleading but salient features. Ke et al. (2024) explore a multi-agent framework to mitigate these biases and demonstrate that collaborative GenAI interactions improved diagnostic accuracy. In oncology, McDarby et al. (2024) and Schmidl et al. (2024) show that patient demographics influenced ChatGPT treatment recommendations, raising concerns about potential inequities in GenAI-driven medical guidance. Salihu et al. (2023) and Hager et al. (2024) identify inconsistencies in ChatGPT outputs based on prompt phrasing, further highlighting the model’s susceptibility to cognitive distortions. While AI has the potential to improve diagnostic consistency, safeguards are necessary to detect and correct biases to ensure fairness in clinical decision-making.

Several studies have emphasized the role of ChatGPT in patient-centered care and shared decision-making, particularly in increasing access to health information. Research in fertility care (Beilby & Hammarberg, 2024) and colorectal cancer screening (Camacho, 2024) has found that ChatGPT enhanced patients’ understanding of medical options, reducing information asymmetry. Similar findings emerged in neuro-oncology decision support (Lawson et al., 2024) and participatory healthcare technology design (Joshi et al., 2024), in which ChatGPT facilitated patient-clinician discussions and helped to generate patient-centered recommendations. However, concerns persist about misinformation, particularly in studies where ChatGPT’s medical advice does not align with clinical expertise, such as breast cancer management (Griewing et al., 2023; Lukac et al., 2023). Although AI can democratize medical knowledge, integration into patient care requires careful monitoring to ensure its accuracy and reliability.

Despite these limitations, researchers have highlighted the potential of ChatGPT to augment clinical workflows by reducing cognitive load and improving efficiency in certain medical tasks. Studies in hypertension management (Miao et al., 2024) and radiation oncology (Huang et al., 2023) found that ChatGPT effectively summarized guidelines and assisted in treatment planning, demonstrating its value as a decision-support tool. However, studies on acute ulcerative colitis (Levartovsky et al., 2023) and tumor board decision-making (Ulloa et al., 2023) show that it cannot function autonomously, because its recommendations often require human verification. Studies on GenAI transparency and ethical considerations (Sblendorio et al., 2024; Zhang et al., 2023) have emphasized the need for structured regulatory frameworks to ensure that AI-generated outputs align with professional standards. These findings reinforce that ChatGPT deployment must be carefully controlled to avoid over-reliance on AI-generated information in clinical workflows.

In summary, ChatGPT shows significant promise across a variety of areas, including medical decision-making, patient engagement, workflow efficiency, and clinical accuracy. It also supports medical education through structured feedback, can help mitigate cognitive biases when deployed in multi-agent frameworks, and facilitates patient-centered care. ChatGPT’s role in summarizing guidelines and assisting in treatment planning highlights its value in the healthcare sector. However, its limitations must be addressed before broader clinical adoption. Key areas requiring improvement include GenAI transparency (Zhang et al., 2023), domain-specific training (Gumilar et al., 2024; Marchi et al., 2024), and stronger GenAI-human collaboration to enhance decision accuracy (Miao et al., 2024; Ulloa et al., 2023). Some researchers advocate integrating ChatGPT as a supplementary tool in clinical workflows (Borna et al., 2024; Sblendorio et al., 2024), while others emphasize the necessity of regulatory oversight to mitigate risks related to bias, misinformation, and ethical concerns (Hager et al., 2024; Salihu et al., 2023). The consensus across these studies is that ChatGPT’s capabilities should be rigorously validated and carefully implemented to enhance, rather than replace, human expertise in medical and clinical decision-making.

Legal decision-making

In legal decision-making, five recent studies have investigated the role of GenAI, focusing on its implementation in judicial systems as well as the ethical implications and regulatory frameworks regarding its use. A key theme across multiple studies is the potential of GenAI to enhance efficiency in legal decision-making despite concerns about transparency and fairness. Cardoso et al. (2024) highlight global examples, including Pakistan’s GenAI-assisted court rulings and China’s smart courts, demonstrating the growing role of GenAI in adjudication. Similarly, Perona and de la Rosa (2024) analyze a Colombian case in which a judge explicitly referenced ChatGPT in a court ruling, sparking a debate on GenAI's influence on legal reasoning. However, studies have also emphasized the risks of algorithmic bias and a lack of regulatory clarity: for instance, Socol De La Osa and Remolina (2024) argue that unregulated GenAI use in courtrooms, particularly in jurisdictions such as Colombia, Peru, and India, poses risks to judicial independence and public trust. Therefore, GenAI integration requires structured oversight to ensure fairness and adherence to legal principles.

Another key concern is the ethical implications of using ChatGPT in decision-making frameworks, particularly in balancing GenAI’s benefits against risks such as misinformation and bias. Bukar et al. (2024b) propose a structured decision-making model using the Analytic Hierarchy Process (AHP) to evaluate ethical concerns, identifying privacy, misinformation, and academic integrity as major issues. Their findings align with those of Socol De La Osa and Remolina (2024), who advocate ex-ante and ex-post safeguards, including algorithmic fairness assessments and iterative audits, to mitigate GenAI-induced biases. Similarly, Cardoso et al. (2024) emphasize the black box problem in GenAI decision-making, in which the lack of transparency in GenAI-generated legal reasoning undermines accountability. The ethical use of ChatGPT in legal decision-making therefore necessitates proactive regulatory mechanisms, such as transparency mandates and human oversight, to prevent unintended legal and societal consequences.
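To show how an AHP-based evaluation turns expert judgments into priorities, the Python sketch below derives criterion weights from a pairwise comparison matrix and checks the consistency ratio. It is an illustrative sketch, not the implementation used by Bukar et al.: it uses the row geometric-mean approximation of Saaty's eigenvector method together with Saaty's random index values. The three criteria (privacy, misinformation, academic integrity) come from the text, but the pairwise judgments are hypothetical values chosen only for illustration.

```python
import math

# Saaty's random consistency index (RI) for matrices of order n (defined here up to n = 9).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix):
    """Approximate AHP priority weights with the normalized row geometric mean."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights):
    """Return CR = CI / RI, where CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    if n <= 2:
        # Reciprocal matrices of order 1 or 2 are always perfectly consistent.
        return 0.0
    weighted = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(weighted[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical judgments on Saaty's 1-9 scale: entry [i][j] says how much more
# important criterion i is than criterion j (the matrix is reciprocal).
criteria = ["privacy", "misinformation", "academic integrity"]
judgments = [
    [1.0, 1 / 2, 2.0],
    [2.0, 1.0, 3.0],
    [1 / 2, 1 / 3, 1.0],
]

weights = ahp_weights(judgments)
cr = consistency_ratio(judgments, weights)
for name, weight in zip(criteria, weights):
    print(f"{name}: {weight:.3f}")
print(f"consistency ratio: {cr:.3f}")
```

By convention, a consistency ratio below 0.1 is taken as acceptable; a higher value would normally prompt the evaluators to revisit their pairwise comparisons before using the weights.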

While most studies focus on GenAI’s impact on judicial decision-making, Lin and Clark (2024) extend the discussion to GenAI’s role in ethical reasoning and public decision-making beyond the courtroom. Their research on how first-year public-speaking students navigate ethical dilemmas when using ChatGPT highlights broader concerns regarding moral intensity, ethical recognition, and behavioral intentions in AI use. These findings relate to the concerns raised by Perona and de la Rosa (2024) regarding the ethical risks of over-reliance on ChatGPT in judicial settings. The overlap between legal and educational contexts suggests that GenAI’s ethical and regulatory challenges are not confined to courtrooms but extend to broader decision-making frameworks that shape public discourse and institutional governance. Overall, comprehensive GenAI policies are needed that not only address the ethical concerns and regulatory gaps identified across these studies but also ensure the responsible integration of AI tools into decision-making frameworks. Such policies include developing transparent, accountable, and fair systems that protect individual rights while promoting the efficient and accurate use of AI in complex contexts such as legal decision-making. Additionally, continuous oversight and reassessment are necessary as AI technologies evolve, to ensure that these technologies remain aligned with changing societal and legal expectations.

Business and financial decision-making

Recently, 13 studies on the role of GenAI in business and financial decision-making have illustrated its applications across industries, from investment analysis and supply chain resilience to sustainability and financial advisement. A common theme across multiple studies is the ability of GenAI-driven decision systems to enhance efficiency and optimize business operations. Jiang et al. (2024) and Yadav et al. (2024) demonstrate how GenAI-powered financial and knowledge systems improved data retrieval, filtering, and classification, aiding users in decision-making through sentiment analysis and structured financial insights. Similarly, Gloria et al. (2024) show that domain-specific GenAI models, such as Real-GPT, can enhance real estate investment decisions by outperforming general-purpose models in market analysis. However, while AI enhances data processing and operational decision-making, Lakkaraju et al. (2023) raise concerns about fairness and bias in GenAI-driven financial advisement, arguing that inconsistencies in recommendations across user groups pose ethical and regulatory risks. Although GenAI improves efficiency and accuracy in financial decision-making, mitigating biases and ensuring transparency continue to present critical challenges.
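One simple way to make cross-group inconsistencies in recommendations measurable is to compare how often an assistant recommends the same product to different user groups, a demographic-parity-style check. The Python sketch below is illustrative only and is not the method used by Lakkaraju et al. (2023); the group labels, product names, and audit log are hypothetical.

```python
from collections import defaultdict


def recommendation_rates(records, outcome):
    """Share of each user group's recommendations that equal `outcome`."""
    counts = defaultdict(lambda: [0, 0])  # group -> [matching, total]
    for group, recommendation in records:
        counts[group][1] += 1
        if recommendation == outcome:
            counts[group][0] += 1
    return {group: matching / total for group, (matching, total) in counts.items()}


def parity_gap(rates):
    """Largest difference in recommendation rates between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: (user group, product the assistant recommended).
audit_log = [
    ("group_a", "index_fund"),
    ("group_a", "high_fee_fund"),
    ("group_a", "index_fund"),
    ("group_a", "index_fund"),
    ("group_b", "high_fee_fund"),
    ("group_b", "high_fee_fund"),
    ("group_b", "index_fund"),
    ("group_b", "high_fee_fund"),
]

rates = recommendation_rates(audit_log, "index_fund")
print(rates)
print(f"parity gap: {parity_gap(rates):.2f}")
```

A large gap does not by itself establish unfairness, because groups may differ in legitimate financial circumstances, but it flags recommendation patterns that warrant human review.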

Another major consideration is the impact of GenAI on sustainability and industrial decision-making. Wu et al. (2024) and Gholami (2024) explore GenAI-driven frameworks designed to optimize carbon emission management and sustainable manufacturing, demonstrating how GenAI models improve efficiency by analyzing event logs and historical emissions data. Markova et al. (2024) extend this work by proposing on-premise GenAI frameworks for real-time industrial automation, highlighting the benefits of local GenAI deployment for predictive maintenance and process optimization. These studies suggest that GenAI contributes significantly to sustainability goals by enhancing operational adaptability and reducing resource consumption. However, challenges such as data security concerns (Markova et al., 2024) and computational costs (Wu et al., 2024) underscore the need for scalable, cost-effective GenAI solutions that align with industry-specific constraints. Collectively, these studies indicate that GenAI has the potential to facilitate sustainable business practices; however, successful implementation requires balancing efficiency gains with cost, security, and compliance considerations.

The role of GenAI in business resilience and risk management is another prominent research area. Ahmad et al. (2024) propose a GenAI-enhanced decision-making framework for supply chain resilience, arguing that GenAI’s ability to anticipate risks and optimize mitigation strategies strengthens SMEs' responses to disruptions. Similarly, Hao et al. (2024) investigate the intersection of human intelligence and GenAI in collaborative business decision-making and find that GenAI reduces cognitive biases and enhances adaptability. By contrast, Kim et al. (2024, 2025) focus on consumer-facing applications, examining how misinformation and AI errors affect trust and decision-making in the financial and tourism sectors. Taken together, these findings suggest that AI aids business continuity and risk mitigation, improving resilience in dynamic business environments, but that it can also introduce new vulnerabilities, such as algorithmic biases or misinformation, which may erode user confidence. Therefore, human oversight and structured governance are necessary to maintain trust and mitigate unintended risks.

A final consideration is the influence of GenAI on funding success and market dynamics. Siddik et al. (2024) highlight that investor influence, rather than technological factors, significantly determines funding outcomes for GenAI startups, suggesting that venture capital networks play a more critical role in financial success than GenAI capabilities alone. By contrast, Jiang et al. (2024) and Wu et al. (2024) emphasize the growing importance of GenAI in financial forecasting and sustainability decision-making, indicating that GenAI-driven insights shape investment decisions and market trends. Although GenAI is a valuable tool for financial analysis that enhances market insights and operational decision-making, this divergence in findings suggests that investor confidence and external economic factors remain dominant in determining financial success, and that broader financial ecosystems, regulatory considerations, and human judgment continue to shape business outcomes.

Educational decision-making

We reviewed five recent studies that examined the use of GenAI in educational decision-making, focusing on policy formation, instructional assistance, and student learning, alongside ethical and practical challenges. A recurring theme across multiple studies is the need for structured frameworks to guide GenAI integration in education. Bukar et al. (2024) propose a Risk, Reward, and Resilience (RRR) model to balance GenAI’s benefits, such as personalized learning, against risks such as misinformation and academic dishonesty. Furthermore, Bukar et al. (2024b) use the Analytic Hierarchy Process (AHP) to prioritize ethical concerns, emphasizing that policies must balance restrictions with regulations to maintain academic integrity. These findings align with those of Hiranchan and Kiattisin (2024), who advocate structured prompt frameworks to improve the accuracy of GenAI-driven decision-making, particularly in instructional settings. Collectively, these studies indicate that, while GenAI can improve efficiency and personalization in education, its integration must be guided by policies that address ethical concerns and ensure equitable access.

Research on GenAI in medical education highlights both its benefits and its drawbacks in clinical training. Skryd and Lawrence (2024) examine ChatGPT’s use in ward-based teaching and find that it effectively supports knowledge reinforcement and team discussions but struggles in high-stakes emergency scenarios requiring nuanced reasoning. Similarly, Scaioli et al. (2024) evaluate ChatGPT’s performance on medical residency exams in Italy, demonstrating its strong analytical capabilities while cautioning against over-reliance owing to its inability to incorporate recent medical advances. Collectively, these studies show that AI can function as an effective supplementary educational tool, particularly for reinforcing theoretical knowledge. However, they also highlight AI’s limitations in complex decision-making scenarios that require clinical judgment and real-world adaptability, and they underline the need for structured guidelines and human oversight to ensure the responsible use of GenAI in medical training and assessment.

Despite the potential of GenAI in education, ethical challenges and implementation concerns recur across the studies. Bukar et al. (2024a, 2024b) emphasize developing adaptive regulations that can evolve alongside GenAI advancements to prevent misuse and academic inequities. Similarly, Skryd and Lawrence (2024) highlight misinformation risks and biases in GenAI-generated medical responses, reinforcing the need for ethical guidelines in GenAI-driven instruction. Hiranchan and Kiattisin (2024) add to this discussion by arguing that GenAI effectiveness depends on prompt structuring, which can influence bias, accuracy, and decision relevance. In summary, although GenAI can improve educational decision-making, its efficacy depends not only on model performance but also on established frameworks and ethical principles that regulate its application. The successful incorporation of GenAI in education requires regulations that harmonize innovation with responsible governance and safeguard both personalized learning and academic integrity. These studies illustrate that frameworks such as the RRR model and AHP can play an important role in resolving ethical issues and directing the proper use of GenAI in diverse educational contexts.

Tourism and consumer decision-making

ChatGPT’s ability to enhance the efficiency and personalization of travel decisions is a recurring theme in the five recent studies that analyzed how GenAI affects trip planning, trust in AI recommendations, and misinformation concerns in tourism and consumer decision-making. Wong et al. (2023) highlight how ChatGPT improves decision-making across all travel phases (pre-trip, en-route, and post-trip) by offering customized itineraries, real-time assistance, and content creation. Similarly, Stergiou and Nella (2024) emphasize ChatGPT’s capacity to tailor recommendations based on individual preferences, demonstrating how GenAI enhances travel information accessibility and diagnosticity. However, Kim et al. (2024) caution that trust in GenAI recommendations is contingent on user experience, because exposure to GenAI errors can diminish adoption intention. Although ChatGPT streamlines travel planning and enhances consumer experiences, ensuring reliability and transparency is critical in maintaining user confidence.

The issue of GenAI-generated misinformation presents a significant concern in consumer tourism decision-making. Christensen et al. (2024) and Kim et al. (2025) investigate the impact of GenAI hallucinations, in which ChatGPT produces plausible but inaccurate information, on travelers’ perceptions and behaviors. Christensen et al. (2024) find that, while consumers recognize the risk of GenAI misinformation, they often continue to prefer GenAI-generated itineraries due to perceived impartiality and personalization. Conversely, Kim et al. (2025) demonstrate that incorrect information significantly erodes trust, particularly when it is domain-specific and dominant within GenAI-generated recommendations. These findings highlight a paradox in which GenAI’s convenience and customization drive its adoption despite the impact of misinformation on consumer confidence. Both studies have underscored the need for user education and enhanced transparency measures in mitigating GenAI-induced errors in travel planning.

Despite these challenges, research suggests that GenAI’s role in tourism decision-making will continue to evolve, provided that its limitations are effectively managed. Kim et al. (2024) and Stergiou and Nella (2024) argue that the effectiveness of ChatGPT depends on balancing user expectations with system reliability. While Kim et al. (2024) report that positive information about ChatGPT increases adoption intention, Wong et al. (2023) emphasize that regulatory oversight and technological advancements are necessary to address biases, privacy concerns, and outdated knowledge bases. Therefore, ChatGPT’s success in tourism depends on continuous improvements in AI accuracy, user education, and ethical GenAI deployment to ensure that consumers make informed and confident travel decisions. For instance, transparency regulations that require AI platforms to disclose explicitly how recommendations are generated would help users understand the limits of those recommendations. In addition, GenAI systems should incorporate bias-mitigation techniques that detect and correct biases in real time, helping to ensure that recommendations are equitable and fair.

Technological systems and frameworks

Fifteen recent studies investigate the integration of GenAI into industrial automation, robotics, autonomous decision-making, and emergency response, and a central theme across them is the optimization of GenAI-driven decision systems to improve efficiency, accuracy, and adaptability in real-time applications. Ding et al. (2024) and Xiang et al. (2024) demonstrate how incorporating multi-modal data integration and reward-shaping mechanisms enables GenAI-enhanced reinforcement learning frameworks to improve decision-making in complex environments, such as the metaverse and unmanned aerial vehicle (UAV) air combat simulations. Similarly, Markova et al. (2024) and Wu et al. (2024) highlight the benefits of integrating ChatGPT-driven models into Industry 4.0 systems, particularly for predictive maintenance and carbon emissions management. These studies indicate that GenAI plays an increasingly important role in optimizing high-stakes and real-time decision-making across multiple sectors; however, challenges such as computational demands and system scalability remain areas for further refinement.

Another significant focus is GenAI’s role in knowledge extraction and domain-specific decision frameworks, particularly in improving system reliability and interpretability. Xu et al. (2024) and Zhong et al. (2023) explore how ChatGPT and other large language models (LLMs) can support engineering design and tax-related decision-making by extracting technical knowledge from diverse sources, including science fiction narratives and structured databases. Chen et al. (2024) extend this discussion by integrating knowledge graphs with LLMs to improve emergency response decision-making and to ensure that GenAI-generated recommendations adhere to regulatory guidelines. These studies suggest that pairing GenAI with structured knowledge systems enhances its effectiveness in decision-making by reducing hallucinations and improving the accuracy of GenAI-generated insights. However, Xu et al. (2024) caution that, while GenAI can efficiently retrieve factual knowledge, its subjective reasoning still lags behind that of human decision-makers, underscoring the need for hybrid GenAI-human collaboration in complex judgment tasks.

Several studies have investigated the integration of GenAI in real-time automation and robotics, where combining LLMs with physical systems is key to improving autonomous functionality. Liao et al. (2023) and Kuang et al. (2024) explore how ChatGPT-driven decision frameworks that integrate language processing with visual perception enhance robotic grasping and traffic scene comprehension, respectively. Shan and Michel (2024) apply a similar hybrid approach to gaming, combining goal-oriented action planning with ChatGPT to optimize real-time strategic decision-making. These studies suggest that GenAI’s ability to process unstructured data in real time enhances system adaptability across environments ranging from industrial robotics to interactive digital applications. However, they also highlight technical limitations, such as latency (Shan & Michel, 2024) and reduced performance in complex environments with unpredictable conditions (Kuang et al., 2024), underscoring the need for further optimization of GenAI-driven automation.

Finally, multiple studies address the role of GenAI in improving the interpretability and ethical alignment of technological decision systems. Trillo, Martínez et al. (2024) and Seita and Kurahashi (2024) explore how ChatGPT can support group decision-making by enhancing consensus building and reducing bias in decision outputs. Similarly, Zhang et al. (2023) propose a framework that integrates Markov logic networks with LLMs to improve transparency in GenAI-driven medical diagnostics, ensuring that GenAI-generated insights align with evidence-based practices. Although these studies highlight GenAI’s potential to enhance structured decision-making, they also stress the importance of safeguards against over-reliance on GenAI-generated conclusions. Overall, research on technological systems and frameworks suggests that GenAI’s decision-making capabilities are most effective when paired with structured rule-based models, domain-specific adaptations, and continuous human oversight to ensure accuracy, efficiency, and ethical integrity.

Ethical decision-making

Ten recent studies address GenAI’s effects on transparency, bias, accountability, regulatory challenges, and ethical decision-making across various domains. A recurring theme is the need for structured oversight and governance to mitigate the risks associated with GenAI-driven decisions. Solaiman (2024) and Carullo (2023) emphasize the lack of clear regulatory safeguards in mental health and public administration, where GenAI-driven decisions can have serious consequences because of biased outputs and a lack of transparency. Similarly, Lin et al. (2024) assess ChatGPT’s performance on ethical judgment tests and find that, although GenAI models such as GPT-4 can demonstrate high-level ethical reasoning, they lack the accountability required for professional decision-making. Furthermore, Goktas (2024) notes that transparency and explainability challenges in GenAI-driven decision systems have become increasingly prominent, particularly in healthcare and finance, necessitating continuous monitoring and sector-specific ethical guidelines. These studies argue that GenAI deployment should be guided by robust ethical and regulatory frameworks to prevent unforeseen consequences.

Several studies have explored the significant issue of bias in GenAI-driven decision-making. Schmidt et al. (2024) investigate bias in GenAI-assisted diagnostic decision-making and find that ChatGPT exhibits intrinsic biases similar to those of human professionals, although it remains unaffected by contextual extrinsic biases. Sel et al. (2024) propose a Skin-in-the-Game (SKIG) framework that improves GenAI moral reasoning by incorporating stakeholder perspectives and report gains in ethical decision accuracy. Meanwhile, Kromidha and Davison (2024) assess the limitations of GenAI in business decision-making, arguing that GenAI lacks the contextual judgment necessary for fully autonomous ethical choices. Taken together, these studies indicate that, although GenAI can process and structure ethical reasoning, it struggles with context sensitivity, bias mitigation, and moral accountability. Consequently, GenAI decision-making must be supplemented with human oversight and structured interventions, such as bias-detection frameworks and ethical alignment strategies, to ensure fairness and reliability.

A final area of discussion is the role of GenAI in group-based ethical decision-making and whether it should replace or supplement human judgment. Seita and Kurahashi (2024) find that ChatGPT’s decision-making closely mirrors human group discussions, raising the question of whether GenAI could serve as an alternative to resource-intensive deliberations. However, Malloy and Gonzalez (2024) argue that GenAI-driven cognitive models require improvements in generalization and predictive accuracy, particularly for high-stakes ethical dilemmas. Lin and Clark (2024) extend this discussion to academic ethics by analyzing how students perceive GenAI-generated content in plagiarism detection and public speaking contexts. Thus, although GenAI provides structured reasoning and facilitates ethical decision-making processes, its ethical limitations raise concerns about accountability in educational and professional assessments. Its application should therefore be limited to augmenting rather than replacing human judgment, so that GenAI remains a tool for structured deliberation rather than an autonomous moral authority. Moreover, the ethical limitations of GenAI are predominantly articulated from a Western perspective, whereas ethical frameworks and cultural norms vary across countries. In several non-Western settings, decision-making procedures may incorporate community-based or hierarchical frameworks, which can shape how GenAI’s role in ethical decision-making is perceived and implemented.

Collaborative and multidisciplinary decision-making

We identified 12 recent studies on GenAI in collaborative and multidisciplinary decision-making that address its potential to improve consensus building, cognitive modeling, and decision-making in complex environments. Most of these studies explore GenAI’s ability to streamline group decision-making by structuring discussions and mitigating biases. Trillo, Cabrerizo et al. (2024) and Trillo, Martínez et al. (2024) explore how ChatGPT and other LLMs assist group decision-making by analyzing sentiment in discussions, reducing the influence of hostility, and adjusting the weight assigned to each expert to foster constructive debate. Similarly, Joshi et al. (2024) demonstrate how AI-driven participatory design tools improve collaborative decision-making in healthcare technology development by enabling non-technical stakeholders to contribute meaningfully. By contrast, Schmidt et al. (2024) examine GenAI’s susceptibility to intrinsic biases in multidisciplinary group decisions, noting that ChatGPT, like human experts, remains prone to cognitive distortions. These studies suggest that careful oversight of GenAI is required to manage its biases and ensure equitable stakeholder participation.

Several studies also examine GenAI integration into collaborative environments that require real-time decision support. Xiang et al. (2024) propose a GenAI-enhanced reinforcement learning system that improves unmanned aerial vehicle (UAV) air combat decision-making by incorporating LLMs for strategic adjustments. Similarly, Ding et al. (2024) introduce a multi-dimensional optimization framework for metaverse-based decision-making, demonstrating how GenAI can facilitate real-time interactions and autonomous adaptation in dynamic digital environments. Pitkäranta and Pitkäranta (2024) extend these findings to corporate decision-making, developing the RAGADA framework to align GenAI-driven insights with organizational objectives and ethical standards. These studies emphasize that GenAI-driven decision systems can enhance decision efficiency and adaptability and improve real-time collaboration across disciplines. At the same time, they highlight challenges related to computational costs, ethical compliance, and model retraining, and note that transparency and reliability remain ongoing concerns.

The role of GenAI in enhancing cognitive modeling and teamwork is another key theme in the literature. Malloy and Gonzalez (2024) propose a generative memory framework for cognitive modeling in decision science and find that GenAI-enhanced memory representation and action prediction can improve learning efficiency. Similarly, Hao et al. (2024) investigate human-AI collaboration in organizational decision-making, revealing that GenAI reduces cognitive biases and enhances analytical reasoning but also increases the risk of over-reliance on AI-generated insights. Shi et al. (2023) explore a related concept in medical decision-making by developing Chat-Orthopedist, a retrieval-augmented GenAI model that supports shared decision-making by integrating structured medical knowledge with ChatGPT. Although GenAI enhances cognitive modeling and structured decision support, its recommendations should be balanced with human expertise to maintain decision quality and prevent automation bias.

Furthermore, ethical considerations and GenAI’s influence on stakeholder alignment refine the discussion on GenAI-driven collaborative decision-making. Sel et al. (2024) introduce the SKIG framework to improve GenAI’s moral reasoning by simulating accountability and stakeholder perspectives and demonstrate its effectiveness in addressing ethical dilemmas. Huo et al. (2024) and Trillo, Martínez et al. (2024) similarly emphasize GenAI’s role in ensuring fairness in multi-stakeholder environments, using sentiment analysis and preference weighting to enhance group coherence. However, these studies also highlight GenAI’s potential to introduce unintended biases, which calls for governance mechanisms that ensure transparency and fairness. GenAI’s success in facilitating ethical decision-making by structuring stakeholder perspectives thus depends on well-defined oversight frameworks that prioritize accountability and trust in human-AI collaboration. Sophisticated frameworks such as RAGADA and SKIG are promising but require further empirical validation to confirm their scalability and applicability to other domains. Further research should examine their practical efficacy and address any constraints on applying them to diverse decision-making contexts before they are adopted widely.

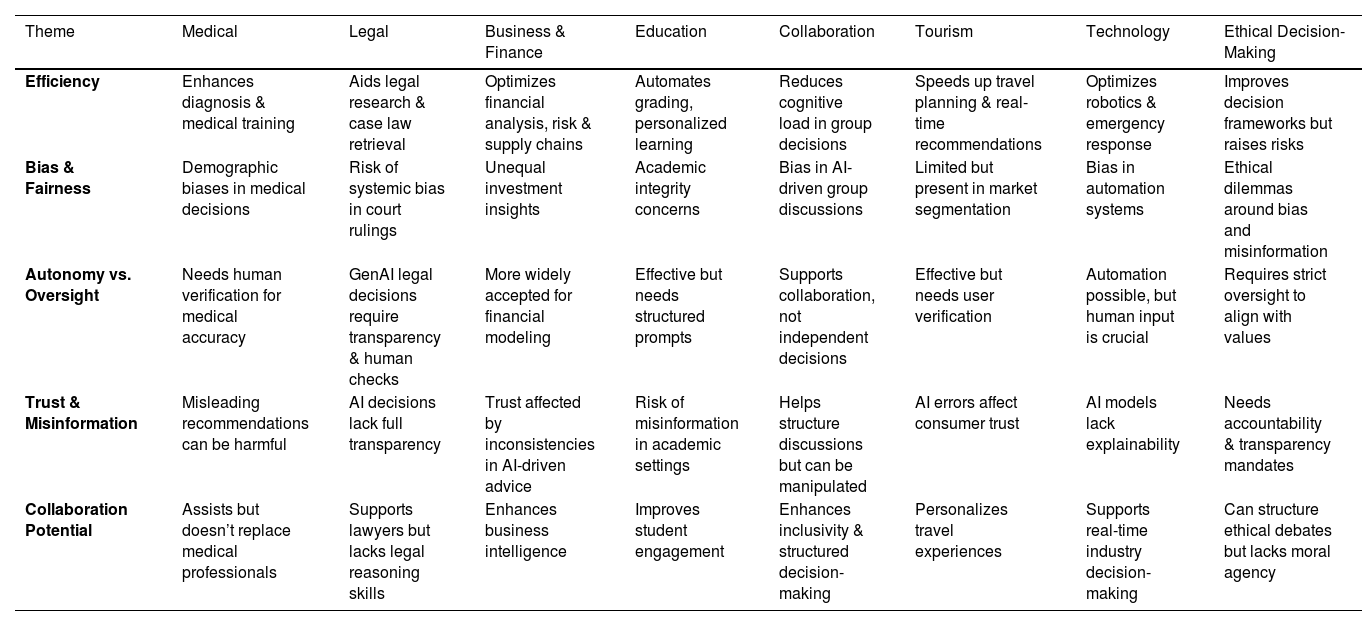

Discussion and insights

Table 2 provides a structured comparison of how GenAI impacts decision-making across the medical, legal, business, education, collaborative, tourism, technological, and ethical domains. It highlights common themes, such as efficiency, bias, trust, and oversight, while also identifying domain-specific challenges, such as high-stakes risks in healthcare and law, personalization concerns in business and education, and automation biases in technology. Understanding these intersections can inform the development of more ethical, transparent, and effective AI applications in various fields.

Comparative analysis of key themes in GenAI-driven decision-making across domains.

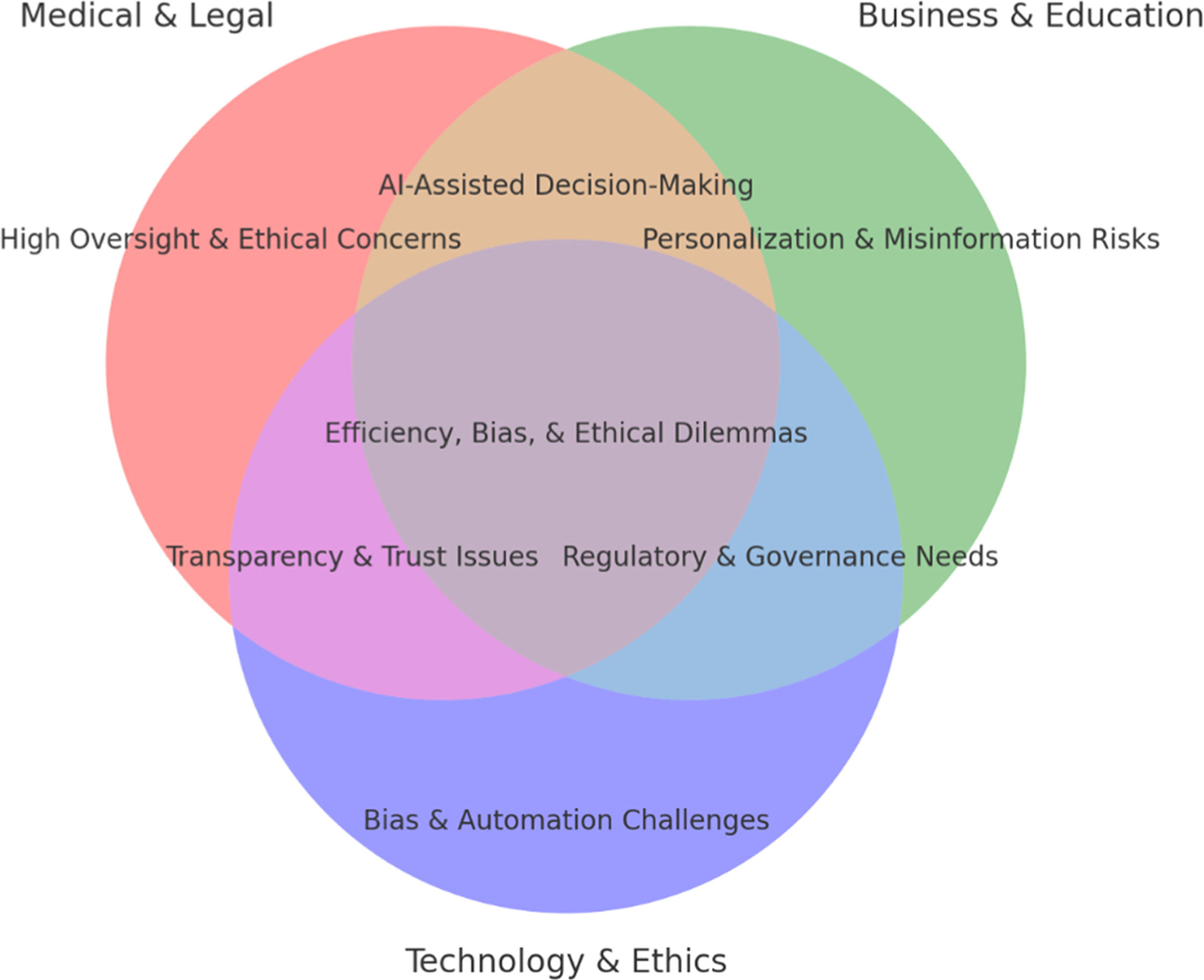

Based on Table 2, the Venn diagram (Fig. 1) illustrates the overlapping and unique challenges of GenAI across three groups of decision-making domains: medical and legal, business and education, and technology and ethics.

This Venn diagram (Fig. 1) provides a visual representation of the shared and unique challenges in GenAI decision-making across sectors, reinforcing the key themes discussed in Table 2. It shows that all domains benefit from efficiency gains but share concerns about bias, transparency, and ethical dilemmas. It also highlights domain-specific challenges, such as high oversight requirements in the medical and legal fields, personalization risks in business and education, and automation challenges in technology and ethics. Understanding these overlaps allows a more detailed breakdown of the specific categories and their interconnections, as follows:

- Three Major Categories:
  - Medical & Legal → High oversight and ethical concerns
  - Business & Education → Personalization and misinformation risks
  - Technology & Ethics → Bias and automation challenges
- Overlapping Areas:
  - Medical & Legal + Business & Education → AI-assisted decision-making
  - Medical & Legal + Technology & Ethics → Transparency and trust issues
  - Business & Education + Technology & Ethics → Regulatory and governance needs
  - All Three Domains (Center Overlap) → Efficiency, bias, and ethical dilemmas

At a higher level, studies across domains recognize GenAI’s ability to rapidly process large volumes of information and thereby improve decision-making efficiency. For instance, in medical decision-making, ChatGPT supports clinicians by summarizing medical guidelines and assisting with licensing exams (Ebrahimian et al., 2023; Miao et al., 2024), much as it aids case law retrieval and regulatory compliance in legal contexts (Cardoso et al., 2024). Similarly, in the business and financial domain, GenAI enhances data retrieval, investment analysis, and risk assessment (Jiang et al., 2024; Yadav et al., 2024). The efficiency of GenAI-driven decision support is thus a unifying theme across these sectors.

However, a major contrast lies in the stakes involved in different decision-making scenarios. In medical and legal contexts, GenAI errors can have profound consequences, such as misdiagnoses (Griewing et al., 2023) or biased legal verdicts (Socol De La Osa & Remolina, 2024). In business and tourism, by contrast, errors tend to be less severe but still consequential. GenAI-generated misinformation in tourism recommendations (Christensen et al., 2024) or financial decision-making (Lakkaraju et al., 2023) may not have the life-altering implications of errors in healthcare or law, but it can lead to monetary losses or poor consumer experiences.

A related contrast appears in the extent to which GenAI can operate autonomously. In business and financial decision-making, GenAI is widely accepted as a decision support tool, especially in supply chain optimization and sustainability initiatives (Ahmad et al., 2024; Gholami, 2024). In medicine, however, although GenAI assists in training medical professionals and structuring clinical knowledge, it remains unreliable for direct patient recommendations because of the risks of bias and misinformation (Shi et al., 2023; Zhang et al., 2023). Similarly, in legal settings, GenAI cannot function as an independent decision-maker because legal decisions require judicial interpretation and ethical considerations (Lin & Clark, 2024).

Another recurring theme concerns biases in GenAI-driven decision-making. In medical applications, GenAI-generated recommendations sometimes reflect demographic bias, leading to inequitable treatment options (McDarby et al., 2024; Schmidl et al., 2024). In business and financial contexts, biases manifest as inconsistencies in financial advisement that can disproportionately affect certain user groups (Lakkaraju et al., 2023). Similarly, in legal decision-making, concerns have been raised that GenAI may reinforce systemic biases in judicial rulings (Socol De La Osa & Remolina, 2024). By contrast, bias in tourism and consumer decision-making relates more to market segmentation and personalization than to structural inequities (Kim et al., 2024).

The role of GenAI also differs markedly between collaborative and ethical decision-making. In collaborative and multidisciplinary decision-making, GenAI is viewed as a facilitator that structures discussions and enhances stakeholder engagement (Joshi et al., 2024; Trillo, Cabrerizo et al., 2024), whereas in ethical decision-making, it is seen more as a challenge to existing norms, raising questions about transparency, accountability, and regulatory compliance (Kromidha & Davison, 2024; Solaiman, 2024). In short, GenAI is a positive force for inclusivity in collaborative decision-making, whereas its limitations in ethical contexts demand scrutiny and caution.

The technological domain significantly overlaps with business and multidisciplinary decision-making but introduces additional complexities regarding GenAI’s real-time adaptability and computational constraints. Studies have highlighted the role of GenAI in enhancing Industry 4.0 systems, robotics, and emergency response decision-making (Kuang et al., 2024; Markova et al., 2024), indicating a greater reliance on GenAI automation compared to other fields. However, this sector faces scalability issues because GenAI-driven frameworks often require high computational power and lack interpretability in decision-making (Xu et al., 2024; Zhong et al., 2023).

Finally, the ethical concerns surrounding GenAI are universal but manifest differently across domains. In law and medicine, ethical considerations revolve around fairness, misinformation, and accountability (Lin et al., 2024; Schmidt et al., 2024), whereas in business and tourism, ethical concerns relate to transparency and consumer trust (Christensen et al., 2024; Kim et al., 2025). The technological domain faces ethical dilemmas concerning GenAI bias in automation and predictive decision-making (Seita & Kurahashi, 2024), whereas in education, GenAI ethics focus on misinformation risks and academic integrity (Bukar et al., 2024a, 2024b). These variations highlight the domain-specific nature of GenAI’s ethical challenges.

Overall, the studies reviewed across these fields indicate that, although GenAI has considerable potential to enhance decision-making efficiency and personalization, its implementation must be carefully managed to mitigate domain-specific risks. Key pillars such as transparency mandates, risk tolerance standards, and stakeholder-aligned ethical frameworks point to the need for thorough monitoring and regulatory systems to ensure the responsible implementation of GenAI. To harness its advantages, GenAI must be supported by human supervision, transparency protocols, and ethical safeguards that promote equity, precision, and trust across all domains.

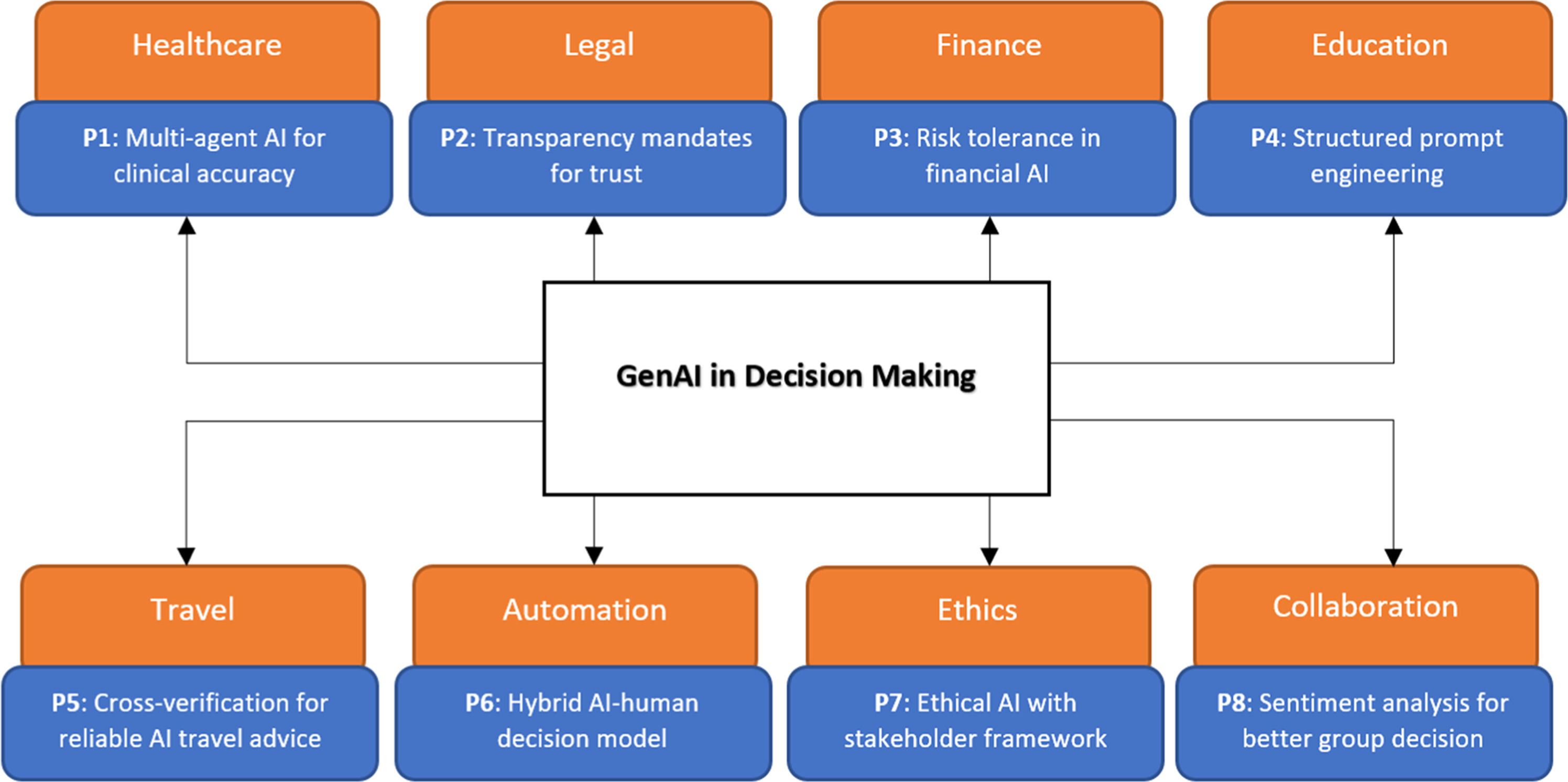

Practical implications and future research directions

Based on this study’s analysis and discussion, Fig. 2 provides a structured overview of the research agenda for GenAI-driven decision-making across domains. It depicts key propositions for eight areas (healthcare, legal, finance, education, collaboration, travel, automation, and ethics), each addressing a specific challenge and a potential solution. The propositions highlight critical aspects such as improving GenAI reliability through multi-agent frameworks, enhancing transparency in legal GenAI applications, personalizing financial advisement, and implementing structured prompt engineering in education. The research agenda also emphasizes sentiment analysis for group decision-making, cross-referencing AI-generated travel recommendations, hybrid GenAI-human models for automation, and ethical oversight frameworks. The propositions depicted in Fig. 2 are discussed and formulated in the remainder of this section.

The integration of multi-agent AI frameworks can significantly enhance the reliability of ChatGPT in medical decision-making by reducing biases and improving diagnostic accuracy. Existing studies have highlighted the strengths of ChatGPT in summarizing medical guidelines and assisting in clinical education (Brügge et al., 2024; Miao et al., 2024). However, ChatGPT’s susceptibility to cognitive biases and misinformation remains a major limitation (Schmidl et al., 2024). Multi-agent AI frameworks, in which multiple AI models engage in collaborative analysis before generating a final recommendation, have been shown to mitigate biases in decision-making (Ke et al., 2024). By incorporating different AI perspectives and cross-referencing data, multi-agent approaches can improve AI-assisted diagnostics and minimize errors. This is especially relevant for high-stakes medical decisions in which GenAI hallucinations and biased outputs can lead to harmful outcomes. Future research should explore how multi-agent frameworks can be refined for various medical applications to ensure that GenAI-driven decisions align with clinical best practices and ethical standards.

Proposition 1 The integration of multi-agent AI frameworks can improve ChatGPT’s clinical decision-making by mitigating biases and enhancing diagnostic accuracy.
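To make the multi-agent idea concrete, the following is a minimal sketch, under stated assumptions, of one simple way to combine several agents: each agent proposes a label with a confidence, the answers are merged by confidence-weighted voting, and low-agreement cases are flagged for clinician review. It is a simplified stand-in, not the framework of Ke et al. (2024). The agents are hypothetical stub functions rather than real model calls, and `aggregate_agents` and the `min_agreement` threshold of 0.6 are illustrative assumptions.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical agent interface: takes a case description and returns
# (proposed_label, confidence in [0, 1]). Real agents would wrap model calls.
Agent = Callable[[str], Tuple[str, float]]


def aggregate_agents(case: str, agents: List[Agent],
                     min_agreement: float = 0.6) -> Tuple[str, float, bool]:
    """Combine independent agent answers by confidence-weighted voting.

    Returns (label, agreement_share, needs_review). needs_review is True when
    the winning label holds less than `min_agreement` of the total weight,
    signalling that the case should go to a clinician instead of being
    decided automatically.
    """
    weights: Counter = Counter()
    for agent in agents:
        label, confidence = agent(case)
        weights[label] += confidence  # each agent votes with its confidence

    total = sum(weights.values())
    if total == 0:  # no agents, or every agent reported zero confidence
        return ("undetermined", 0.0, True)

    label, weight = weights.most_common(1)[0]
    share = weight / total
    return (label, share, share < min_agreement)


if __name__ == "__main__":
    # Stub agents standing in for independently prompted models.
    stub_agents: List[Agent] = [
        lambda case: ("pneumonia", 0.9),
        lambda case: ("pneumonia", 0.7),
        lambda case: ("bronchitis", 0.6),
    ]
    print(aggregate_agents("fever, productive cough", stub_agents))
```

Routing disagreement to a human rather than silently picking a winner mirrors the review's emphasis on human oversight for high-stakes medical decisions.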

The growing role of GenAI in legal decision-making has introduced efficiency benefits as well as significant concerns about transparency and accountability. One of the most pressing challenges is the black-box nature of GenAI-generated legal reasoning, in which GenAI’s decision-making process is not fully explainable (Cardoso et al., 2024). This lack of transparency undermines public trust in GenAI-assisted legal rulings and creates ethical dilemmas regarding fairness and bias (Socol De La Osa & Remolina, 2024). Implementing GenAI transparency mandates, such as requiring AI-generated rulings to include explainable reasoning, sources, and audit trails, could improve legal decision-making by ensuring AI outputs remain interpretable and accountable. Regulatory bodies should establish mechanisms for reviewing GenAI-generated legal recommendations to prevent misinformation and bias from influencing judicial decisions. Such transparency measures would not only enhance public confidence in GenAI-assisted legal processes but also provide legal professionals with reliable tools to complement rather than replace human judgment.

Proposition 2 Implementing GenAI transparency mandates can enhance public trust in GenAI-assisted legal rulings.

AI-driven financial advisement has transformed decision-making by providing real-time insights and predictive analytics. However, existing research indicates that GenAI-generated financial recommendations can exhibit inconsistencies and biases that negatively affect user groups with different investment profiles (Lakkaraju et al., 2023). To address this issue, AI-powered financial advisory systems should incorporate user-specific risk tolerance models that enable personalized decision-making. By tailoring GenAI-driven recommendations to individual investors’ risk appetites, these models can enhance decision accuracy while reducing exposure to biased outputs. Furthermore, incorporating real-time market fluctuations and sentiment analysis can improve the relevance of GenAI-generated financial guidance. This approach aligns with the growing trend of GenAI-driven personalization in financial technology and can help make GenAI a reliable and adaptive tool for investment planning. Future research should focus on refining these models to balance the efficiency of GenAI with user-specific financial objectives.

Proposition 3 GenAI-driven financial advisement should incorporate user-specific risk tolerance models to enhance decision personalization.
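A user-specific risk tolerance model can, in its simplest form, map a questionnaire score to a tolerance tier and filter out recommendations riskier than that tier. The sketch below illustrates only that filtering step. The 0 to 100 questionnaire score, the 1 to 5 risk scale, the 20-point bands, and the names `Recommendation`, `risk_tier`, and `personalize` are all hypothetical assumptions, not drawn from Lakkaraju et al. (2023) or any specific advisory product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recommendation:
    name: str
    risk_level: int  # hypothetical scale: 1 (very low risk) to 5 (very high risk)


def risk_tier(score: float) -> int:
    """Map a risk questionnaire score in [0, 100] to a tolerance tier 1..5.

    The 20-point bands are an illustrative assumption, not a calibrated model.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    return min(5, int(score // 20) + 1)


def personalize(recs: List[Recommendation], score: float) -> List[Recommendation]:
    """Keep only recommendations whose risk does not exceed the user's tier."""
    tier = risk_tier(score)
    return [r for r in recs if r.risk_level <= tier]


if __name__ == "__main__":
    candidates = [
        Recommendation("short-term bond fund", 1),
        Recommendation("broad index fund", 3),
        Recommendation("leveraged sector ETF", 5),
    ]
    for r in personalize(candidates, score=45):
        print(r.name)
```

A fuller system would also fold in the real-time market and sentiment signals mentioned in the paragraph above; the sketch deliberately covers only the personalization filter.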

The effectiveness of GenAI in education depends largely on how prompts are structured and how GenAI-generated content is interpreted. Studies have observed that poorly designed prompts can lead to biased, misleading, or incomplete educational responses (Hiranchan & Kiattisin, 2024). To optimize GenAI’s role in instructional decision-making, educational institutions should implement structured prompt-engineering frameworks that guide GenAI interactions toward accurate, unbiased, and pedagogically sound outputs. Such frameworks could include standardized question formats, cross-referencing of GenAI responses with verified sources, and GenAI feedback mechanisms integrated into learning management systems. Furthermore, structured prompt engineering can enhance GenAI’s ability to adapt to diverse learning needs, making it a more equitable educational tool. Educators should continuously review GenAI-generated content to ensure alignment with curriculum standards and prevent misinformation.

Proposition 4 GenAI-assisted educational decision-making should be guided by structured prompt-engineering frameworks to enhance accuracy and fairness.

ChatGPT and similar GenAI tools have significantly improved travel planning by offering personalized recommendations and real-time assistance (Wong et al., 2023). However, studies have underscored the risks of GenAI-generated misinformation in which users rely on inaccurate or outdated information for travel decisions (Christensen et al., 2024; Kim et al., 2025). To address this issue, GenAI-generated travel recommendations should be cross-referenced with verified sources such as government tourism boards, official hotel websites, and real-time weather and safety data. Implementing automatic verification mechanisms within GenAI-driven travel planning tools can enhance the reliability of recommendations, fostering consumer trust. GenAI models should also be updated regularly to incorporate changes in travel regulations and geopolitical developments.

Proposition 5 GenAI-generated travel recommendations should be cross-referenced with verified sources to enhance trust and accuracy.
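An automatic verification step can be sketched as a comparison between claims extracted from a GenAI-generated itinerary and a verified reference dataset, labeling each claim as confirmed, conflicting, or unverifiable. This is a minimal illustration under loud assumptions: the GenAI output is assumed to be already parsed into key/value claims, the `verified` dictionary is a hypothetical stand-in for data from an official source such as a tourism board, and `cross_reference`, `normalize`, and the three status labels are illustrative names. Case-insensitive exact matching is a deliberately crude comparison.

```python
from typing import Dict


def normalize(value: str) -> str:
    """Crude normalization: ignore case and surrounding whitespace."""
    return value.strip().lower()


def cross_reference(generated: Dict[str, str], verified: Dict[str, str]) -> Dict[str, str]:
    """Label each GenAI-generated claim against a verified reference.

    'confirmed'  : the verified source states the same value
    'conflict'   : the verified source states a different value
    'unverified' : the verified source says nothing about this claim
    """
    status: Dict[str, str] = {}
    for key, value in generated.items():
        if key not in verified:
            status[key] = "unverified"
        elif normalize(verified[key]) == normalize(value):
            status[key] = "confirmed"
        else:
            status[key] = "conflict"
    return status


if __name__ == "__main__":
    # Hypothetical claims extracted from a generated itinerary.
    itinerary_claims = {"museum_hours": "09:00-17:00", "visa_required": "No", "ferry_schedule": "daily"}
    # Hypothetical data standing in for an official tourism-board feed.
    official_data = {"museum_hours": "09:00-17:00", "visa_required": "Yes"}
    print(cross_reference(itinerary_claims, official_data))
```

Conflicting or unverifiable claims could then be surfaced to the traveler with a warning instead of being presented as fact.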

GenAI has become a crucial component of automation and real-time decision-making; however, its adaptability remains a significant challenge. In sectors such as robotics, industrial automation, and emergency response, GenAI-driven systems must function reliably in dynamic environments (Kuang et al., 2024; Markova et al., 2024). Hybrid GenAI-human decision-making models offer a promising solution by combining the computational efficiency of GenAI with human adaptability. These models allow human operators to intervene when GenAI systems encounter unfamiliar scenarios or fail to process real-time data effectively. Such an approach improves decision accuracy while ensuring that GenAI does not operate autonomously without oversight. Future research should explore optimal configurations for GenAI-human collaboration to maximize efficiency in real-time applications.

Proposition 6 Hybrid GenAI-human decision-making models can enhance the adaptability of GenAI to real-time automation tasks.
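One common way to realize a hybrid GenAI-human model is confidence-based deferral: the system acts on its own only when it is confident and the scenario is familiar, and otherwise hands its proposal to a human operator. The sketch below assumes that an upstream component already supplies a confidence score and a `familiar_scenario` flag; `hybrid_decide`, the `ask_human` callback, and the 0.8 threshold are hypothetical choices for illustration, not a configuration reported in the cited studies.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str
    confidence: float
    decided_by: str  # "model" or "human"


def hybrid_decide(model_action: str, confidence: float, familiar_scenario: bool,
                  ask_human: Callable[[str], str], threshold: float = 0.8) -> Decision:
    """Act autonomously only when the model is confident and the scenario is familiar.

    Otherwise the proposed action is passed to a human operator, who may accept
    it or substitute a different action.
    """
    if familiar_scenario and confidence >= threshold:
        return Decision(model_action, confidence, "model")
    human_action = ask_human(model_action)  # human sees the model's proposal
    return Decision(human_action, confidence, "human")


if __name__ == "__main__":
    # A stub operator that overrides any proposal with a safe stop.
    operator = lambda proposal: "halt and inspect"
    print(hybrid_decide("continue assembly", 0.95, True, operator))
    print(hybrid_decide("continue assembly", 0.55, True, operator))
```

Passing the model's proposal to the operator, rather than asking from scratch, keeps the efficiency benefit while leaving the final call with a human in unfamiliar situations.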

Ethical concerns surrounding GenAI-driven decision-making often stem from bias, lack of accountability, and transparency issues (Goktas, 2024; Solaiman, 2024). These concerns can be addressed by incorporating stakeholder-aligned ethical frameworks into GenAI decision-making systems. For instance, the SKIG framework aligns GenAI-generated decisions with stakeholder values, improving fairness and accountability (Sel et al., 2024). By integrating similar ethical oversight mechanisms, GenAI models can minimize the risk of unintended consequences in high-stakes decisions. Policymakers and industry leaders should collaborate to establish ethical GenAI guidelines that promote transparency and equitable outcomes across sectors.

Proposition 7 Ethical GenAI decision-making should incorporate stakeholder-aligned frameworks to ensure fairness and accountability.

Effective decision-making in collaborative environments often requires balancing diverse perspectives while mitigating biases and conflicts. GenAI-enhanced sentiment analysis can play a crucial role in facilitating constructive discussions by identifying and addressing hostility in group decision-making processes (Trillo, Cabrerizo et al., 2024). Current research indicates that AI’s ability to detect sentiments and adjust discourse can significantly reduce groupthink and polarization, thereby improving consensus building (Joshi et al., 2024). By integrating GenAI-driven sentiment analysis into collaborative platforms, decision-makers can create more inclusive environments that prioritize evidence-based discussions. This approach can be particularly beneficial in corporate, medical, and policy-driven decision-making settings in which stakeholder alignment is essential. However, the effectiveness of GenAI in moderating discussions must be continuously evaluated to prevent algorithmic biases from influencing outcomes.

Proposition 8 GenAI-enhanced sentiment analysis can improve group decision-making by mitigating hostility and fostering constructive discussions.
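The sentiment-based weighting described by Trillo, Cabrerizo et al. (2024), in which hostile contributions count for less in the group outcome, can be approximated very roughly as follows. This is a simplified illustration and not their model: the linear mapping from sentiment in [-1, 1] to weight, the `floor` of 0.1, and the names `sentiment_weights` and `collective_preference` are assumptions, and the sentiment scores themselves would come from a separate sentiment-analysis step that is not shown.

```python
from typing import Dict


def sentiment_weights(sentiment: Dict[str, float], floor: float = 0.1) -> Dict[str, float]:
    """Turn each expert's discussion sentiment in [-1, 1] into a normalized weight.

    More hostile (negative) contributions receive smaller weights, but no raw
    weight falls below `floor`, so no expert is silenced entirely.
    """
    raw = {expert: max(floor, (s + 1) / 2) for expert, s in sentiment.items()}
    total = sum(raw.values())
    return {expert: w / total for expert, w in raw.items()}


def collective_preference(prefs: Dict[str, Dict[str, float]],
                          weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of expert preference scores for each alternative.

    Assumes every expert scores the same alternatives and has a weight.
    """
    if not prefs:
        return {}
    alternatives = next(iter(prefs.values())).keys()
    return {alt: sum(weights[e] * prefs[e][alt] for e in prefs) for alt in alternatives}


if __name__ == "__main__":
    # Hypothetical sentiment scores from a separate sentiment-analysis step.
    weights = sentiment_weights({"A": 0.8, "B": 0.6, "C": -0.9})
    prefs = {
        "A": {"option_x": 0.9, "option_y": 0.2},
        "B": {"option_x": 0.7, "option_y": 0.4},
        "C": {"option_x": 0.1, "option_y": 1.0},
    }
    print(collective_preference(prefs, weights))
```

Flooring weights instead of excluding hostile experts keeps every voice in the aggregate, in line with the concern for equitable stakeholder participation; as noted above, such weighting should itself be monitored so that it does not introduce algorithmic bias.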

GenAI has transformed decision-making across multiple sectors; however, the current research landscape remains fragmented. This literature analysis reveals that GenAI’s applications range from medical diagnostics and legal adjudication to business analytics and educational frameworks, each demonstrating benefits such as enhanced efficiency, rapid data processing, and improved operational accuracy. However, these advancements are tempered by significant challenges, including pervasive biases, the risk of misinformation, and the need for robust regulatory oversight. The divergent results across domains highlight the need for integrative research that consolidates scattered insights into a unified framework capable of harnessing the potential of GenAI while mitigating its inherent risks. As decision-making processes become increasingly data-driven and GenAI-augmented, maintaining a balance between technological innovation and ethical accountability is paramount. We highlight two questions to guide future research in this domain: How can future advances in GenAI redefine the core principles of decision-making in high-stakes environments? What innovative governance mechanisms can be established to ensure that GenAI’s influence on decision-making remains transparent, fair, and resilient against bias and misinformation?

In sum, this work contributes to the growing body of knowledge by demonstrating how GenAI transforms decision-making through enhanced decision accuracy, greater efficiency, and personalized solutions. First, GenAI streamlines complex decision-making processes by improving decision accuracy, particularly in healthcare and business. Second, its rapid data processing and operational improvements demonstrate its efficiency, particularly in judicial adjudication and teaching. Third, its customization capabilities provide personalized solutions, especially in healthcare and tourism; however, strong ethical measures must be implemented to ensure fairness and minimize bias.

Disclosure

This manuscript has been edited with the assistance of GPT-4o for grammar correction and stylistic improvements. All content, intellectual contributions, and final interpretations remain the responsibility of the author, who has thoroughly reviewed and approved the final version of the manuscript.

CRediT authorship contribution statement

Mousa Albashrawi: Writing – review & editing, Writing – original draft, Validation, Supervision, Project administration, Methodology.