The rapid rise of generative artificial intelligence (AI), particularly Chat Generative Pre-trained Transformer (ChatGPT), is transforming how knowledge is produced and innovation unfolds across disciplines. To capture this shift, we analyze 13,942 peer-reviewed publications with Latent Dirichlet Allocation, identifying 120 distinct research topics and grouping them into nine thematic clusters. Drawing on these clusters, we propose a four-lane conceptual model of “ChatGPT-driven value creation,” which portrays the AI pipeline as a sequential, feedback-linked process of knowledge conversion: (i) emerging technologies, (ii) data engineering and algorithmic development, (iii) perception-and-compliance filtering, and (iv) domain adoption. The model reveals where generative AI currently fuels innovation, most prominently in education, healthcare, and business, and where unresolved issues persist, including ethics, security, and misinformation. It also uncovers regional differences in research priorities, signaling diverse innovation dynamics across continents. Overall, by coupling large-scale topic mapping with a theoretically grounded architecture, this study offers scholars, policymakers, and practitioners a coherent framework for harnessing ChatGPT’s potential while addressing governance demands and quality constraints. Effectively, the conceptual model provides both a diagnostic lens for research and a roadmap for responsible, knowledge-intensive deployment of generative AI.

Artificial intelligence (AI) is one of the most rapidly evolving technological domains, with generative AI attracting particular interest for its potential to transform various sectors (Dwivedi et al., 2023; Deng et al., 2025). Popular attention to generative AI took off on November 30, 2022, when OpenAI introduced the Chat Generative Pre-trained Transformer, or ChatGPT, based on the GPT-3.5 model. ChatGPT reached one million users in merely five days, an unprecedented adoption rate. This rapid uptake catalyzed an immediate and highly heterogeneous research response across disciplines, sectors, and regions.

Since then, ChatGPT has established itself as one of the most widely used generative AI tools, with applications in diverse academic and professional fields from linguistics, psychology, and education (Kohnke et al., 2023; Suriano et al., 2025) to medicine (Sallam, 2023; Tan et al., 2024) and management (Wamba et al., 2024; Budhwar et al., 2023). In particular, its versatility and user-friendly interface have facilitated its extensive adoption, making it a central focus of scientific exploration and practical innovation. Yet, the resulting scholarship is fragmented: Studies differ in theoretical lenses, methodological rigor, domain-specific metrics, and regulatory contexts, making cumulative knowledge development difficult. Integrative cross-domain syntheses are scarce. Moreover, the literature rarely links technical capability trajectories to organizational adoption, governance pressures, or domain outcomes. These relationships are central to socio-technical systems (STS) theory, diffusion of innovations (DoI), technology-organization-environment frameworks, and emerging Responsible/Trustworthy AI research (Wamba et al., 2024; Agrawal, 2024).

Still, the breadth and depth of ongoing research on ChatGPT and its evolving ecosystem are vast. As generative AI technologies become increasingly embedded in everyday practices, an integrative, data-driven mapping that can also support theory building is urgently needed (Ma, 2024; Abadie et al., 2024). Fragmentation across disciplines, geographical regions, and impact levels hampers our ability to understand how large language model (LLM) technologies diffuse, are governed, and generate downstream educational, clinical, and managerial outcomes.

In this study, we move beyond description: we assemble a large cross-disciplinary corpus of ChatGPT-related publications; identify latent topics using Latent Dirichlet Allocation (LDA); enrich them with metadata on disciplinary subject areas, geographic authorship, topic size, and citation impact; and synthesize the results into a systematic framework. We first apply large-scale topic modeling to the ChatGPT research corpus, allowing previously uncharted themes and relationships to surface inductively. Drawing on these empirical patterns, we construct a four-lane conceptual model that treats the generative AI pipeline itself as a knowledge-conversion mechanism: curated data become algorithms, algorithms become quality-assured artefacts, and those artefacts translate into domain-level capabilities through perception-and-compliance filters. We concentrate the discussion on the most influential themes and link each lane to concrete managerial levers, such as model-card transparency, inter-lane hand-over artefacts, and feedback metrics. In doing so, we demonstrate how emergent knowledge can be systematically converted into sustained innovative capacity.

Recent research has also begun anchoring the study of ChatGPT adoption and use within well-established theoretical frameworks, providing a more systematic basis for understanding this rapidly evolving phenomenon. Among the many available theoretical perspectives, we highlight those most relevant to our research focus, as they capture both the technological and the socio-organizational dynamics of ChatGPT adoption. Drawing on DoI theory, Abdalla et al. (2024) demonstrate that ChatGPT adoption is shaped by perceived relative advantage, compatibility with user needs, and the ease with which its benefits can be observed, emphasizing the interplay between individual utility and peer influence. Complementing this, design science research (DSR) has been applied to create generative AI–based artefacts for organizational health and safety, showing how LLMs can serve as experimental workbenches to test and validate new methodologies (Falegnami et al., 2024). From a broader STS perspective, Liu et al. (2024) highlight that adoption outcomes are contingent on the interaction between technical affordances and social environments, wherein users' affective and cognitive responses critically mediate behavioral engagement.

Other scholars have extended classical technology adoption models. Technology Acceptance Model (TAM) studies reveal that, beyond perceived usefulness and ease of use, trust, social influence, and perceived risks are central in shaping user intentions towards technologies (Camilleri, 2024). Similarly, Unified Theory of Acceptance and Use of Technology (UTAUT) research underscores that performance expectancy, effort expectancy, and facilitating conditions remain relevant. Studies also reveal that user anxiety can significantly hinder adoption, with variations across cultural contexts (Budhathoki et al., 2024). Given the complementarity of these frameworks, their insights could be synthesized into an integrative model that connects the technological, organizational, and human dimensions of ChatGPT adoption. Together, these theoretical perspectives illustrate that ChatGPT research is moving beyond descriptive accounts toward more structured, theory-driven inquiry.

The literature review presented in the following section is based on the systematic search strategy described in Section 2 (Methodology), which relied on the Scopus database, with publications identified using the keywords “ChatGPT” and “Generative AI”.

Literature review

The rapid advancement of generative AI, particularly LLMs like ChatGPT, has sparked a growing literature across various academic disciplines. Because these models can generate coherent and contextually relevant text, their applications have been explored in fields ranging from education and healthcare to business and the arts (Zhu et al., 2024; Lo et al., 2024). While extant studies address various aspects of how ChatGPT functions, their scope and depth are limited (Deng et al., 2025). A significant number of studies examine ChatGPT's use in education and science. Some, such as Dwivedi et al. (2023) and Ma (2025), suggest that ChatGPT currently has its greatest and most transformative impact on scientific research. These authors emphasize that, on the one hand, AI can support researchers at many levels and accelerate and improve research processes; on the other hand, it can be misused for unethical purposes. Hence, they argue that it is imperative to enact new laws to govern these tools. Similarly, Ray (2023) suggests that ChatGPT has already played a pivotal role in advancing scientific research and holds substantial potential to revolutionize it further. By addressing the challenges and ethical concerns associated with its use, researchers can responsibly harness the power of AI to push the boundaries of human knowledge and understanding. However, Lo (2023) highlights several risks associated with the large-scale use of ChatGPT in education and recommends its careful and well-considered implementation. A further review of studies on ChatGPT's impact on education and science shows that authors broadly agree on the following points (Lund & Wang, 2023; Kohnke et al., 2023; Grassini, 2023; Montenegro-Rueda et al., 2023; Alfiras et al., 2024; Song et al., 2024; Heung & Chiu, 2025; Suriano et al., 2025):

- ChatGPT can significantly increase the efficiency of both scientists and students.
- However, legitimate concerns remain about the accuracy and reliability of the generated content, especially in education and research.
- Therefore, further research on integrating ChatGPT and modern technologies in the academic environment is recommended.
- Guidelines and standards for the use of AI in education and science need to be developed.

García-López et al. (2025) highlight the importance of adopting a proactive approach based on the continuous updating of policies and promotion of additional research. The proposed frameworks should be flexible and adaptable to diverse educational contexts. Therefore, continued research on AI’s effectiveness in education is essential to meet the dynamic challenges and changing societal needs (Kalla et al., 2023). Ultimately, the goal is to create a balance between the innovative use of AI and maintaining ethical standards while providing effective support tools for researchers and students.

Another widely researched area is the impact of ChatGPT on healthcare. Several authors note the increasing importance of AI in improving healthcare (Padovan et al., 2024; Xiao et al., 2023; Patrinos et al., 2023; Neha et al., 2024). Sallam (2023) emphasizes that ChatGPT will inevitably be widely used in medicine but argues for a cautious and informed approach to its use. Similarly, Gande et al. (2024) recognize that ChatGPT's presence in the medical literature is growing rapidly across various specialties; however, concerns related to safety, privacy, and accuracy persist. More research is needed to assess its suitability for patient care and its implications for non-medical use. Tan et al. (2024) note that, owing to its unreliability, ChatGPT still has a long way to go before it can be truly implemented in clinical practice. Xiao et al. (2023) indicate that while ChatGPT is a promising tool, its development and implementation require extraordinary precision, thoughtful analysis, and ongoing vigilance. In turn, Wang et al. (2023) describe the ethical challenges posed by ChatGPT's use in relationships with patients, emphasizing, among other things, the lack of regulations on legal liability in the event of patient harm caused by ChatGPT and potential violations of patient privacy related to data collection by AI.

Several studies also highlight the challenges that ChatGPT poses in various aspects of running a business. Wamba et al. (2023) claim that ChatGPT is transforming business processes and models in virtually all types of industries and improving their efficiency. Studies also emphasize an urgent need for further research to better understand ChatGPT adoption and diffusion across industries in operations and supply chain management (Wamba et al., 2024). According to Richey et al. (2023), as society progresses further into the digital age, supply chains and logistics are poised for a profound technological transformation driven by AI advancements, exemplified by applications such as ChatGPT. This evolution opens a vast and largely unexplored landscape of research opportunities, inviting rigorous academic inquiry into diverse aspects of supply chain processes, operational practices, structural reconfiguration, inter-organizational coordination, and innovation. Next, Budhwar et al. (2023) examine the impact of ChatGPT on human resource management (HRM), emphasizing that AI can revolutionize traditional HRM processes and offer new tools and methods in areas such as recruitment, training, and employee evaluation. Paul et al. (2023), Tan et al. (2025), and Carvalho and Ivanov (2024) claim that ChatGPT can revolutionize consumer research and customer service. These authors also emphasize that the prospective research agenda is extensive, offering a rich array of theories, models, and frameworks that may be leveraged across disciplines, including marketing science, statistics, psychology, economics, sociology, and the natural sciences. Similarly, Sirithumgul (2023) and Abadie et al. (2024) present examples of many industries likely to be affected by ChatGPT, including marketing and advertising, retail, banking and finance, news and media, e-commerce, logistics, gaming, software engineering, social media, legal services, and telecommunications. Wu et al. (2024) claim that ChatGPT can enhance corporate innovation capabilities and improve corporate performance on several levels. Beckmann and Hark (2024), Talaei-Khoei et al. (2024), Khan et al. (2024), and Wamba et al. (2024) emphasize the lack of research on ChatGPT's effect on various business models. This gap is particularly important because, as Leea et al. (2023) point out, ChatGPT, together with other AI technologies and diverse systems, can create new business models and services.

Regardless of whether the issues discussed concern healthcare, education, business broadly understood, or scientific research, scholars largely agree that further in-depth discussion among stakeholders is necessary to outline future research directions and to establish a code of ethics guiding the use of LLMs in healthcare, business, and academia. Moreover, this literature review supports the thesis that further research on ChatGPT and generative AI across various applications and challenges is particularly needed. While researchers emphasize the significant potential of this technology, they also highlight numerous areas that require further analysis and development (AlQershi et al., 2025). Although many studies on ChatGPT cover diverse issues, including its potential, challenges, applications, ethics, impact on society, and risks, the topic is still relatively young and developing rapidly. Accordingly, the vast majority of journals, publishers, and institutions still hold a conservative attitude towards ChatGPT's application and carefully restrict its use in scientific research (Tan et al., 2024). Moreover, ChatGPT research is highly interdisciplinary, involving disciplines from computer science, medicine, and management to sociology and law, which makes it an exceptionally broad and little-explored research field.

Overall, ChatGPT is an intensively developed tool that requires further exploration to fully understand its impact on society, which appears increasingly broad and difficult to predict in the long term. We concur with scholars such as Dwivedi et al. (2023), Roumeliotis and Tselikas (2023), Mondal et al. (2023), and Bahroun et al. (2023), who recognize that ChatGPT research still raises more questions than it answers and that the potential of ChatGPT has not been fully explored. In addition, the rapid development of AI will pose increasing challenges to science and business (Currie, 2023). Therefore, continuous research on this dynamically developing concept is needed to fill research gaps, ask new research questions, and keep up with the changing reality (Ali et al., 2024). In particular, the following research topics need in-depth investigation: the long-term effects of using AI in education, the psychological effects of long-term ChatGPT use, the dynamics of collaboration between ChatGPT and human collaborators (Abadie et al., 2024), and the adaptation of AI to the specificity of local cultures, norms, and values.

Research gap

The literature on generative AI, particularly ChatGPT, and its applications across domains such as education, healthcare, and business is growing rapidly (Dwivedi et al., 2023; Wamba et al., 2023; Xiao et al., 2023). Yet, we lack a comprehensive overview that systematically maps and characterizes the evolution and structure of research on ChatGPT. The field's interdisciplinary nature, spanning multiple scientific disciplines, makes it challenging to identify key thematic areas and assess their development over time (Deng et al., 2025).

Traditional review methods such as systematic literature reviews (SLR) and bibliometric literature reviews (BLR) have provided valuable insights into ChatGPT’s role in specific domains. However, they also present limitations when analyzing a rapidly evolving research landscape. SLRs often focus on a narrower set of studies, which may overlook emerging topics and trends (Lo, 2023). Meanwhile, BLRs, despite capturing broad publication and citation trends, lack the ability to identify latent themes shaping the field (Sallam, 2023). These gaps indicate the need for a more systematic, data-driven approach to understanding the research structure of ChatGPT. Extant reviews and domain-specific commentaries remain largely descriptive and siloed, offering limited integration across disciplinary, impact, and geographic dimensions of ChatGPT scholarship.

Beyond methodological challenges, the extant ChatGPT literature also exhibits notable theoretical fragmentation. Several studies have already applied established frameworks, such as DoI (Abdalla et al., 2024), DSR (Falegnami et al., 2024), STS theory (Liu et al., 2024), TAM (Camilleri, 2024), and UTAUT (Budhathoki et al., 2024). However, these perspectives are typically examined in isolation. Each framework highlights distinct adoption drivers, ranging from relative advantage and peer influence (DoI) and experimental prototyping of AI-based artefacts (DSR) to the socio-technical interplay of user behavior (STS), perceived usefulness and risks (TAM), and facilitating conditions and anxiety effects (UTAUT). Yet little effort has been made to synthesize these complementary insights into a higher-order framework. Importantly, the specific research topics uncovered in large-scale mappings of ChatGPT scholarship are likely to align closely with particular theoretical lenses, such as innovation-related topics with DoI, organizational design topics with DSR, or user-behavioral themes with TAM, UTAUT, or STS. By systematically charting the research landscape, one can not only identify latent themes but also connect them to their most relevant theoretical perspectives, thereby creating opportunities to bridge these frameworks. Such an integration can move beyond parallel theorizing toward a unified conceptual model that captures the technological, organizational, and human dimensions of ChatGPT adoption and use, enabling cumulative theoretical development across domains.

Given the complexity and scale of ChatGPT applications, novel methodological approaches, such as topic modeling based on machine learning techniques like LDA, make it possible to analyze large datasets and uncover latent topics at scale. These tools have been used successfully in other disciplines, including supply chain management and business research (Wamba et al., 2024; Richey et al., 2023). However, they remain underutilized for holistically mapping ChatGPT research. Recent studies suggest that integrating AI-powered literature analysis can reveal unexplored research directions and better systematize knowledge in this domain (Ma, 2024; Abadie et al., 2024). To address this shortcoming, we combine large-scale LDA topic modeling with disciplinary subject-area, citation-impact, and geographic metadata to build an integrative evidence base for cross-domain comparison.

Accordingly, this study primarily seeks to create a comprehensive scientific map of the ChatGPT research landscape. Such a map can not only identify existing topics but also highlight those with the greatest potential for future growth and systematization. Finally, we synthesize the empirical patterns into a socio-technical framework that moves the contribution beyond description toward cumulative theoretical development. To achieve this goal, the research is structured around the following key research questions:

- RQ1: How has research on generative AI and ChatGPT evolved, and what is its structural composition?
- RQ2: What are the latent topics related to ChatGPT and how can they be holistically characterized?
- RQ3: How can the empirical patterns identified in RQ1 and RQ2 be synthesized into a higher-order conceptual framework that organizes ChatGPT-related research across technical, organizational, societal/governance, and application domains, and supports theoretical development?

By addressing these research questions, this study seeks to provide a more structured understanding of ChatGPT's research landscape, identifying its key themes, trends, and gaps. The findings can support scholars, policymakers, and practitioners in navigating the rapidly evolving field of generative AI, ensuring that research efforts are aligned with emerging challenges and opportunities.

Methodology

Data acquisition

The data were obtained from the Scopus database. Our aim was to collect abstract records related to the defined research topic. Together with the relevant metadata, these data were subjected to further analyses: geographical analysis, analysis of the number of articles, analysis of the number of citations, analysis of latent topics and their trends, and analysis of the most significant topics. Obtaining representative results required a large number of documents on our research topic, so the first step was to define the search query correctly. Our search query was defined as follows: (chatgpt OR "chat gpt") OR ("gpt-3" OR "gpt3") OR ("gpt-3.5" OR "gpt3.5") OR ("gpt-4" OR "gpt-4.0" OR "gpt4") OR ("gpt-4o" OR "gpt4o"). We applied this query to the article title, abstract, and keywords fields. We executed the search on November 7, 2024, obtaining 16,954 documents.
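As a rough local check on exported records, the query above can be approximated by one case-insensitive pattern applied to the title, abstract, and keyword fields. This is a minimal sketch under our own assumptions: `matches_query` and its arguments are hypothetical, and the pattern does not reproduce Scopus's own tokenization or phrase-matching rules.

```python
import re

# Query terms from the Scopus search above; any match counts (logical OR).
QUERY_TERMS = [
    "chatgpt", "chat gpt",
    "gpt-3", "gpt3",
    "gpt-3.5", "gpt3.5",
    "gpt-4", "gpt-4.0", "gpt4",
    "gpt-4o", "gpt4o",
]

# re.escape keeps "-" and "." literal; the lookbehind rejects matches glued to a
# preceding letter or digit. IGNORECASE mimics a case-insensitive search.
QUERY_PATTERN = re.compile(
    r"(?<![a-z0-9])(?:" + "|".join(re.escape(t) for t in QUERY_TERMS) + ")",
    re.IGNORECASE,
)


def matches_query(title, abstract, keywords):
    """Approximate a title/abstract/keyword search for one record (hypothetical helper)."""
    text = " ".join(field or "" for field in (title, abstract, keywords))
    return QUERY_PATTERN.search(text) is not None


print(matches_query("Using GPT-4o in the classroom", "", ""))
print(matches_query("Neural machine translation", "We study BERT.", ""))
```

The first call finds "GPT-4o" in the title; the second record mentions none of the query terms.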

Besides the data itself (document abstracts), the dataset also contained other metadata used in the analytical process: Article title, authors, keywords, citation count, year of publication, source title, and other auxiliary information.

Subsequently, these data were linked to a dataset that assigns each source title to one or more of the 26 subject areas defined in Scopus.
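Because a source title can belong to several subject areas, a document is counted once in every area of its source, so area totals can exceed the number of documents. The minimal sketch below illustrates this multi-label linkage; the field name `source_title`, the toy mapping, and the helper name are hypothetical and not taken from the study's data.

```python
from collections import Counter


def count_documents_per_area(documents, source_to_areas):
    """Count documents per subject area when a source may belong to several areas.

    documents: iterable of dicts with a "source_title" key (hypothetical field name).
    source_to_areas: dict mapping a source title to its list of subject areas.
    """
    counts = Counter()
    unmatched = 0
    for doc in documents:
        areas = source_to_areas.get(doc["source_title"])
        if not areas:
            unmatched += 1  # source title absent from the classification table
            continue
        counts.update(set(areas))  # set() prevents double-counting a repeated area
    return counts, unmatched


docs = [
    {"source_title": "Journal A"},
    {"source_title": "Journal B"},
    {"source_title": "Unlisted Proceedings"},
]
areas = {
    "Journal A": ["Computer Science", "Social Sciences"],
    "Journal B": ["Computer Science"],
}
counts, unmatched = count_documents_per_area(docs, areas)
print(counts["Computer Science"], counts["Social Sciences"], unmatched)  # 2 1 1
```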

Data preprocessing

Before analyzing the data and metadata, we filtered the dataset, because it contained certain inconsistencies and incomplete observations. Starting from the input dataset of 16,954 records, we removed records that lacked a completed Authors field (386 records removed), were in a language other than English (440 records removed), had an abstract defined as "[No abstract available]" (2178 records removed), had the document type set to retracted (1 record removed), contained one of the following strings in the Title: "Retracted | retracted | RETRACTED | Retraction | RETRACTION | retraction" (2 records removed), or contained the word Erratum in the Title (5 records removed). In total, 3012 records were removed, and the resulting dataset of 13,942 records was used for further analyses.
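The removal counts sum to 386 + 440 + 2178 + 1 + 2 + 5 = 3012, and 16,954 - 3012 = 13,942, which is consistent with applying the filters one after another. The sketch below mirrors such a sequence and reports how many records each step removes. The column names are assumptions modeled on a Scopus CSV export (including the placeholder "[No author name available]"), and the exact-match language test is our simplification; neither is confirmed by the text.

```python
import re

# Title strings that flag a retraction notice, as listed in the text.
RETRACTION_PATTERN = re.compile(r"Retracted|retracted|RETRACTED|Retraction|RETRACTION|retraction")


def missing_authors(record):
    # Assumption: an empty field or Scopus's placeholder counts as "not completed".
    authors = (record.get("Authors") or "").strip()
    return authors in ("", "[No author name available]")


# Ordered (label, predicate) pairs; a predicate returning True removes the record.
FILTERS = [
    ("missing authors", missing_authors),
    ("non-English", lambda r: r.get("Language of Original Document") != "English"),
    ("no abstract", lambda r: (r.get("Abstract") or "").strip() == "[No abstract available]"),
    ("retracted type", lambda r: r.get("Document Type") == "Retracted"),
    ("retraction in title", lambda r: RETRACTION_PATTERN.search(r.get("Title") or "") is not None),
    ("erratum in title", lambda r: "Erratum" in (r.get("Title") or "")),
]


def filter_records(records):
    """Apply FILTERS in order and report how many records each step removed."""
    kept = list(records)
    removed = {}
    for label, is_excluded in FILTERS:
        before = len(kept)
        kept = [r for r in kept if not is_excluded(r)]
        removed[label] = before - len(kept)
    return kept, removed


def make(**overrides):
    """Build a toy record (hypothetical values) that passes every filter by default."""
    base = {"Authors": "Doe J.", "Language of Original Document": "English",
            "Abstract": "We study ChatGPT.", "Document Type": "Article",
            "Title": "ChatGPT in class"}
    base.update(overrides)
    return base


toy = [make(), make(Authors=""), make(**{"Language of Original Document": "German"}),
       make(Abstract="[No abstract available]"), make(**{"Document Type": "Retracted"}),
       make(Title="RETRACTED: ChatGPT study"), make(Title="Erratum to: ChatGPT study")]
kept, removed = filter_records(toy)
print(len(kept), removed)
```

Each toy record after the first is built to trip exactly one filter, so one record survives and every step removes one.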

Latent Dirichlet allocation

We chose LDA to identify latent topics in the corpus of abstracts. LDA is an unsupervised machine learning method that works on the principle of probabilistically clustering documents into a set of topics (Blei et al., 2003).

A corpus is a set of multiple documents. Each document is a mixture of multiple topics, and each topic is represented in a given document with a certain probability. The topic probabilities of a document must therefore satisfy (Blei et al., 2003):

$$\sum_{i=1}^{K} \theta_{di} = 1,$$

where $\theta_{di}$ is the probability of topic $i$ in document $d$ and $K$ is the number of topics. Each individual topic is, in turn, a mixture of multiple words, with each word occurring in a given topic with a certain probability (Blei et al., 2003).

Let $D$ be a text corpus, which in our case includes $M$ documents. Let $K$ be the number of latent topics to be extracted. Let $w = \{w_1, w_2, \ldots, w_n\}$ be the set of all words in the corpus, and let $w_{dn}$ denote the $n$-th word in document $d$. Let $z_{dn}$ denote the topic to which the $n$-th word in document $d$ is assigned, i.e., $z_{dn} = k$ (each word occurrence is assigned to exactly one topic). Let $\theta_d$ be the topic distribution for document $d$, where $\theta_{di}$ is the probability that topic $i$ occurs in document $d$. Let $\phi_k$ be the word distribution for topic $k$, where $\phi_{k,v}$ is the probability that vocabulary word $v$ occurs in topic $k$.

Following the principles defined in Blei et al. (2003), and treating the topic-word distributions $\phi_k$ as latent variables as in Blei and Lafferty (2009) and Ponweiser (2012), the generative process of LDA can be written as follows:

1. For every topic $k = 1, \ldots, K$:
   a. Choose a distribution of words in the topic, $\phi_k \sim \mathrm{Dir}(\beta)$.
2. For every document $d = 1, \ldots, M$:
   a. Choose a distribution of topics in the document, $\theta_d \sim \mathrm{Dir}(\alpha)$.
   b. For every word $n$ in document $d$:
      i. Choose a topic $z_{dn} \sim \mathrm{Multinomial}(\theta_d)$.
      ii. Given the chosen topic, choose a word $w_{dn} \sim \mathrm{Multinomial}(\phi_{z_{dn}})$.
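To make the generative steps concrete, the following minimal sketch simulates them with the Python standard library. It assumes symmetric priors (a single scalar α and β), draws Dirichlet samples by normalizing Gamma variates, and uses a toy vocabulary; the function names are hypothetical, and the code generates data from the model rather than estimating the model from data.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw from Dir(alpha) by normalizing independent Gamma(alpha_i, 1) variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [x / total for x in draws]


def generate_corpus(K, vocab, doc_lengths, alpha, beta, seed=0):
    """Simulate LDA's generative process with symmetric scalar priors alpha and beta."""
    rng = random.Random(seed)
    V = len(vocab)
    # Step 1a: a word distribution phi_k ~ Dir(beta) for every topic k.
    phi = [sample_dirichlet([beta] * V, rng) for _ in range(K)]
    theta, z, w = [], [], []
    for n_words in doc_lengths:
        # Step 2a: topic proportions theta_d ~ Dir(alpha) for document d.
        theta_d = sample_dirichlet([alpha] * K, rng)
        # Step 2b-i: a topic z_dn ~ Multinomial(theta_d) for every word position.
        z_d = rng.choices(range(K), weights=theta_d, k=n_words)
        # Step 2b-ii: a word w_dn ~ Multinomial(phi_{z_dn}) given its topic.
        w_d = [rng.choices(vocab, weights=phi[k])[0] for k in z_d]
        theta.append(theta_d)
        z.append(z_d)
        w.append(w_d)
    return phi, theta, z, w


phi, theta, z, w = generate_corpus(K=3, vocab=["ai", "model", "student", "patient"],
                                   doc_lengths=[5, 8], alpha=0.5, beta=0.5, seed=1)
print(w)  # two simulated documents of 5 and 8 words
```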

A graphical representation of the LDA model is shown in Fig. 1. The model hyperparameters are shown in black, latent (hidden) variables in green, and observed variable in red.

Graphical representation of the LDA model (adapted from Blei & Lafferty, 2009; Ponweiser, 2012).

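The joint distribution of all variables, both latent (topic mixtures for documents $\theta$, word mixtures for topics $\phi$, and word assignments to topics $z$) and observed (word set $w$), given the hyperparameters $\alpha$ and $\beta$ and with document $d$ containing $N_d$ words, can be defined as follows (Ponweiser, 2012):

$$p(w, z, \theta, \phi \mid \alpha, \beta) = \prod_{k=1}^{K} p(\phi_k \mid \beta) \prod_{d=1}^{M} \left( p(\theta_d \mid \alpha) \prod_{n=1}^{N_d} p(z_{dn} \mid \theta_d)\, p(w_{dn} \mid \phi_{z_{dn}}) \right),$$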

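where $p(z_{dn} = k \mid \theta_d) = \theta_{dk}$, i.e., the probability of the topic $k$ assigned to the word (Blei et al., 2003; Ponweiser, 2012). The distribution $\theta_d$ follows a Dirichlet distribution with parameter $\alpha = (\alpha_1, \ldots, \alpha_K)$, defined as follows (Ponweiser, 2012):

$$p(\theta_d \mid \alpha) = \frac{\Gamma\left(\sum_{i=1}^{K} \alpha_i\right)}{\prod_{i=1}^{K} \Gamma(\alpha_i)} \prod_{i=1}^{K} \theta_{di}^{\alpha_i - 1}.$$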

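The distribution $\phi_k$ likewise follows a Dirichlet distribution with parameter $\beta = (\beta_1, \ldots, \beta_V)$, where $V$ is the number of distinct words in the vocabulary (Ponweiser, 2012):

$$p(\phi_k \mid \beta) = \frac{\Gamma\left(\sum_{v=1}^{V} \beta_v\right)}{\prod_{v=1}^{V} \Gamma(\beta_v)} \prod_{v=1}^{V} \phi_{k,v}^{\beta_v - 1},$$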

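where $\phi_{k,v}$ is the probability that word $v$ is chosen given that topic $k$ was chosen (Ponweiser, 2012). The marginal distribution of a document $w_d$ containing $N_d$ words is obtained by summing over all possible values of $z$ and integrating the joint distribution over all possible values of the latent $\theta$ and $\phi$ (Blei et al., 2003; Ponweiser, 2012):

$$p(w_d \mid \alpha, \beta) = \int\!\!\int p(\theta_d \mid \alpha) \prod_{k=1}^{K} p(\phi_k \mid \beta) \left( \prod_{n=1}^{N_d} \sum_{z_{dn}=1}^{K} p(z_{dn} \mid \theta_d)\, p(w_{dn} \mid \phi_{z_{dn}}) \right) d\theta_d \, d\phi.$$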

Finally, according to Blei et al. (2003) and Ponweiser (2012), the probability of the corpus $D$, comprising $M$ documents, can be expressed as the product of the marginal probabilities of all documents in $D$:

$$p(D \mid \alpha, \beta) = \prod_{d=1}^{M} p(w_d \mid \alpha, \beta).$$

Corpus preprocessing

Before identifying the topics, we preprocessed the corpus of abstracts using standard text-processing procedures. These included, among others, converting characters to ASCII, transforming all characters to lowercase, removing single digits, removing the stopwords defined in the vector stopwords("english") of the tm package in R, and removing extra spaces.
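The listed cleaning steps can be approximated in Python as follows. This is a sketch, not the study's R pipeline: `STOPWORDS` is a tiny illustrative subset rather than tm's English list, "removing single digits" is interpreted here as deleting every digit character, and tokens are split on whitespace only.

```python
import re
import unicodedata

# Tiny illustrative subset; the study used stopwords("english") from R's tm package.
STOPWORDS = {"a", "an", "and", "are", "for", "has", "in", "is", "of", "on",
             "the", "to", "we", "with"}


def preprocess(text):
    """Apply the listed cleaning steps to one abstract (sketch, not the study's code)."""
    # Convert to ASCII: decompose accented characters, then drop non-ASCII marks.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Remove digits (interpreted here as deleting every digit character).
    text = re.sub(r"\d", " ", text)
    # Remove stopwords; splitting on whitespace also collapses extra spaces.
    tokens = [token for token in text.split() if token not in STOPWORDS]
    return " ".join(tokens)


print(preprocess("The café has 3 GPT models"))  # cafe gpt models
```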

To improve the quality of the corpus, we also removed additional words that were too generic for our analysis. We identified these words based on past research and a word-frequency analysis. Specifically, we reviewed the most frequent words, because this is where an inappropriate word was most likely to distort our results. We also removed the words that formed part of the search query, as their frequency is typically very high and can significantly distort the resulting latent topics.
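The frequency-based step can be sketched as ranking tokens by count for manual review and then dropping a custom term list. The helper names and the example terms below are hypothetical; the study does not publish its list of removed words.

```python
from collections import Counter


def top_terms(docs, n=20):
    """Return the n most frequent tokens as candidates for manual review."""
    counts = Counter(token for doc in docs for token in doc.split())
    return counts.most_common(n)


def remove_terms(docs, terms):
    """Drop a custom term list (e.g. query words, overly generic words) from every doc."""
    drop = set(terms)
    return [" ".join(t for t in doc.split() if t not in drop) for doc in docs]


docs = ["chatgpt study education students",
        "chatgpt study medical answers",
        "study chatgpt students"]
print(top_terms(docs, 3))  # [('chatgpt', 3), ('study', 3), ('students', 2)]
print(remove_terms(docs, ["chatgpt", "study"]))
```

Only the ranking is automated here; deciding which frequent words are "too generic" remains a manual judgment, as in the text.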

After preprocessing, we transformed the corpus into a document-term matrix (DTM), which served as the input for LDA modeling. The original DTM had dimensions of 13,942 × 36,298. Because the number of unique words was very high, we removed low-frequency words to speed up processing, assuming that doing so would not significantly affect the results. We set the sparsity parameter to 0.999; that is, we removed words that occur in fewer than 0.1 percent of documents. After this reduction, the matrix had 3077 columns. Because four documents then had zero frequencies for all remaining words, we removed them. The resulting DTM had dimensions of 13,938 × 3077, and we performed the LDA experiments on this reduced matrix.
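The sparsity filter can be illustrated with the document-frequency rule that, to our understanding, tm's removeSparseTerms applies: a term is kept only if it appears in more than n_docs × (1 - sparse) documents. With 13,942 documents and sparse = 0.999, the threshold is about 13.942, so a term would need to appear in at least 14 documents. The pure-Python sketch below (hypothetical helper, dense list-of-lists matrix) applies this rule and then drops documents left with no terms, as described above.

```python
def remove_sparse_terms(dtm, terms, sparse):
    """Keep terms with document frequency > n_docs * (1 - sparse), then drop empty rows.

    dtm: list of document rows of term counts, columns aligned with `terms`.
    Returns the reduced matrix, the kept terms and the indices of kept documents.
    """
    n_docs = len(dtm)
    threshold = n_docs * (1 - sparse)
    doc_freq = [sum(1 for row in dtm if row[j] > 0) for j in range(len(terms))]
    keep = [j for j, df in enumerate(doc_freq) if df > threshold]
    reduced = [[row[j] for j in keep] for row in dtm]
    # Documents with no remaining terms carry no information for LDA.
    kept_docs = [i for i, row in enumerate(reduced) if any(row)]
    return [reduced[i] for i in kept_docs], [terms[j] for j in keep], kept_docs


dtm = [[1, 0, 2],
       [0, 0, 1],
       [0, 1, 0],
       [3, 0, 0]]
matrix, kept_terms, kept_docs = remove_sparse_terms(dtm, ["a", "b", "c"], sparse=0.6)
print(kept_terms, kept_docs)  # ['a', 'c'] [0, 1, 3]
```

In the toy matrix the threshold is 4 × 0.4 = 1.6, so term "b" (one document) is dropped, which empties document 2.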

Identifying latent topics

The final LDA modeling was preceded by an experimental phase. Because the number of topics is a hyperparameter, its appropriate value depends on the structure of the corpus; however, several approaches allow it to be determined experimentally. We monitored four metrics: Arun (Arun et al., 2010), Cao (Cao et al., 2009), Deveaud (Deveaud et al., 2014), and Griffiths (Griffiths & Steyvers, 2004). We normalized the metrics, inverted those that are minimized so that higher values always indicate a better fit, and then averaged them, identifying the number of topics at which this average reached its maximum. We ran experiments for k ∈ {10, 20, 30, …, 200} and additionally tested every value of k in the interval [110, 130]. Based on this procedure, we identified k = 120 as the appropriate number of topics.
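The selection rule can be sketched as follows, assuming min-max normalization (the text does not name the normalization) and treating Arun and Cao as minimized and Deveaud and Griffiths as maximized, which is how the ldatuning R package defines these four metrics. The metric values in the example are invented for illustration and are not the study's results.

```python
def select_k(ks, metrics, minimize):
    """Return the k with the highest mean of min-max scaled metrics, plus all scores.

    metrics: dict mapping metric name -> values aligned with ks.
    minimize: names of metrics where lower raw values are better.
    """
    scores = [0.0] * len(ks)
    for name, values in metrics.items():
        lo, hi = min(values), max(values)
        span = hi - lo
        for i, value in enumerate(values):
            scaled = (value - lo) / span if span else 0.0
            if name in minimize:
                scaled = 1.0 - scaled  # invert so that 1.0 always means "best"
            scores[i] += scaled / len(metrics)
    best = max(range(len(ks)), key=scores.__getitem__)
    return ks[best], scores


# Invented toy values for four candidate k, not the study's measurements.
ks = [100, 110, 120, 130]
metrics = {
    "Arun": [50.0, 45.0, 40.0, 42.0],
    "Cao": [0.10, 0.08, 0.07, 0.09],
    "Deveaud": [1.0, 1.2, 1.3, 1.1],
    "Griffiths": [-500000.0, -480000.0, -470000.0, -475000.0],
}
best_k, scores = select_k(ks, metrics, minimize={"Arun", "Cao"})
print(best_k)  # 120
```

Min-max scaling puts metrics with very different ranges (for example, Griffiths log-likelihoods versus Cao's small distances) on a common 0-1 scale before averaging.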

In both the experiments and the final LDA modeling, we used Gibbs sampling for parameter estimation with the following settings: burn-in = 0, thin = 200, and number of iterations = 2000. We replicated each experiment and the final solution five times, always retaining only the best of the five solutions. For replicability, we used the seeds 145, 227, 9, 872934, and 1315.
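Gibbs sampling for LDA is commonly implemented as collapsed Gibbs sampling (Griffiths & Steyvers, 2004), in which θ and φ are integrated out and each word's topic is resampled from its conditional distribution. The sketch below shows how burn-in, thinning, and restarts with fixed seeds fit together. It is our illustration, not the study's code: it assumes symmetric scalar priors and selects the "best" draw by the collapsed log-likelihood log p(w | z), which is one common criterion; the text does not state which criterion was used.

```python
import math
import random


def log_likelihood(nkw, nk, V, beta):
    """Collapsed log p(w | z) with the topic-word distributions integrated out."""
    ll = len(nk) * (math.lgamma(V * beta) - V * math.lgamma(beta))
    for k, row in enumerate(nkw):
        ll += sum(math.lgamma(c + beta) for c in row) - math.lgamma(nk[k] + V * beta)
    return ll


def lda_gibbs(docs, K, V, alpha, beta, iters, burnin, thin, seed):
    """Collapsed Gibbs sampler; docs are lists of word ids in range(V)."""
    rng = random.Random(seed)
    ndk = [[0] * K for _ in docs]        # topic counts per document
    nkw = [[0] * V for _ in range(K)]    # word counts per topic
    nk = [0] * K                         # total word count per topic
    z = []
    for d, doc in enumerate(docs):       # random initial topic assignments
        z_d = [rng.randrange(K) for _ in doc]
        for w, k in zip(doc, z_d):
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
        z.append(z_d)

    best_ll, best_theta = -math.inf, None
    for it in range(1, iters + 1):
        for d, doc in enumerate(docs):
            for n, w in enumerate(doc):
                k = z[d][n]              # remove the word's current assignment
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                # Full conditional p(z = j | rest), up to a constant.
                weights = [(ndk[d][j] + alpha) * (nkw[j][w] + beta) / (nk[j] + V * beta)
                           for j in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][n] = k              # record the new assignment
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
        # Skip burn-in, then inspect only every `thin`-th iteration.
        if it > burnin and (it - burnin) % thin == 0:
            ll = log_likelihood(nkw, nk, V, beta)
            if ll > best_ll:
                best_ll = ll
                best_theta = [[(ndk[d][j] + alpha) / (len(doc) + K * alpha) for j in range(K)]
                              for d, doc in enumerate(docs)]
    return best_ll, best_theta


def best_of_restarts(docs, K, V, seeds, **settings):
    """Run one chain per seed and keep the run with the highest log-likelihood."""
    runs = [lda_gibbs(docs, K, V, seed=s, **settings) for s in seeds]
    return max(runs, key=lambda run: run[0])


docs = [[0, 0, 1, 1], [2, 3, 3, 2], [0, 1, 0, 1], [2, 2, 3, 3]]
ll, theta = best_of_restarts(docs, K=2, V=4, seeds=(145, 227, 9, 872934, 1315),
                             alpha=0.1, beta=0.1, iters=50, burnin=0, thin=10)
```

With the study's settings (burnin=0, thin=200, iters=2000), each chain would be inspected at iterations 200, 400, …, 2000. A pure-Python loop like this is far too slow for 13,938 abstracts and 120 topics; it only illustrates the sampling logic.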

The final LDA modeling was then performed with k = 120 topics. The methodological framework for topic modeling is shown in Fig. 2.

Results

ChatGPT has experienced an unprecedented rise in popularity in recent years, with previous generative models failing to attract a comparable level of public interest. A key reason is its simpler and more user-friendly interface, which enables direct interaction with the model. While traditional generative tools were often limited by low reliability or a less accessible user interface, ChatGPT quickly gained traction even among non-experts.

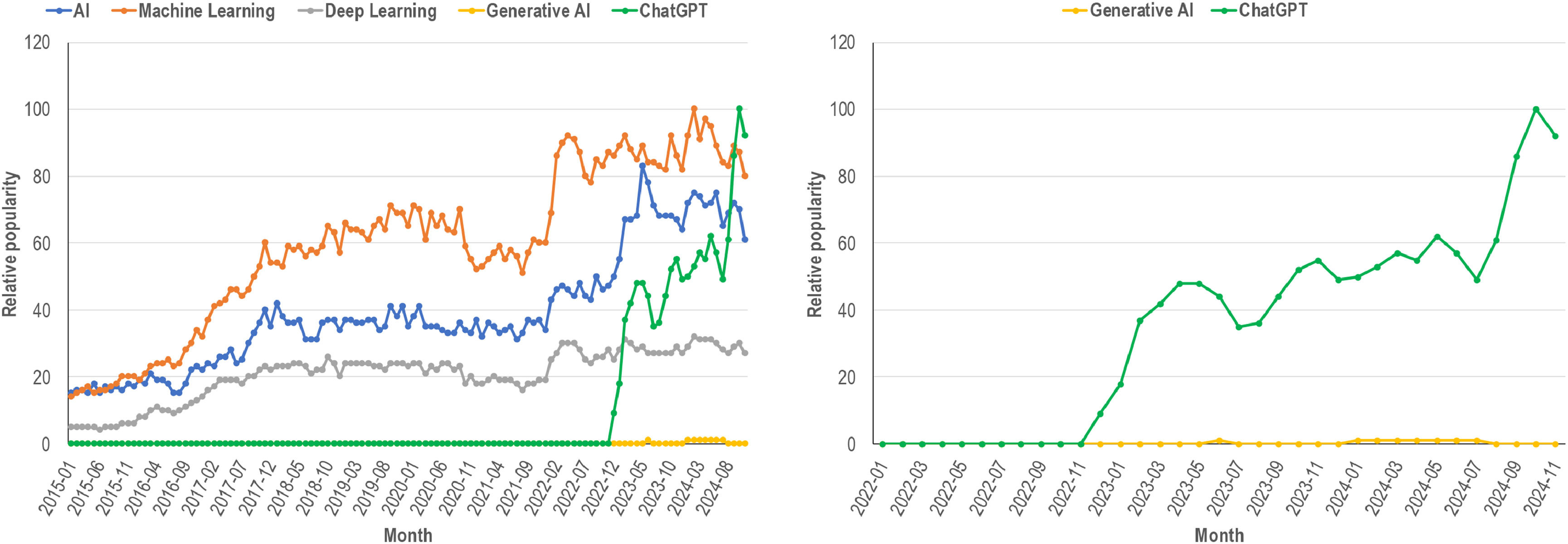

The scale of this success is also reflected in various marketing and social events, as ChatGPT has become one of the key symbols of progress in generative AI in the eyes of the general public. Compared with the traditional interest in broad concepts such as AI, machine learning, deep learning, or even generative AI itself, ChatGPT is capturing an ever larger share of attention. This growing interest can be traced using Google Trends. The results in Fig. 3 (left) show that while interest in AI, machine learning, and deep learning has been steadily increasing, interest in ChatGPT skyrocketed at the end of 2023 and now surpasses the aforementioned topics.

This trend is particularly intriguing given that interest in the broader category of generative AI (Fig. 3, right), which includes ChatGPT, has increased only negligibly. This suggests that, among the general public, ChatGPT is becoming almost synonymous with AI, potentially conflating the brand name (ChatGPT) with the broader category (generative AI).

Beyond public interest, ChatGPT has also attracted significant attention from researchers. The number of Scopus publications on AI, machine learning, deep learning, generative AI, and ChatGPT reveals notable differences, as illustrated in Fig. 4. Research in the broad fields of AI, machine learning, and deep learning has grown continuously, and these remain remarkably large research domains. Fig. 4 (left) also shows the fraction of this research dedicated to ChatGPT. This “fraction” should not be underestimated, however, as it comprises thousands of research articles focused on ChatGPT or generative AI (Fig. 4, right). Scientific interest in ChatGPT is growing much faster than interest in broader concepts such as AI, machine learning, and deep learning, which further underscores ChatGPT’s unique disruptive potential.

The difference between ChatGPT’s impact on the general public and on the scientific community can be explained by the very nature of science and research. Science typically builds on the gradual, evolutionary development of knowledge and relies on previous findings. The general public, by contrast, tends to focus on the outcomes of this research, such as the ChatGPT model itself, and often overlooks the broader context.

The development of scientific documents related to ChatGPT

Research and its publication follow specific patterns. A topic needs time to gain recognition in the scientific community, because systematic research is typically time-consuming and the peer-review process further extends the timeline. Some delay therefore occurred between ChatGPT’s introduction and its emergence in the scientific literature. By now, ChatGPT’s disruptive potential in science has become increasingly evident. Fig. 5 illustrates the evolution of the number of documents related to ChatGPT: the left side shows the annual number of documents and citations, and the right side shows their distribution across subject areas. These trends suggest that research on language models such as ChatGPT will continue to grow in significance in both academic and practical contexts.

Besides the overall growth in publications, we also analyzed the articles by thematic focus. The Scopus database includes 28 subject categories, and most sources (e.g., journals, conference proceedings, and books) are classified into one or more of them. We therefore examined the dataset of ChatGPT-related articles across disciplines to determine in which research areas it is most heavily concentrated.

As expected, most publications originate from computer science (COMP), the discipline to which language model technology is most closely tied. Social sciences (SOCI), medicine (MEDI), and engineering (ENGI) are also key research areas, each examining different aspects and potentials of language models within its own context. ChatGPT’s significance is also growing steadily in business and management (BUSI). Although this area has fewer publications than computer science or the social sciences, its strategic importance is considerable: ChatGPT provides managers with tools to enhance data analysis, support decision-making, and automate repetitive tasks, thereby improving organizational efficiency and flexibility. This increasing relevance is also reflected in the rising number of citations in management.

The concentration of citations in social sciences highlights ChatGPT’s substantial impact on the study of social and cultural aspects. While computer science maintains a strong position in the technical development of language models, medicine and management are gaining increasing attention. This suggests that ChatGPT has growing potential to influence diverse practical applications. Thus, ChatGPT is not merely an academic concept but is also establishing itself as a valuable tool in real-world scenarios.

Identification of latent topics related to ChatGPT

Based on the processed dataset of 13,942 documents, we identified the main research topics related to ChatGPT applications. The abstract of each document served as the basis for topic extraction using latent Dirichlet allocation (LDA). After applying the selected methods and optimizing the model, the optimal number of topics was 120. Each document was assigned the single topic to which its content had the highest probability of belonging, so that documents with similar term distributions were grouped into the same research area. We characterized the content of each topic by its most probable terms, as estimated by the LDA model, and named the topic after these dominant terms.
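Single-topic assignment and topic naming both reduce to simple operations on the two matrices an LDA model estimates. A minimal sketch, assuming a document-topic matrix `theta` and a topic-term matrix `phi` (conventional names, not taken from the study) and hypothetical function names:

```python
import numpy as np


def dominant_topics(theta):
    """Assign each document the topic with the highest probability.

    theta: (n_documents, n_topics) document-topic matrix; rows sum to 1.
    Ties resolve to the lowest topic index.
    """
    return np.argmax(theta, axis=1)


def top_terms(phi, vocabulary, n=5):
    """Return the n most probable terms of each topic.

    phi: (n_topics, n_terms) topic-term matrix; vocabulary: list of terms.
    """
    order = np.argsort(-phi, axis=1)[:, :n]  # term indices, most probable first
    return [[vocabulary[j] for j in row] for row in order]
```

The term lists returned by `top_terms` are the raw material from which a human analyst would choose a topic label such as "Teacher Education".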

To visualize the results, we used a multi-level circular chart, which provides an overview of all 120 topics. Each chart level presents different characteristics related to the individual topics.

Segment A: It presents key information about the topics and their interrelations. It comprises two main levels:

- Level 1 (relationships between topics) – It illustrates the intensity of the connections between individual topics through linking lines. Each line represents a relationship between two topics. Only the strongest relationships are displayed, clearly visualizing key interactions.
- Level 2 (topic numbers) – The identification numbers of the topics are shown to ensure their clear distinction. Each number represents a specific topic, arranged in a circular order around the center of the graph.

Segment B: It provides a detailed overview of topic characteristics, including two key levels:

- Level 3 (thematic areas) – It displays the thematic areas to which individual topics belong. It is visualized using proportional color blocks, with each block representing one of the seven most frequent areas (COMP – Computer Science, SOCI – Social Sciences, MEDI – Medicine, ENGI – Engineering, BUSI – Business and Management, MATH – Mathematics, and ENVI – Environmental Sciences). The composition of these blocks characterizes each topic’s internal “structure” (e.g., some topics are dominated by COMP, others by MEDI).
- Level 4 (topic name) – It outlines the topic names, defined based on the most frequent terms identified using LDA.

Segment C: It visualizes the geographical distribution of research article origins and comprises one level:

- Level 5 (continent) – It presents the proportional continental distribution of documents assigned to individual topics. Each color block represents the continent of the documents’ first authors. The distribution by continent helps identify which geographic regions contribute the most to research in a given topic.

Segment D: It provides insights into metrics related to each topic’s impact and size. It comprises two levels:

- Level 6 (CpP – citations per paper) – It displays the average number of citations per document assigned to a given topic. The CpP indicator (Madzík et al., 2024) is represented by the height of the column; a higher column indicates a higher average number of citations, reflecting a greater scientific impact of the topic.
- Level 7 (topic size) – It shows the number of documents belonging to a given topic. Topic size is visualized by the height of the colored blocks; the higher the block, the greater the number of documents in that topic.
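Levels 6 and 7 reduce to two per-topic aggregates: the document count, and the total citations divided by that count. A minimal sketch, assuming each document is available as a (topic, citation count) pair after single-topic assignment; the input format and the name `topic_metrics` are hypothetical:

```python
from collections import defaultdict


def topic_metrics(documents):
    """Compute topic size (Level 7) and citations per paper (Level 6).

    documents: iterable of (topic_id, citation_count) pairs, one per document.
    Returns {topic_id: (size, cpp)}.
    """
    counts = defaultdict(int)
    citations = defaultdict(int)
    for topic, cited_by in documents:
        counts[topic] += 1
        citations[topic] += cited_by
    return {t: (counts[t], citations[t] / counts[t]) for t in counts}
```

Because CpP is an average, a small topic with one highly cited paper can outrank a large topic, which is why size and CpP are plotted side by side.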

Fig. 6 illustrates this multi-level circular graph. It provides a clear overview of the thematic structure of research on ChatGPT applications and relationships between individual topics. It also identifies thematic areas with the highest number of publications and citations, offering deeper insights into current trends and the significance of various research fields.

The chart thus provides not only a visual overview of the research’s thematic structure but also a basis for detailed analysis of key insights from various perspectives. The following section identifies and describes the most significant findings presented in the chart.

Topics, their research impact, and research interest

The topics were named after their most frequently occurring terms (Segment B, Level 4). The full set of 120 topics was first explored at an aggregate level (Fig. 6). However, understanding the substantive differences across fields requires a closer inspection of each topic’s composition and relative prominence. Therefore, Fig. 7 zooms in on Segment B (Level 4) to show the detailed topic names alongside their subject-area membership (Level 3). Each bar in the stacked-bar view corresponds to one topic (Topic ID on the x-axis), while the color blocks indicate the proportion of documents in each subject area (COMP, SOCI, MEDI, ENGI, BUSI, ARTS, and MATH).

Building on this, Fig. 8 examines Segment D (Levels 6 and 7) by plotting each topic’s CpP (green bars) against its absolute size (pink bars). The smallest topics included T-110: Online Resources (30 documents), T-76: Selection Criteria (36 documents), and T-43: Quality Assessment (36 documents).

Meanwhile, the largest topics were T-2: Undergraduate Education (360 documents), T-14: Teacher Education (348 documents), T-9: Python Programming (293 documents), T-5: Medical Consultation (271 documents), T-17: Security Threats (262 documents), and T-11: Educational Curriculum (246 documents). The average number of documents per topic was 116.15, with a median of 99.5, a first quartile of 74.75, and a third quartile of 136.75. The ratio of the third to the first quartile was 1.83; that is, the middle half of the topics differ in size by less than a factor of two, indicating relatively modest differences in topic size.
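The summary statistics above can be reproduced from the list of 120 topic sizes with the Python standard library. A minimal sketch: the text does not state which quantile convention was used, so `method="inclusive"` (linear interpolation between order statistics, the default in R and NumPy) is an assumption, and other conventions give slightly different quartiles. The function name `size_summary` is hypothetical.

```python
import statistics


def size_summary(sizes):
    """Mean, median, quartiles, and Q3/Q1 ratio of topic sizes."""
    q1, median, q3 = statistics.quantiles(sizes, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(sizes),
        "median": median,
        "q1": q1,
        "q3": q3,
        "q3_over_q1": q3 / q1,
    }
```

Applied to the reported quartiles, the ratio is 136.75 / 74.75 ≈ 1.83.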

One of the largest thematic areas is education, particularly T-2: Undergraduate Education and T-14: Teacher Education. These topics are likely large because education is a crucial area for applying new technologies. ChatGPT offers significant potential for modernizing teaching by facilitating access to and processing of information. Teachers can use its capabilities to create content and prepare clear explanations for students. Higher education spans multiple disciplines that require flexibility and quick access to up-to-date information, which may explain why ChatGPT finds broad applications in this domain.

The topic T-9: Python Programming is also notably large, possibly because programming evolves continuously and requires extensive technical support. Both students and professionals frequently need quick, practical answers, whether for learning the basics or solving specific problems. ChatGPT’s ability to generate code and identify errors makes it highly valuable, both for beginners seeking to understand fundamental principles and for advanced users looking to streamline their workflows.

In T-5: Medical Consultation, ChatGPT can provide personalized responses to medical inquiries based on verified information, thereby helping patients more effectively navigate healthcare topics. Its ability to clearly explain complex concepts can be beneficial not only for patients but also in telemedicine, where it can serve as a support tool for quickly preparing responses or educational materials. Additionally, it can help make medical knowledge more accessible to the public, thereby promoting health awareness and disease prevention.

T-17: Security Threats is likely related to the increasing demands of cybersecurity. Digital infrastructure risks are constantly evolving, making trend analysis and threat identification crucial. ChatGPT can assist in processing large volumes of information and recognizing critical patterns that experts may overlook, making it a valuable tool for decision support and preliminary risk analysis.

Finally, T-11: Educational Curriculum suggests that ChatGPT can play a significant role in simplifying curriculum development. Designing course syllabi requires extensive time and careful adaptation to current needs. Here, ChatGPT can quickly process information and propose structured, clear content. This likely explains why this topic is highly relevant: it saves educators time while ensuring students receive well-organized, up-to-date learning materials.

Among all identified topics, eight achieved a high CpP value of 20 or more (Segment D, Level 6): T-15: Data Annotation (CpP = 92), T-28: Domain Fine-Tuning (CpP = 33), T-68: Prompt Capabilities (CpP = 29), T-56: Pretrained Transformers (CpP = 28), T-10: Exam Evaluation (CpP = 24), T-11: Educational Curriculum (CpP = 23), T-89: Plagiarism Detection (CpP = 21), and T-119: Historical View of Bots (CpP = 20). Several of them are critical for AI’s further development and applications.

T-15: Data Annotation is likely highly cited because properly annotated data are the foundation for training machine learning models; high-quality annotation is crucial for model accuracy, which makes the topic significant in both research and practice. T-28: Domain Fine-Tuning and T-56: Pretrained Transformers are likely the focus of intensive research because they enable AI models to be optimized for specific tasks. These techniques enhance the efficiency and performance of language models, making them relevant both in academia and across industries that rely on personalized solutions. T-68: Prompt Capabilities reflects interest in how to interact effectively with models like ChatGPT to obtain the most accurate and useful responses. This topic is likely cited because it improves understanding of how to structure inputs to achieve high-quality outputs, which is essential for diverse users.

T-10: Exam Evaluation likely attracts attention because of its potential to make student assessment more objective and efficient. Automating this process can save teachers time while giving students fast and consistent feedback, making it an attractive field for both research and practical implementation. T-11: Educational Curriculum, already mentioned in the context of topic size, reflects strong interest in improving curriculum design and the provision of modern educational materials. T-89: Plagiarism Detection may be popular because of the growing need to ensure academic integrity and fairness; AI’s ability to detect plagiarism helps improve transparency in both academic and professional settings. T-119: Historical View of Bots may be highly cited because it provides valuable insights into AI’s evolution. Studying earlier bots can show which technologies and approaches have been effective and how they may influence current research.
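Whether the cut-off is inclusive matters here: T-119 sits exactly at CpP = 20, so a strict "greater than 20" rule would retain only seven topics. A minimal sketch of the inclusive filter, using only the eight CpP values reported above (in the full analysis the dictionary would hold all 120 topics); the names `CPP` and `high_impact` are hypothetical:

```python
# CpP values reported in the text for the most-cited topics.
CPP = {
    "T-15: Data Annotation": 92,
    "T-28: Domain Fine-Tuning": 33,
    "T-68: Prompt Capabilities": 29,
    "T-56: Pretrained Transformers": 28,
    "T-10: Exam Evaluation": 24,
    "T-11: Educational Curriculum": 23,
    "T-89: Plagiarism Detection": 21,
    "T-119: Historical View of Bots": 20,
}


def high_impact(cpp, threshold=20):
    """Topics whose CpP is at or above the threshold, most cited first."""
    selected = [(topic, value) for topic, value in cpp.items() if value >= threshold]
    return sorted(selected, key=lambda item: item[1], reverse=True)
```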

Topics in specific subject areas

The analysis of the thematic areas (Fig. 7) reveals that individual areas are represented to varying degrees in the identified topics.

Medicine (MEDI) dominates, with a share above 50 % in a total of 18 topics. The most significant of these are T-25: Oncology and Urology (94.53 %), T-6: Surgical Procedures (90.16 %), T-4: Disease Diagnosis (84.36 %), T-1: Patient Assessment Scores (81.28 %), T-32: Radiology and Diagnostics (76.80 %), T-50: Reproducibility Issues (71.43 %), and T-3: Patient Care (70.83 %). Together with 11 other topics exceeding 60 %, these topics demonstrate that medicine is dominant not only in the number of research topics but also in its significant interdisciplinary reach. This dominance may be a consequence of ChatGPT’s high applicability in medicine.
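The dominance criterion used in this section (an area "dominates" a topic when its share of the topic's documents exceeds 50 %) can be sketched as below. This is an illustration under a stated assumption: because Scopus may index a source under several subject areas, the sketch counts one label per area per document, which is a guess at how multi-area sources were handled. The function name `dominant_area` is hypothetical.

```python
from collections import Counter


def dominant_area(area_labels, threshold=0.5):
    """Return (area, share) if one subject area exceeds the threshold share.

    area_labels: non-empty list of subject-area codes (e.g. "MEDI", "COMP")
    for the documents in one topic; a document indexed under several areas
    contributes one label per area (an assumption).
    """
    counts = Counter(area_labels)
    area, count = counts.most_common(1)[0]
    share = count / sum(counts.values())
    return (area, share) if share > threshold else None
```

The strict inequality means that an exact 50/50 split yields no dominant area, consistent with areas that "did not exceed a 50 % share".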

AI models like ChatGPT can process large volumes of complex medical data and support analysis, which is crucial in areas such as diagnostics, treatment, and patient care. Additionally, medicine often requires quick and accurate responses, whether in clinical decision-making or in producing research outputs. The possibility of using ChatGPT to streamline administration and to educate healthcare professionals may be another factor explaining the field’s dominance.

Social sciences (SOCI) are another prominent area, dominating three topics: T-14: Teacher Education (53.52 %), which reflects educational aspects, and T-96: Web Chemistry (51.35 %) and T-109: Technology Literacy (54.55 %), which focus on disseminating technological knowledge and on education through modern technologies. This suggests that ChatGPT has significant potential to improve access to education and enhance technological literacy. Topics such as teacher education and technology literacy reflect the need to adapt to digitalization and to the changing nature of education. Social sciences, which study the interaction between technology and human society, benefit from AI’s ability to enhance the understanding and integration of these technologies into everyday life. These areas may also be popular because ChatGPT’s application in education is relatively accessible and can rapidly affect society.

Engineering (ENGI) dominates only one topic, T-7: Global Forecasting (58.82 %), which emphasizes the technical aspects of predictive models and their application in research. However, this category includes not only traditional engineering disciplines but also broader fields related to technologies, industrial processes, and applied research. Because of this generic nature and broad scope, the category can absorb documents on many specific subjects, which may help it dominate topics that might otherwise be divided among multiple narrowly focused categories.

Indeed, engineering’s interdisciplinary role is crucial, especially in technically oriented research. The global forecasting topic may be popular because it involves applying predictive models to real-world problems, such as climate change or economic trends. ChatGPT can assist with rapid data analysis and result summarization, thereby facilitating the interpretation and communication of these complex predictions. While engineering does not have many standalone topics, its connection with other fields, such as data and analytics, makes it an essential part of interdisciplinary research.

Other areas, such as COMP, BUSI, ARTS, and MATH, did not exceed a 50 % share in any analyzed topic. Their contributions nevertheless play a significant supporting role in interdisciplinary research, in which they combine with the more dominant areas. For instance, MATH provides the foundation for the algorithms and models that ChatGPT uses, while COMP encompasses the development and optimization of language models. ARTS can leverage ChatGPT for creative projects, such as text generation, scriptwriting, or visual design. Meanwhile, BUSI can benefit from AI’s capabilities in improving customer experiences or predicting market trends. Although these areas do not dominate individual topics, their interdisciplinary contributions are crucial. Overall, these findings highlight the diversity of research themes related to ChatGPT applications.

Topics and their geographical distribution

Analyzing the distribution of topics from a geographical perspective (Fig. 9) provides valuable insights into regional trends and areas of scientific interest. The distribution of individual topics was assessed based on the share of documents attributed to specific continents, thereby identifying the key regions in terms of research interest. To narrow the dataset, the 95th percentile was used as the threshold: values exceeding it, which belong to the top 5 % of the examined dataset, were considered significant and interpreted further.
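The 95th-percentile filter can be sketched as follows. The text does not specify whether the percentile was computed over all topic-continent cells together or separately per continent; this sketch assumes a single threshold over all cells, and it works equally on document counts or shares. The name `significant_cells` is hypothetical, and NumPy's default linear interpolation is used for the percentile.

```python
import numpy as np


def significant_cells(values, q=95):
    """Flag topic-continent cells above the q-th percentile of all cells.

    values: 2-D array, rows = topics, columns = continents.
    Returns (threshold, [(topic_index, continent_index, value), ...]).
    """
    values = np.asarray(values, dtype=float)
    threshold = np.percentile(values, q)  # pooled over all cells (assumption)
    rows, cols = np.nonzero(values > threshold)
    cells = [(int(r), int(c), float(values[r, c])) for r, c in zip(rows, cols)]
    return threshold, cells
```

With 120 topics and six continents, a pooled threshold retains roughly the 36 largest of the 720 cells.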

Asia clearly dominates in the number of publications focused on ChatGPT, with the highest representation of topics compared to other continents. The most significant topics include T-14: Teacher Education (176 documents), T-2: Undergraduate Education (145 documents), and T-5: Medical Consultation (137 documents). Other notable topics include T-59: Sentiment Analysis (109 documents), T-11: Educational Curriculum (108 documents), and T-17: Security Threats (103 documents).

Here, T-14: Teacher Education is frequently studied in the context of using ChatGPT for lesson planning, material development, and assessment. Research also focuses on AI integration into education, enhancing teachers’ digital skills, and adapting technologies to cultural and linguistic needs (Kohnke et al., 2023). This focus may reflect efforts to address the region’s technological challenges. Research on T-2: Undergraduate Education in Asia emphasizes using ChatGPT to improve student learning, research, and assessment (Perkins, 2023). Compared with other regions, Asian research often highlights measuring ChatGPT’s effectiveness across different stages of education, such as evaluating student performance and developing curricula. Studies also explore how ChatGPT can support academic integrity and innovative learning methods, emphasizing its potential to enhance international competitiveness (Chan & Hu, 2023). T-5: Medical Consultation focuses on medical education, diagnostics, clinical decision-making, licensing exams, and medical image processing. Research often concentrates on specific areas such as geriatrics and oncology, addressing challenges like inaccuracies, ethical issues, and the need for expert oversight (Chan & Hu, 2023). These aspects may be crucial given the importance of healthcare in the region.

T-59: Sentiment Analysis covers diverse applications, including education, marketing, healthcare, and finance. In Asia, particular emphasis is placed on analyzing public opinion on social media, comparing the performance of language models across natural language processing tasks, and applying ChatGPT to less widely spoken languages. Research also explores ethical considerations, including AI regulation and its potential risks and benefits (Sudheesh et al., 2023). T-11: Educational Curriculum focuses on personalized learning, automated assessment, interactive learning, and increasing student engagement. It discusses challenges such as ethical concerns, data privacy, and academic integrity, emphasizing the importance of responsible AI usage in education and its potential to transform traditional teaching methods (Tlili et al., 2023). T-17: Security Threats investigates the risks associated with LLMs, including adversarial attacks, phishing, and malware generation. Asian research focuses on cybersecurity applications, vulnerability detection, and system protection, highlighting the ethical and privacy risks. Studies also propose mechanisms to mitigate these threats, reflecting the need for solutions in rapidly developing digital infrastructure (Pa et al., 2023).

Overall, Asia stands out for its dynamic development of technological infrastructure and its increasing focus on adapting AI to local linguistic and cultural needs. The extensive research may reflect efforts to establish technological leadership and to integrate modern tools into education and healthcare.

North America primarily applies ChatGPT in healthcare and technology. The dominant topics include T-1: Patient Assessment Scores (116 documents), T-9: Python Programming (114 documents), and T-2: Undergraduate Education (98 documents). Other significant topics are T-6: Surgical Procedures (94 documents), T-24: Student Exercises (85 documents), and T-17: Security Threats (85 documents).

T-1: Patient Assessment Scores may reflect an effort to improve public access to medical information. This is particularly relevant in the region given high healthcare costs. Studies focus on response accuracy (Yeo et al., 2023), patient material readability (Li et al., 2023), and comparisons with traditional sources such as WebMD and the Mayo Clinic (McCarthy et al., 2023). This can enhance healthcare efficiency, potentially reducing the pressure on physicians and helping patients better understand their health conditions. The high popularity of T-9: Python Programming may be related to the region’s technological orientation and innovation leadership. Discussions include automating development processes, code generation and refactoring, applications in medicine, robotics, and technology, and ethical issues related to AI misuse. This topic underscores the need for regulation and enhanced security in the technology sector (Feng et al., 2023). T-2: Undergraduate Education suggests efforts to modernize educational systems, particularly in technical and medical fields, with a focus on inclusive and effective learning. Research examines ethics, academic integrity, and support for marginalized groups (Akiba & Fraboni, 2023). This can drive changes in educational approaches to better align with labor market needs and AI developments.

Next, T-6: Surgical Procedures focuses on surgeon training, generating clinical recommendations, improving informed consent processes, and providing patient information. Comparisons with other AI tools and discussions of personalized medicine highlight efforts to enhance efficiency and safety in healthcare (Samaan et al., 2023). This indicates a stronger push for AI adoption in medicine, which requires clear ethical frameworks. T-24: Student Exercises explores ChatGPT’s integration into education, particularly in science, technology, engineering, and mathematics (STEM) fields, to support personalized and experiential learning. Challenges such as diminished critical thinking and dependence on AI are also discussed (Ding et al., 2023), suggesting a need to balance AI use with the development of students’ cognitive skills. T-17: Security Threats addresses the security risks of LLMs, including adversarial attacks, phishing, and the generation of vulnerable code. Discussions also cover their potential to enhance cybersecurity and privacy protection (Gupta et al., 2023). AI’s rapid development may necessitate robust regulatory measures and better user education to minimize the risk of misuse.

Overall, North America focuses on AI commercialization and innovative applications in technology and healthcare. The region's research dynamics may reflect its role as a global leader in technology and efforts to address practical challenges, such as high healthcare costs.

Europe ranks as the third-largest region and is characterized by diverse topics, with education and technical fields dominating. The most significant topics include T-2: Undergraduate Education (80 documents), T-14: Teacher Education (78 documents), T-9: Python Programming (72 documents), T-21: Dental Education (67 documents), and T-24: Student Exercises (63 documents), while T-11: Educational Curriculum (56 documents) also has a relatively strong presence.

T-2: Undergraduate Education has significant implications for the future of higher education in Europe. Here, ChatGPT can promote academic integrity and equal access to educational tools, reflecting European efforts to democratize technology. Personalized learning and interdisciplinary collaboration can contribute to a more efficient and equitable education system. Technological integration also influences the development of educational policy and requires responsible and ethical AI usage (von Garrel & Mayer, 2023). T-14: Teacher Education explores the use of ChatGPT in teacher training and suggests the need to rethink pedagogical methods in light of new requirements for digital competency and critical thinking. The focus is on integrating AI into language and STEM education to support the development of 21st-century skills (Küchemann et al., 2023). T-9: Python Programming reflects the growing demand for technological skills, particularly in programming. Using generative AI for programming education and code analysis has implications for preparing students for the labor market. Moreover, standards and frameworks are needed to evaluate AI-generated content and to ensure its accuracy, security, and ethical responsibility, which is crucial in the European regulatory environment (Hellas et al., 2023).

Overall, Europe emphasizes ethical regulation and responsible technology use, shaped by frameworks such as the General Data Protection Regulation (GDPR). Efforts to democratize technology reflect the values of inclusion, equity, and interdisciplinary collaboration.

Notably, the remaining regions of Africa, South America, and Australia (Oceania) exhibit significantly lower publication volumes than Asia, North America, and Europe. We therefore focus on the three most dominant topics in each of these regions, including topics tied for third place. Each topic reflects specific regional challenges, particularly in education and technology adoption.

In Africa, the dominant topics include T-11: Educational Curriculum (15 documents), T-24: Student Exercises (13 documents), T-2: Undergraduate Education (11 documents), and T-14: Teacher Education (11 documents).

T-11: Educational Curriculum highlights the significance of ChatGPT in personalized education. Discussions revolve around plagiarism, technological dependence, and ethical challenges, particularly in developing countries where digital transformation can improve access to education (Mhlanga, 2023). T-24: Student Exercises emphasizes ChatGPT’s potential in distance learning, writing support, and interactive learning. This can be especially relevant in Africa for regions with limited educational capacity (Sevnarayan & Potter, 2024). T-2: Undergraduate Education explores how ChatGPT enhances critical thinking and academic skills, ultimately improving education quality and preparing students for local challenges (Essel et al., 2024). T-14: Teacher Education demonstrates ChatGPT’s role in lesson planning and instruction, increasing accessibility, and improving education quality.

A distinctive feature of Africa is its focus on addressing practical educational challenges, likely driven by infrastructure limitations and a shortage of qualified teachers. Digital technologies such as ChatGPT can help in overcoming these barriers and improving access to education.

South America, like Africa, is among the regions with the lowest number of scientific publications. This may be due to limited research resources or infrastructure. However, publications concentrate on topics like education and technology, likely reflecting the region’s challenges. The most significant topic is T-2: Undergraduate Education (13 documents), followed by T-29: Writing and Revision (10 documents) and T-24: Student Exercises (9 documents).

T-2: Undergraduate Education emphasizes ChatGPT’s use to enhance critical thinking and academic performance. Its benefits include supporting educational material development and interdisciplinary collaboration, which improves teaching quality and fosters innovation (Grágeda et al., 2023). T-29: Writing and Revision focuses on improving academic writing, particularly for non-native English-speaking authors, with an emphasis on plagiarism detection and maintaining originality (Giglio & da Costa, 2023). T-24: Student Exercises examines AI integration into education, highlighting personalized learning, instant feedback, and the risks of academic dishonesty (Mosaiyebzadeh et al., 2023).

Overall, South American research strongly emphasizes social inclusion and reducing regional disparities through improved education quality. The focus on interdisciplinary collaboration and innovation suggests a growing recognition of technology’s role in infrastructure development.

Australia and Oceania are among the regions with the lowest number of publications. However, healthcare and education remain key research areas. The most significant topics include T-6: Surgical Procedures (16 documents), T-2: Undergraduate Education (13 documents), T-11: Educational Curriculum (12 documents), and T-17: Security Threats (12 documents). Despite the lower research output, Australia and Oceania focus on specific high-impact topics, reflecting efforts to innovate and adapt to modern technologies.

T-6: Surgical Procedures investigates the use of AI, such as ChatGPT, in surgical training, procedure planning, and medical record generation while emphasizing accuracy, regulatory compliance, and the need for further research (Xie et al., 2023). T-2: Undergraduate Education focuses on personalized learning and overcoming barriers, highlighting student isolation and the need for ethical frameworks (Sullivan et al., 2023). T-11: Educational Curriculum emphasizes AI integration into curricula, supporting critical thinking, creativity, and data protection (Gašević et al., 2023). T-17: Security Threats addresses cybersecurity, vulnerability detection, and misinformation prevention, advocating ethical AI implementation (Al-Hawawreh et al., 2023). These topics reflect the region's technological preparedness and its focus on improving quality of life through innovation.

The region places a strong emphasis on effective technology use to enhance the quality of life, particularly in healthcare and education. AI innovations are crucial in this region to overcome geographical barriers and improve access to services in rural and remote areas.
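The regional rankings discussed here rest on counting documents per topic within each region. The sketch below shows one way such a ranking could be produced. It assumes, as a hypothetical simplification the text does not specify, that each publication is assigned to one region and to one dominant LDA topic. The toy records merely reproduce the counts reported for South America and for Australia and Oceania, and the helper name `top_topics` is illustrative.

```python
from collections import Counter

def top_topics(records, region, k=3):
    """Rank topics in one region by the number of documents assigned to them.

    records: iterable of (region, topic_label) pairs, one per document
    (hypothetical format: one dominant topic per document).
    """
    counts = Counter(topic for reg, topic in records if reg == region)
    # Sort by document count (descending); break ties by topic number, e.g. "T-11" -> 11
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], int(kv[0].split("-")[1])))
    return ranked[:k]

# Toy records that reproduce only the counts reported in the text
REPORTED = {
    "South America": {"T-2": 13, "T-29": 10, "T-24": 9},
    "Australia and Oceania": {"T-6": 16, "T-2": 13, "T-11": 12, "T-17": 12},
}
records = [(region, topic)
           for region, topics in REPORTED.items()
           for topic, n in topics.items()
           for _ in range(n)]

print(top_topics(records, "South America"))
# -> [('T-2', 13), ('T-29', 10), ('T-24', 9)]
print(top_topics(records, "Australia and Oceania", k=4))
# -> [('T-6', 16), ('T-2', 13), ('T-11', 12), ('T-17', 12)]
```

The tie-break by topic number is an arbitrary but deterministic choice; it keeps T-11 ahead of T-17, matching the order in which the text lists them.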

Table 1 presents each region’s most significant research topics, reflecting their geographical focus and priority research areas in the context of ChatGPT applications.

Table 1. The most important topics and their continental distribution.

Fig. 10 displays the interrelations between the topics identified by the LDA model in the graph's inner circle. Of the 20 strongest connections, the two most prominent are discussed here. These links can be considered marginal, as individual topics share only a low degree of concepts or objectives; nevertheless, some topics inevitably overlap. The connection between Technical Projects (T-116) and User Interaction (T-27), for instance, may reflect efforts to incorporate user experience into project implementation, making technical solutions more human-centered. Developers can thus draw on user feedback to adapt products and services to real-world needs. Similarly, the relationship between Safety Summarization (T-71) and Quality Assessment (T-43) suggests that a focus on safety naturally aligns with quality evaluation: preventive measures and risk summaries require subsequent assessment to determine whether they meet established standards and quality requirements. Although these correlations are weak, they indicate partial thematic connections and shared elements in ChatGPT application research.
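The text does not state how the link strengths in Fig. 10 were computed. One common option, assumed here purely for illustration, is to correlate topic proportions across documents in the LDA document-topic matrix and rank topic pairs by that correlation. The matrix and topic labels below are toy values, not study data, and `strongest_topic_links` is a hypothetical helper.

```python
import numpy as np

def strongest_topic_links(theta, labels, top_n=20):
    """Rank topic pairs by the Pearson correlation of their document proportions.

    theta: (n_documents, n_topics) document-topic matrix from an LDA model.
    Assumption: link strength = correlation across documents (illustrative only).
    """
    corr = np.corrcoef(theta, rowvar=False)            # (n_topics, n_topics)
    rows, cols = np.triu_indices(corr.shape[0], k=1)   # each unordered pair once
    strengths = corr[rows, cols]
    order = np.argsort(strengths)[::-1][:top_n]        # strongest first
    return [(labels[rows[o]], labels[cols[o]], float(strengths[o])) for o in order]

# Toy document-topic proportions (each row sums to 1); not data from the study
theta = np.array([
    [0.4, 0.4, 0.1, 0.1],
    [0.2, 0.2, 0.5, 0.1],
    [0.1, 0.1, 0.2, 0.6],
])
labels = ["topic_a", "topic_b", "topic_c", "topic_d"]

links = strongest_topic_links(theta, labels, top_n=20)
for a, b, r in links[:2]:  # keep only the two most prominent links
    print(f"{a} <-> {b}: r = {r:.2f}")
```

`np.corrcoef` yields NaN for a topic whose proportion is constant across documents, so such topics would need to be filtered out before ranking.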

Conceptual model of ChatGPT‑driven value creation across the socio‑technical stack

Starting with an LDA extraction of 120 research topics on ChatGPT and related generative-AI work, we grouped the topics into nine thematic clusters according to semantic proximity and domain affinity (e.g., Healthcare & Biomedical Applications, Core AI & Computational Methods, and Ethics & Governance). The three largest topics of each cluster are shown in its block. These clusters served as the building blocks for a higher-order conceptual architecture. We then interlaced this architecture with four well-established theoretical lenses, namely DSR (Bonnet & Teuteberg, 2025), STS (Kikuchi et al., 2024), UTAUT/TAM (Budhathoki et al., 2024), and DoI (Raman et al., 2024), to obtain a single, integrative model that explains how value is created, validated, and diffused in the contemporary ChatGPT research landscape.

Fig. 11 visualizes the outcome: A four-lane pipeline that traces the left-to-right progression from raw technological possibility to domain-level impact, while retaining crucial feedback cycles. The directional links reflect empirically derived relationships among the nine clusters; they enable the model to function as a dynamic roadmap rather than a static taxonomy.

The first lane (Innovation Feedstock) is the entry point and contains the Emerging Technologies & Future Capabilities (ET) cluster. This cluster is the “idea reservoir” where disruptive architectures, novel sensors, and fresh data sources originate. One flow shows that ET supplies the data foundations for Data Engineering & Knowledge Representation (DK); another indicates that ET enables novel models in Core AI & Computational Methods (AI). Conceptually, the lane corresponds to DSR's problem-identification phase and sets the stage for subsequent engineering work.

The second lane (Algorithmic Manufacturing Line) is an engineering factory formed by DK, AI, and Evaluation, Benchmarking, & Validation (EV). DK curates and structures the data that feed model training. AI performs learning and inference but requires validation through EV, which in turn builds user confidence for Human–AI Interaction (HI). Within DSR, DK and AI correspond to the build cycle and EV to the evaluate cycle; this tight loop exemplifies the STS claim that artefacts and quality regimes co-evolve.

Technical adequacy does not guarantee adoption. Lane 3 (Perception & Compliance Gateway) therefore mediates between computational performance and social legitimacy. HI studies how users perceive and interact with ChatGPT interfaces, while Ethics, Security, & Societal Governance (EG) formalizes the normative constraints. The flow from HI to EG shows that user perceptions shape normative rules, translating empirical trust findings into policy discourse. Conversely, EG sets the governance conditions for the application clusters. The lane embodies UTAUT/TAM constructs (performance expectancy, effort expectancy, and facilitating conditions) and anchors ethical principles in concrete design features.

The right-most lane (Domain Adoption & Impact Zone) hosts Healthcare (HC), Industry (IN), and Education (ED), the sectors in which ChatGPT delivers tangible value. HI facilitates adoption within these domains, while EG ensures regulatory compliance. Once deployed, the domains feed requirements back to AI and enrich the datasets of DK, thereby closing the loop and pushing forward the frontier of ET inputs. In DoI terminology, the lane represents the implementation and confirmation stages of innovation diffusion.

The model unifies the four lanes into a closed socio-technical circuit that simultaneously expresses:

- Forward momentum (left → right): how the innovation feedstock is refined into validated artefacts, filtered through the perception-and-compliance gateway, and ultimately embedded in specific domains.
- Cyclic momentum (right → left): how real-world deployment regenerates new data and problem statements, thereby fueling continuous improvement.
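To make the forward and cyclic momentum concrete, the sketch below encodes the nine clusters and the flows named in the lane descriptions as a directed graph and classifies each link by the lanes it connects. The edge list is our reading of the prose, not an official specification of Fig. 11, and the labels “forward”, “within-lane”, and “cyclic” are assumptions introduced for illustration.

```python
from collections import deque

# Lane membership of the nine clusters, as described in the text
LANE = {"ET": 1, "DK": 2, "AI": 2, "EV": 2, "HI": 3, "EG": 3,
        "HC": 4, "IN": 4, "ED": 4}
DOMAINS = ("HC", "IN", "ED")

# Directed flows as read from the lane descriptions (our interpretation, not Fig. 11 itself)
EDGES = [("ET", "DK"), ("ET", "AI"),   # Lane 1 feeds Lane 2
         ("DK", "AI"), ("AI", "EV"),   # build and evaluate inside Lane 2
         ("EV", "HI"), ("HI", "EG")]   # validation builds confidence; HI shapes norms
EDGES += [(src, d) for src in ("HI", "EG") for d in DOMAINS]        # adoption and governance
EDGES += [(d, tgt) for d in DOMAINS for tgt in ("AI", "DK", "ET")]  # feedback from deployment

def classify(edge):
    """Label an edge by the direction in which it crosses lanes."""
    src, tgt = edge
    step = LANE[tgt] - LANE[src]
    if step > 0:
        return "forward"
    if step < 0:
        return "cyclic"
    return "within-lane"

def reachable(start, edges):
    """Breadth-first search: all clusters reachable from `start`."""
    adjacency = {}
    for src, tgt in edges:
        adjacency.setdefault(src, []).append(tgt)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

counts = {}
for edge in EDGES:
    kind = classify(edge)
    counts[kind] = counts.get(kind, 0) + 1
print(counts)  # -> {'forward': 9, 'within-lane': 3, 'cyclic': 9}
print(reachable("ET", EDGES) == set(LANE), "ET" in reachable("HC", EDGES))  # -> True True
```

Under this reading, every cluster is reachable from ET, and the domain clusters route back to ET, which is what makes the circuit closed rather than a one-way pipeline.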

By nesting the nine topic clusters within DSR, STS, UTAUT/TAM, and DoI, the framework explains why certain bottlenecks appear (e.g., data quality, explainability, and regulation) and how they can be strategically addressed. For practitioners, it pinpoints leverage points. For instance, investing in transparent interfaces (Lane 3) or robust evaluation pipelines (Lane 2) accelerates diffusion (Lane 4). For researchers, it provides a comprehensive map of current knowledge in ChatGPT research, linking granular topical evidence to overarching theoretical constructs. Thus, the model offers both explanatory depth and actionable guidance, capturing the state of the art in ChatGPT research and charting a path for future inquiry.

Discussion

Theoretical implications

Generative AI, exemplified by ChatGPT, provides fundamental impulses for the theoretical frameworks of various disciplines and calls for a reexamination of existing paradigms. Our findings, which identified diverse topics (e.g., T-2: Undergraduate Education, T-14: Teacher Education, T-5: Medical Consultation, T-17: Security Threats, and T-9: Python Programming), confirm that ChatGPT extends into fields ranging from management and medicine to education and scientific research (Dwivedi et al., 2023; Deng et al., 2025; Lo, 2023). This wide-ranging reach compels disciplines to fuse technical, social, and economic insights into a single analytical lens (Ma, 2024; Ray, 2023).

According to extant research, the intertwining of technological, ethical, and social layers is more pronounced than anticipated (Dwivedi et al., 2023; Bahroun et al., 2023). Theoretically, this implies that the social sciences must consider the technical specifics of LLMs, while computer science and engineering cannot disregard human interaction and ethical implications (Wamba et al., 2024; Carvalho & Ivanov, 2024). The prevalence of medical topics (e.g., T-1: Patient Assessment Scores, T-4: Disease Diagnosis, and T-6: Surgical Procedures) illustrates how models like ChatGPT may influence healthcare processes in ways that traditional medical theories have not fully accounted for (Sallam, 2023; Tan et al., 2024).

Our nine-cluster model pinpoints actionable leverage points for developers, regulators, and sector specialists. Technically, the concentration of topics T-9 (Python Programming) and T-80 (Graph Ontology) in the DK cluster signals an urgent need for domain-specific data contracts and ontological alignment before fine-tuning ChatGPT models, particularly in safety-critical fields such as T-5 (Medical Consultation). From a regulatory perspective, the convergence of T-17 (Security Threats) and T-108 (Legal Logic) within the EG cluster implies that model cards and continuous evaluation reports should become mandatory artefacts, mirroring pharmacovigilance in drug approval. Pedagogically, the pairing of T-12 (Prompt Design) with T-11 (Educational Curriculum) highlights a shift from content delivery to prompt-engineering literacy as a core graduate attribute. Finally, sector-specific patterns, such as T-54 (Product Manufacturing) co-occurring with T-81 (Workflow Optimization), suggest that firms should treat ChatGPT not only as an automation tool but also as a collaborative process co-designer that restructures value chains rather than merely accelerating existing tasks.

Meanwhile, the process of creating scientific knowledge is transforming alongside a redefinition of concepts such as creativity and authorship (Suriano et al., 2025; Alfiras et al., 2024; Song et al., 2024). In the humanities and social sciences, text originality, data interpretation, and argumentation have formed the basis of scholarly work. However, since ChatGPT can process and synthesize vast amounts of information, it raises questions about the boundary between human and machine contributions to outcomes (Grassini, 2023). Issues of responsibility and trust in AI-generated content also arise (Heung & Chiu, 2025). Consequently, ChatGPT evolves from a mere tool into a co-authoring agent, challenging long-standing assumptions about the origins and legitimacy of scholarly output (Lo, 2023; Ali et al., 2024).

The four-lane architecture derived from the nine clusters also extends existing theories. First, by placing an evaluation stage (EV) between technical development and user acceptance, the model complements TAM/UTAUT with a new construct, evaluation readiness, that moderates the path from perceived usefulness to behavioral intention. Second, the STS perspective is enriched through an explicit Perception & Compliance Gateway, which demonstrates that ethical and governance factors (EG) are not exogenous constraints but endogenous co-producers of system functionality alongside HI. Third, DoI theory gains granularity: Our Domain Adoption lane highlights sector-specific diffusion velocities, driven by cluster-level topic densities (e.g., HC versus IN). Together, these insights suggest a multi-layered “ChatGPT Adoption Framework” in which technical quality, evaluative transparency, and normative alignment follow a sequential yet iterative logic, offering a refined theoretical lens for future empirical testing.

From an operations research perspective, our four-lane architecture operates as a closed-loop multi-stage flow shop: Lane 1 acts as a stochastic input stream, Lane 2 as a transformation line with in-process inspection stations (EV), Lane 3 as a reliability gate, and Lane 4 as a service subsystem equipped with feedback channels. Evaluation readiness functions as an inter-stage buffer that limits rework downtime, whereas endogenous ethics extends classic quality-control theory in operations research by adding normative parameters. This mapping yields a clear theoretical contribution: The model lends itself to the formal queueing and discrete-event simulation analyses widely used in operations research.
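As a first step toward such simulation analyses, the routing logic of the flow shop can be sketched as a seeded Monte Carlo model. This is not a full discrete-event simulation (it ignores arrival times, service times, and queue lengths), and all probabilities, the rework limit, and the function name `simulate` are hypothetical placeholders rather than estimates from the study.

```python
import random
from dataclasses import dataclass

@dataclass
class FlowStats:
    processed: int = 0         # artefacts that entered Lane 2
    deployed: int = 0          # reached Lane 4
    rejected_at_gate: int = 0  # stopped by the Lane 3 reliability gate
    scrapped: int = 0          # failed EV inspection even after rework
    rework_passes: int = 0     # extra passes through Lane 2
    feedback_items: int = 0    # new inputs regenerated by Lane 4

def simulate(n_inputs, p_pass_ev=0.7, max_rework=2, p_pass_gate=0.8,
             p_feedback=0.3, seed=0, max_items=100_000):
    """Route artefacts through the four lanes; all parameters are hypothetical."""
    rng = random.Random(seed)  # seeded for reproducible runs
    stats = FlowStats()
    pending = n_inputs         # Lane 1: input stream (here a fixed initial batch)
    while pending > 0 and stats.processed < max_items:
        pending -= 1
        stats.processed += 1
        # Lane 2: build, then inspect at EV; failures are reworked up to max_rework times
        passed_ev = False
        for attempt in range(max_rework + 1):
            if attempt > 0:
                stats.rework_passes += 1
            if rng.random() < p_pass_ev:
                passed_ev = True
                break
        if not passed_ev:
            stats.scrapped += 1
            continue
        # Lane 3: perception-and-compliance gate
        if rng.random() >= p_pass_gate:
            stats.rejected_at_gate += 1
            continue
        # Lane 4: deployment, which may regenerate a new input for Lane 1
        stats.deployed += 1
        if rng.random() < p_feedback:
            stats.feedback_items += 1
            pending += 1
    return stats

result = simulate(1_000)
print(result)
```

Because every processed artefact ends in exactly one of the deployed, rejected, or scrapped states, the counts can be checked for conservation. Genuine queueing analysis would require adding arrival and service times, for example with a discrete-event library such as SimPy.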

Within the education sector, which includes topics such as T-2 (Undergraduate Education), T-14 (Teacher Education), and T-11 (Educational Curriculum), classic pedagogical models need to be reconsidered. Textbooks and instructors are no longer the sole sources of content, as generative AI can produce, personalize, and, in some cases, even evaluate educational materials (Kohnke et al., 2023; Montenegro-Rueda et al., 2023). While multiple studies demonstrate the effectiveness of this learning approach, they also highlight ethical risks, potential plagiarism, and possible student dependency on intelligent tools (Sallam, 2023; Heung & Chiu, 2025). Pedagogical theories must therefore expand to encompass new concepts of human–AI collaboration, the development of critical thinking, and refined assessment methods (Song et al., 2024). Topics such as T-10 (Exam Evaluation) and T-89 (Plagiarism Detection) demonstrate how profoundly academic integrity and the authenticity of student work are changing (Suriano et al., 2025).

Another key shift is evident in technology adoption theories. Traditional models such as TAM, UTAUT, and DoI have focused on factors like usefulness, ease of use, and social pressure (Wamba et al., 2023; Paul et al., 2023). However, with ChatGPT, questions of trust, ethics, and potential manipulation gain prominence (Tan et al., 2025; Gande et al., 2024). Topics T-17 (Security Threats), T-15 (Data Annotation), and T-28 (Domain Fine-Tuning) indicate that data quality, model fine-tuning for local conditions, and preventive measures against misuse significantly influence whether and how AI is incorporated into everyday practice (Wang et al., 2023; Khan et al., 2024). Meanwhile, clearer legislative and ethical frameworks are increasingly needed to define the limits of human and machine responsibility (Neha et al., 2024).