Background/Objective: Criteria-Based Content Analysis (CBCA) is the most widely used tool worldwide for evaluating the veracity of testimony. CBCA was initially designed to evaluate the testimony of child victims of sexual abuse, and in that context it has been empirically validated. It has since been generalized to adult populations and other contexts, although this generalization has not been endorsed by the scientific literature. Method: A meta-analysis was therefore performed to test the Undeutsch Hypothesis and the CBCA checklist of criteria for discriminating, in adults, between memories of self-experienced real-life events and fabricated or fictitious memories. Results: The findings corroborated the Undeutsch Hypothesis and supported CBCA as a valid technique, but they were not generalizable, and the 'self-deprecation' and 'pardoning the perpetrator' criteria failed to discriminate between the two types of memory. The technique can be complemented with additional reality criteria. The study of moderators revealed that discriminating efficacy was significantly higher in field studies on sexual offences and intimate partner violence. Conclusions: The findings are discussed in terms of their implications, as well as the limitations and conditions for applying these results in forensic settings.

The credibility of testimony, primarily the victim's and particularly for crimes committed in private (e.g., sexual offenses, domestic violence), is the key element determining legal judgements (Novo & Seijo, 2010), affecting an estimated 85% of cases worldwide (Hans & Vidmar, 1986). Although an array of tools for evaluating credibility have been designed and tested (Vrij, 2008), Criteria-Based Content Analysis [CBCA] (Steller & Köhnken, 1989) remains the technique of choice, enjoys wide acceptance in the scientific community (Amado, Arce, & Fariña, 2015), and is admissible as valid evidence in the law courts of several countries (Steller & Böhm, 2006; Vrij, 2008). Although the technique was initially designed for the testimony of child victims of sexual abuse, Forensic Psychology Institutes have extended its application in judicial proceedings to adults, witnesses, offenders, and other case types (Arce & Fariña, 2012). The meta-analysis of Amado et al. (2015) found that the Undeutsch Hypothesis (Undeutsch, 1967), which underpins the technique and contends that memories of self-experienced events differ in content and quality from memories of fabricated or fictitious accounts, was equally valid in other contexts and in age ranges up to 18 years. Prior to the present review, empirical studies had already tested the validity of the Hypothesis in adult populations and in different contexts (Vrij, 2005, 2008). Moreover, because the Hypothesis is grounded in memory content, it had been argued on theoretical grounds that it should apply equally to adults and to contexts other than sexual abuse (Berliner & Conte, 1993).

CBCA consists of 19 reality criteria grouped into two factors, cognitive (criteria 1 to 13) and motivational (criteria 14 to 18), plus an offence-specific criterion (19, details characteristic of the offence). According to the original formulation, both factors are underpinned by the Undeutsch Hypothesis, but Raskin, Esplin, and Horowitz (1991) argued that only 14 criteria conform to the Hypothesis (the 14-criteria version).
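
To make the structure concrete, the checklist can be represented as a lookup from criterion number to name and factor. This is a minimal sketch: the names follow the row labels of Table 2, the 'offence-specific' label for criterion 19 and the presence-count scoring in the hypothetical `total_cbca_score` helper are assumptions (primary studies differ in how they score criteria, e.g., presence/absence vs. graded ratings), and the membership of the 14-criteria version is not encoded because it is not listed here.

```python
from typing import Dict, Iterable, List, Tuple

# Criterion number -> (name, factor). Names follow the row labels of Table 2.
# Factors follow the text (1-13 cognitive, 14-18 motivational); the
# "offence-specific" label for criterion 19 is an assumption of this sketch.
CRITERIA: Dict[int, Tuple[str, str]] = {
    1: ("Logical structure", "cognitive"),
    2: ("Unstructured production", "cognitive"),
    3: ("Quantity of details", "cognitive"),
    4: ("Contextual embedding", "cognitive"),
    5: ("Description of interactions", "cognitive"),
    6: ("Reproduction of conversations", "cognitive"),
    7: ("Unexpected complications", "cognitive"),
    8: ("Unusual details", "cognitive"),
    9: ("Superfluous details", "cognitive"),
    10: ("Details misunderstood", "cognitive"),
    11: ("External associations", "cognitive"),
    12: ("Subjective mental state", "cognitive"),
    13: ("Perpetrator's mental state", "cognitive"),
    14: ("Spontaneous corrections", "motivational"),
    15: ("Admitting lack of memory", "motivational"),
    16: ("Raising doubts about one's testimony", "motivational"),
    17: ("Self-deprecation", "motivational"),
    18: ("Pardoning the perpetrator", "motivational"),
    19: ("Details characteristic of the offence", "offence-specific"),
}


def criteria_by_factor(factor: str) -> List[int]:
    """Return the numbers of the criteria that belong to one factor."""
    return [num for num, (_name, f) in CRITERIA.items() if f == factor]


def total_cbca_score(present: Iterable[int]) -> int:
    """Hypothetical presence/absence scoring: count distinct known criteria."""
    return len({c for c in present if c in CRITERIA})


print(len(criteria_by_factor("cognitive")))  # 13
print(total_cbca_score([1, 3, 6, 15]))       # 4
```

Counting distinct criterion numbers means a criterion coded twice in the same statement is scored once.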

CBCA has been supplemented with additional categories, some applicable to all contexts (Table 1; Höfer, Köhnken, Hanewinkel, & Bruhn, 1993) and others to specific case types (Arce & Fariña, 2009; Juárez, Mateu, & Sala, 2007; Volbert & Steller, 2014); these may be combined with techniques grounded in other theoretical frameworks, such as memory attributes (Vrij, 2008).

Table 1. Additional criteria (Höfer et al., 1993).

| Criterion | Definition |
|---|---|
| Reporting style | Long-winded: the interviewee describes irrelevant aspects that were not asked about. |
| Display of insecurities | Uncertainty about the description of an item. |
| Providing reasons for lack of memory | The interviewee gives reasons for being unable to provide a detailed description. |
| Clichés | Expressions or utterances that introduce delays into the report. |
| Repetitions | Elements already described are repeated without additional details. |

CBCA is used extensively in forensic practice to discriminate between adults' memories of self-experienced and fabricated events across different case types. However, given the numerous inconsistencies in the literature (e.g., designs that fail to meet the requirements for applying CBCA, and conclusions of non-significant effects that the data cannot support because of low statistical power, 1 − β < .80) and the contradictory use of CBCA with adults, a meta-analysis was performed to assess the Undeutsch Hypothesis in an adult population; the discriminating efficacy of CBCA and of additional reality criteria; and the moderating effects of context (case type), lie coaching, witness status, and research paradigm.
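
The 1 − β < .80 threshold can be made concrete with a quick power calculation. The sketch below uses the normal approximation for a two-sided, two-sample comparison with equal group sizes; this is an assumption for illustration (exact power uses the noncentral t distribution and is slightly lower in small samples), and `n_per_group` is a hypothetical parameter name.

```python
from statistics import NormalDist


def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample test for effect size d.

    Normal approximation with noncentrality d * sqrt(n / 2); both rejection
    tails are included. Assumes equal group sizes.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    ncp = abs(d) * (n_per_group / 2) ** 0.5
    return (1 - z.cdf(z_crit - ncp)) + z.cdf(-z_crit - ncp)


# Classic benchmark: d = 0.5 needs about 64 statements per group for power ~ .80.
print(round(approx_power(0.5, 64), 2))  # 0.81
```

With d = 0 the function returns α, which is a convenient sanity check.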

Method

Literature search

An extensive search of the scientific literature was undertaken to identify empirical studies applying content analysis to adult testimony in order to discriminate between self-experienced and fabricated statements, whether deliberately invented or implanted memories. The search followed a multimethod approach: meta-search engines (Google, Google Scholar, Yahoo); leading scientific databases (PsycInfo, MedLine, Web of Science, Dissertation Abstracts International); academic social networks for the exchange of knowledge within the scientific community (i.e., ResearchGate, Academia.edu); the ancestry approach (cross-checking the bibliographies of the selected studies); and contacting researchers to request unpublished studies cited in published work. A list of descriptors was generated by successive approximations (i.e., descriptors drawn from the keywords of the selected articles were added): reality criteria, content analysis, verbal cues, verbal indicators, testimony, CBCA, Criteria Based Content Analysis, credibility, adult, statement, allegation, deception, detection, lie detection, truthful account, Statement Validity Assessment, SVA. These descriptors were used to formulate the search algorithms applied in the literature search.
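
One way to turn the descriptor list into search algorithms is to group descriptors into concept blocks, join synonyms with OR and join blocks with AND. The grouping below is hypothetical (the exact search strings are not reported), and the generic Boolean syntax with quoted phrases would need adapting to each database's own query language.

```python
from typing import List

# Hypothetical concept blocks built from the descriptors listed above.
TECHNIQUE_TERMS = [
    "reality criteria", "content analysis", "CBCA",
    "Criteria Based Content Analysis", "Statement Validity Assessment", "SVA",
    "verbal cues", "verbal indicators",
]
TARGET_TERMS = [
    "credibility", "deception", "detection", "lie detection",
    "truthful account", "testimony", "statement", "allegation",
]
POPULATION_TERMS = ["adult"]


def or_block(terms: List[str]) -> str:
    """Quote multi-word phrases and join synonyms with OR."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*blocks: List[str]) -> str:
    """Combine concept blocks with AND."""
    return " AND ".join(or_block(b) for b in blocks)


query = build_query(TECHNIQUE_TERMS, TARGET_TERMS, POPULATION_TERMS)
print(query)
```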

Inclusion and exclusion criteria

Although reality criteria are mainly applied in judicial contexts to establish whether a victim's testimony can be admitted as valid evidence, the literature shows that they have also been applied to witnesses and offenders; both populations were included because the number of studies was sufficient for a meta-analysis. In the judicial context, adulthood is associated with the age of 18, and the vast majority of studies used this legal age; a few studies, however, set it at 17. Since this age difference has no effect on the capacity to give testimony on either cognitive or legal grounds, studies with 17-year-old participants were included. The inclusion criterion for primary studies was that they reported the effect sizes of the reality criteria analysed for discriminating between truthful and fabricated statements or, failing that, the statistical data needed to compute them; studies with errors in data analysis were included provided the effect sizes could still be computed.

Studies were excluded when the data derived from a unit of analysis other than the statement, or when two CBCA criteria were merged into a new criterion (violating the mutual exclusivity requirement for methodical categorical systems). For the additional criteria, data not formulated as additions to CBCA, or specific to a single context, were excluded. Likewise, duplicate publications of the same data were eliminated, but piecemeal publications (reporting independent data) were retained.

Finally, 39 primary studies fulfilling the inclusion and exclusion criteria were selected. The total CBCA score was computed from 31 effect sizes, whereas for the individual criteria the number of effect sizes ranged from 5 (criteria 10 and 19) to 35 (criteria 3 and 8).

Procedure

The procedure followed the stages of meta-analysis described by Botella and Gambara (2006). After the literature search and study selection, the studies were coded according to variables found to have a moderating role in previous studies (Fariña, Arce, & Real, 1994; Höfer et al., 1993; Raskin et al., 1991; Volbert & Steller, 2014; Vrij, 2005) and in a previous meta-analysis with a child population (Amado et al., 2015): the research paradigm (field vs. experimental studies), following the US law of precedent (Daubert v. Merrell Dow Pharmaceuticals, 1993); compliance with the Daubert standard publication criterion (DSPC), i.e., publication in peer-reviewed journals, a requirement for evidence to be admitted as scientifically valid; the lie coaching condition; and the version of the categorical system (full reality criteria vs. 14-criteria version). Applying a procedure of successive approximations to the coding of the primary studies (Fariña, Arce, & Novo, 2002) identified the following additional moderators: status of the declarant, i.e., testimony target (victim, offender, or witness); event target (self-experienced events vs. video-observed events/witness); and judicial context, i.e., case type.

Because some researchers had renamed the original criteria (Steller & Köhnken, 1989), a Thurstone-style evaluation was conducted in which 10 judges rated the degree of overlap between each original and reformulated criterion. When the interval between Q1 and Q3 fell within the region of criterion independence, the criterion was treated as additional; when it fell within the region of dependence on the original, it was treated as an original criterion.
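
The decision rule can be sketched as follows, assuming (hypothetically) that judges rate overlap on an 11-point scale where low values mean the reformulated criterion is independent of the original and high values mean it depends on it. The boundaries `INDEPENDENCE_MAX` and `DEPENDENCE_MIN` are illustrative placeholders, not values reported in the study, and intervals that straddle both regions are left undecided.

```python
from statistics import quantiles
from typing import List

# Hypothetical region boundaries on an assumed 1-11 overlap scale.
INDEPENDENCE_MAX = 4.0  # ratings <= this: reformulated criterion is independent
DEPENDENCE_MIN = 8.0    # ratings >= this: reformulated criterion depends on original


def classify_criterion(ratings: List[float]) -> str:
    """Classify a reformulated criterion from the judges' overlap ratings.

    The interquartile interval [Q1, Q3] must lie entirely inside one region.
    """
    q1, _median, q3 = quantiles(ratings, n=4)
    if q3 <= INDEPENDENCE_MAX:
        return "additional"
    if q1 >= DEPENDENCE_MIN:
        return "original"
    return "undecided"


# Ten hypothetical judges rating one renamed criterion.
print(classify_criterion([9, 10, 9, 11, 8, 10, 9, 10, 11, 9]))  # original
```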

The coding of the studies and moderators by two independent researchers showed total agreement (kappa = 1).
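
For reference, Cohen's kappa compares the observed agreement between two coders with the agreement expected by chance from each coder's marginal frequencies; a value of 1 indicates perfect agreement. The sketch below is a plain implementation of the standard formula, and the moderator codes in the example are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders assigning nominal codes to the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of items")
    n = len(coder_a)
    p_obs = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of the two coders' marginal proportions per code.
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if p_exp == 1.0:  # both coders used one identical code for every item
        return 1.0
    return (p_obs - p_exp) / (1 - p_exp)


codes = ["victim", "offender", "witness", "victim"]
print(cohens_kappa(codes, list(codes)))  # 1.0
```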

Data analysis

Effect sizes were taken directly from the primary studies when reported; otherwise, the effect size d was computed from the means and standard deviations/standard errors of the mean (Cohen's d when N1 = N2 and Glass's Δ when N1 ≠ N2), the t value, or the F value. When results were expressed as proportions, the effect size δ (Hedges & Olkin, 1985) was used as the equivalent of Cohen's d; when they were expressed in 2 × 2 contingency tables, the phi coefficient obtained was transformed into Cohen's d.
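
The conversions listed above can be sketched with the standard textbook formulas: pooled-SD Cohen's d, Glass's Δ (which group supplies the SD is an assumption left to the caller), d from an independent-samples t, d from a one-numerator-df F (its sign must be taken from the direction of the means), d from phi, and recovering an SD from a standard error. The proportion-based δ of Hedges and Olkin (1985) is not reproduced, and the function names are illustrative.

```python
import math


def sd_from_se(se: float, n: int) -> float:
    """Recover a standard deviation from the standard error of the mean."""
    return se * math.sqrt(n)


def d_from_means(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Cohen's d using the pooled standard deviation."""
    pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled


def glass_delta(m1: float, m2: float, sd_comparison: float) -> float:
    """Glass's delta: mean difference scaled by one group's SD only."""
    return (m1 - m2) / sd_comparison


def d_from_t(t: float, n1: int, n2: int) -> float:
    """d from an independent-samples t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def d_from_f(f: float, n1: int, n2: int, sign: int = 1) -> float:
    """d from F with one numerator df (t = sqrt(F)); sign comes from the means."""
    return sign * d_from_t(math.sqrt(f), n1, n2)


def d_from_phi(phi: float) -> float:
    """d from the phi coefficient of a 2 x 2 table: d = 2r / sqrt(1 - r^2)."""
    return 2 * phi / math.sqrt(1 - phi ** 2)


print(round(d_from_t(2.0, 20, 20), 3))  # 0.632
print(round(d_from_phi(0.2), 3))        # 0.408
```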

The meta-analysis followed the procedure of Hunter and Schmidt (2015): the unit of analysis (n) was the number of statements, effect sizes were weighted by sample size, i.e., the number of statements (dw), and effect sizes were corrected for criterion reliability (δ).
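
The two core steps can be sketched as the sample-size-weighted mean effect size and the disattenuation of d for criterion unreliability, δ = d / √r_yy. This is a simplified sketch assuming a single mean reliability applied to all effect sizes; it does not reproduce every step of Hunter and Schmidt's (2015) artifact-distribution procedure, so it need not match the tabulated values exactly. The study values in the example are hypothetical.

```python
import math
from typing import Sequence


def weighted_mean_d(ds: Sequence[float], ns: Sequence[int]) -> float:
    """Sample-size-weighted mean effect size (dw); weights = number of statements."""
    if len(ds) != len(ns) or not ds:
        raise ValueError("need one sample size per effect size")
    return sum(d * n for d, n in zip(ds, ns)) / sum(ns)


def correct_for_unreliability(d: float, r_yy: float) -> float:
    """Disattenuate d for criterion unreliability: delta = d / sqrt(r_yy)."""
    if not 0 < r_yy <= 1:
        raise ValueError("reliability must lie in (0, 1]")
    return d / math.sqrt(r_yy)


dw = weighted_mean_d([0.2, 0.6], [100, 300])           # two hypothetical studies
print(round(dw, 3))                                   # 0.5
print(round(correct_for_unreliability(dw, 0.64), 3))  # 0.625
```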

Differences between effect sizes were tested with Cohen's (1988) q statistic for the difference between correlations, after transforming the effect sizes into correlations. In the study of moderators, the average across criteria was computed for each moderator.
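
A minimal sketch of this comparison, assuming the common conversion r = d / √(d² + 4) (equal group sizes) followed by Fisher's z transformation, so that q is the difference between the two z values. With the δ values for all field studies (0.45) and for sexual offence and IPV field studies (0.87), it gives q ≈ .199, which agrees with the qc reported for that comparison in the Results.

```python
import math


def d_to_r(d: float) -> float:
    """Convert d to a point-biserial r, assuming equal group sizes."""
    return d / math.sqrt(d ** 2 + 4)


def cohens_q(d1: float, d2: float) -> float:
    """Cohen's q: difference between Fisher z-transformed correlations."""
    return math.atanh(d_to_r(d2)) - math.atanh(d_to_r(d1))


print(round(cohens_q(0.45, 0.87), 3))  # 0.199
```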

To estimate the practical utility of the meta-analytic results in forensic settings, three recommended statistics were used (Amado et al., 2015): U1, the Binomial Effect Size Display (BESD), and the Probability of Superiority (PS).
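
Under the usual assumption of two normal distributions with equal variances, the three statistics follow directly from d: Cohen's U1 = (2Φ(d/2) − 1) / Φ(d/2), PS = Φ(d/√2), and the BESD splits 50% ± r/2 with r = d / √(d² + 4). The sketch below implements these standard formulas; with δ = 0.96 (significant criteria in sexual offence and IPV field studies) it gives U1 ≈ .54, PS ≈ .75 and a BESD error rate of about 28%, in line with the values reported in the Discussion.

```python
import math
from statistics import NormalDist
from typing import Tuple

PHI = NormalDist().cdf  # standard normal cumulative distribution function


def cohens_u1(d: float) -> float:
    """Cohen's U1: proportion of non-overlap between the two distributions."""
    p = PHI(abs(d) / 2)
    return (2 * p - 1) / p


def probability_of_superiority(d: float) -> float:
    """P(a random true statement scores higher than a random fabricated one)."""
    return PHI(d / math.sqrt(2))


def besd(d: float) -> Tuple[float, float]:
    """Binomial Effect Size Display: (hit rate, error rate) = 0.5 +/- r/2."""
    r = d / math.sqrt(d ** 2 + 4)
    return 0.5 + r / 2, 0.5 - r / 2


delta = 0.96
print(round(cohens_u1(delta), 2))                   # 0.54
print(round(probability_of_superiority(delta), 2))  # 0.75
print(round(besd(delta)[1], 2))                     # 0.28
```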

Criterion reliability

Not all primary studies provided data on inter-rater reliability or agreement for the reality criteria and the total CBCA score. Moreover, the reported reliability coefficients varied among studies, and when several were reported, the coefficient closest to those obtained by Anson, Golding, and Gully (1993) and Horowitz et al. (1997) was used. Because coding reliability data were lacking for specific criteria, average reliability was estimated separately for the criteria and for the total CBCA score, bearing in mind that the reliability of individual criteria differs from that of the instrument (Horowitz et al., 1997). Reliability was estimated from reliability coefficients only, since agreement indexes do not measure reliability. On the basis of 172 reliability coefficients of CBCA criteria in the primary studies, the average reliability of the CBCA reality criteria was r = .61 (SEM = .020, 95% CI = 0.57, 0.65), and the Spearman-Brown prediction formula yielded r = .97 for the total CBCA score. The average reliability of the proposed additional reality criteria, calculated from 7 reliability coefficients, was r = .74 (SEM = 0.041, 95% CI = 0.66, 0.82). Such low average reliability has sometimes been considered a methodological weakness of the system; nevertheless, Hunter and Schmidt's (2015) meta-analytic procedure corrects for criterion unreliability.
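
The Spearman-Brown prediction formula projects the reliability of a composite of k parallel components from their average reliability: r_kk = k·r / (1 + (k − 1)·r). Taking k = 19 criteria (an assumption, since the text does not state the k used) and r = .61 reproduces the reported r = .97 for the total CBCA score.

```python
def spearman_brown(r_item: float, k: float) -> float:
    """Predicted reliability of a composite of k parallel components."""
    if not 0 < r_item <= 1 or k <= 0:
        raise ValueError("need a reliability in (0, 1] and a positive k")
    return k * r_item / (1 + (k - 1) * r_item)


# Average criterion reliability r = .61; 19 CBCA criteria assumed as components.
print(round(spearman_brown(0.61, 19), 2))  # 0.97
```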

Results¹

Study of outliers

An initial analysis and control of outliers was carried out for each reality criterion, the total CBCA score, and each condition. The criterion chosen was ±3 × IQR (extreme cases) of the sample-size-weighted mean effect size, because more conservative criteria such as ±1.5 × IQR or ±2 SD eliminated more than 10% of the effect sizes, indicating that these were more probably moderators than outliers (Tukey, 1960).
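
The sketch below shows the conventional Tukey fences, Q1 − k·IQR and Q3 + k·IQR, with k = 3 for extreme cases and k = 1.5 for the more conservative rule, together with the share of effect sizes each rule would remove. Anchoring the fences on the quartiles is an assumption: the text describes the band relative to the weighted mean effect size, so the exact computation may differ. The effect sizes in the example are hypothetical.

```python
from statistics import quantiles
from typing import List, Tuple


def tukey_fences(values: List[float], k: float = 3.0) -> Tuple[float, float]:
    """Return the (lower, upper) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _median, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(values: List[float], k: float = 3.0) -> List[float]:
    """Effect sizes lying outside the fences."""
    lo, hi = tukey_fences(values, k)
    return [v for v in values if v < lo or v > hi]


def share_flagged(values: List[float], k: float) -> float:
    """Proportion of effect sizes a given rule would remove (cf. the 10% check)."""
    return len(flag_outliers(values, k)) / len(values)


effects = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 5.0]  # hypothetical d values
print(flag_outliers(effects))       # [5.0]
print(share_flagged(effects, 1.5))  # 0.125
```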

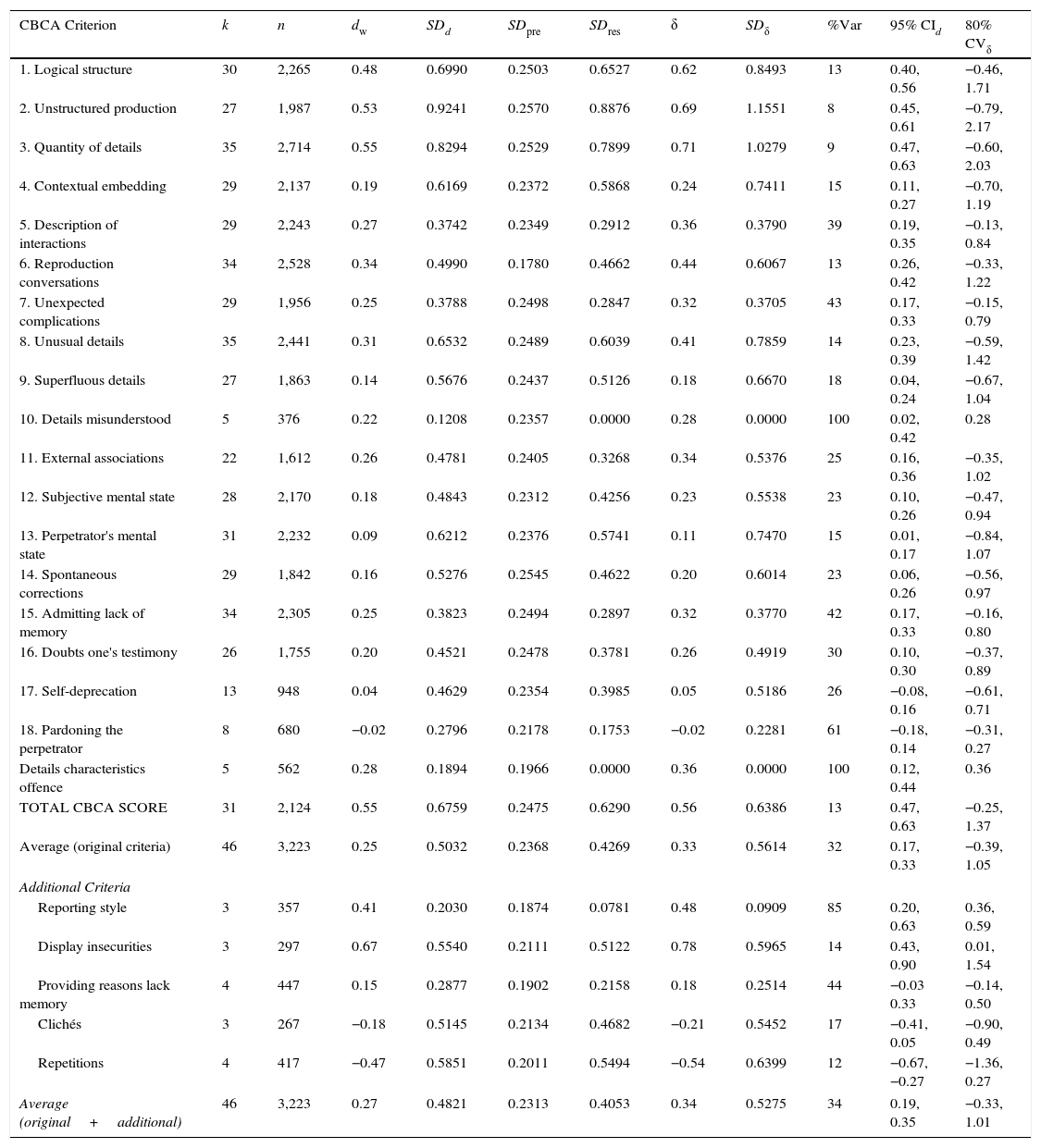

Global meta-analysis of reality criteria in adults

The results (Table 2) show a positive (criterion presence was associated with statement reality) and significant (the confidence interval did not include zero) mean true effect size (δ) for the CBCA reality criteria, with the exception of the ‘self-deprecation’ and ‘pardoning the perpetrator’ criteria, and for the total CBCA score. Nevertheless, these results are not generalizable to future samples (the credibility interval included zero, indicating that the effect size would not generalize to 80% of other samples); for criteria 10 and 19, which were affected by second-order sampling error, this estimate is invalid. For the additional criteria (Höfer et al., 1993), the meta-analysis revealed a positive, significant, and generalizable mean true effect size for the ‘reporting style’ and ‘display of insecurities’ criteria. The mean true effect size for ‘repetitions’ was negative and significant, confirming that it is not a reality criterion, but it was not generalizable. For the ‘providing reasons for lack of memory’ and ‘clichés’ criteria, the mean true effect size was non-significant. Because repetitions and clichés were related to fabricated events, i.e., they are not reality criteria, they were excluded from subsequent analyses. The 75% rule and the credibility interval (Hunter & Schmidt, 2015) warranted the study of moderators.

Table 2. Results of the global meta-analysis for individual reality criteria, the total CBCA score, and additional criteria.

| CBCA criterion | k | n | dw | SDd | SDpre | SDres | δ | SDδ | %Var | 95% CId | 80% CVδ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Logical structure | 30 | 2,265 | 0.48 | 0.6990 | 0.2503 | 0.6527 | 0.62 | 0.8493 | 13 | 0.40, 0.56 | −0.46, 1.71 |
| 2. Unstructured production | 27 | 1,987 | 0.53 | 0.9241 | 0.2570 | 0.8876 | 0.69 | 1.1551 | 8 | 0.45, 0.61 | −0.79, 2.17 |
| 3. Quantity of details | 35 | 2,714 | 0.55 | 0.8294 | 0.2529 | 0.7899 | 0.71 | 1.0279 | 9 | 0.47, 0.63 | −0.60, 2.03 |
| 4. Contextual embedding | 29 | 2,137 | 0.19 | 0.6169 | 0.2372 | 0.5868 | 0.24 | 0.7411 | 15 | 0.11, 0.27 | −0.70, 1.19 |
| 5. Description of interactions | 29 | 2,243 | 0.27 | 0.3742 | 0.2349 | 0.2912 | 0.36 | 0.3790 | 39 | 0.19, 0.35 | −0.13, 0.84 |
| 6. Reproduction of conversations | 34 | 2,528 | 0.34 | 0.4990 | 0.1780 | 0.4662 | 0.44 | 0.6067 | 13 | 0.26, 0.42 | −0.33, 1.22 |
| 7. Unexpected complications | 29 | 1,956 | 0.25 | 0.3788 | 0.2498 | 0.2847 | 0.32 | 0.3705 | 43 | 0.17, 0.33 | −0.15, 0.79 |
| 8. Unusual details | 35 | 2,441 | 0.31 | 0.6532 | 0.2489 | 0.6039 | 0.41 | 0.7859 | 14 | 0.23, 0.39 | −0.59, 1.42 |
| 9. Superfluous details | 27 | 1,863 | 0.14 | 0.5676 | 0.2437 | 0.5126 | 0.18 | 0.6670 | 18 | 0.04, 0.24 | −0.67, 1.04 |
| 10. Details misunderstood | 5 | 376 | 0.22 | 0.1208 | 0.2357 | 0.0000 | 0.28 | 0.0000 | 100 | 0.02, 0.42 | 0.28 |
| 11. External associations | 22 | 1,612 | 0.26 | 0.4781 | 0.2405 | 0.3268 | 0.34 | 0.5376 | 25 | 0.16, 0.36 | −0.35, 1.02 |
| 12. Subjective mental state | 28 | 2,170 | 0.18 | 0.4843 | 0.2312 | 0.4256 | 0.23 | 0.5538 | 23 | 0.10, 0.26 | −0.47, 0.94 |
| 13. Perpetrator's mental state | 31 | 2,232 | 0.09 | 0.6212 | 0.2376 | 0.5741 | 0.11 | 0.7470 | 15 | 0.01, 0.17 | −0.84, 1.07 |
| 14. Spontaneous corrections | 29 | 1,842 | 0.16 | 0.5276 | 0.2545 | 0.4622 | 0.20 | 0.6014 | 23 | 0.06, 0.26 | −0.56, 0.97 |
| 15. Admitting lack of memory | 34 | 2,305 | 0.25 | 0.3823 | 0.2494 | 0.2897 | 0.32 | 0.3770 | 42 | 0.17, 0.33 | −0.16, 0.80 |
| 16. Raising doubts about one's testimony | 26 | 1,755 | 0.20 | 0.4521 | 0.2478 | 0.3781 | 0.26 | 0.4919 | 30 | 0.10, 0.30 | −0.37, 0.89 |
| 17. Self-deprecation | 13 | 948 | 0.04 | 0.4629 | 0.2354 | 0.3985 | 0.05 | 0.5186 | 26 | −0.08, 0.16 | −0.61, 0.71 |
| 18. Pardoning the perpetrator | 8 | 680 | −0.02 | 0.2796 | 0.2178 | 0.1753 | −0.02 | 0.2281 | 61 | −0.18, 0.14 | −0.31, 0.27 |
| 19. Details characteristic of the offence | 5 | 562 | 0.28 | 0.1894 | 0.1966 | 0.0000 | 0.36 | 0.0000 | 100 | 0.12, 0.44 | 0.36 |
| TOTAL CBCA SCORE | 31 | 2,124 | 0.55 | 0.6759 | 0.2475 | 0.6290 | 0.56 | 0.6386 | 13 | 0.47, 0.63 | −0.25, 1.37 |
| Average (original criteria) | 46 | 3,223 | 0.25 | 0.5032 | 0.2368 | 0.4269 | 0.33 | 0.5614 | 32 | 0.17, 0.33 | −0.39, 1.05 |
| Additional criteria | | | | | | | | | | | |
| Reporting style | 3 | 357 | 0.41 | 0.2030 | 0.1874 | 0.0781 | 0.48 | 0.0909 | 85 | 0.20, 0.63 | 0.36, 0.59 |
| Display of insecurities | 3 | 297 | 0.67 | 0.5540 | 0.2111 | 0.5122 | 0.78 | 0.5965 | 14 | 0.43, 0.90 | 0.01, 1.54 |
| Providing reasons for lack of memory | 4 | 447 | 0.15 | 0.2877 | 0.1902 | 0.2158 | 0.18 | 0.2514 | 44 | −0.03, 0.33 | −0.14, 0.50 |
| Clichés | 3 | 267 | −0.18 | 0.5145 | 0.2134 | 0.4682 | −0.21 | 0.5452 | 17 | −0.41, 0.05 | −0.90, 0.49 |
| Repetitions | 4 | 417 | −0.47 | 0.5851 | 0.2011 | 0.5494 | −0.54 | 0.6399 | 12 | −0.67, −0.27 | −1.36, 0.27 |
| Average (original + additional) | 46 | 3,223 | 0.27 | 0.4821 | 0.2313 | 0.4053 | 0.34 | 0.5275 | 34 | 0.19, 0.35 | −0.33, 1.01 |

Note. k = number of studies; n = total sample size (number of statements); dw = effect size weighted for sample size; SDd = observed standard deviation of d; SDpre = standard deviation of d predicted from all artifacts; SDres = standard deviation of observed d-values after removal of variance due to all artifacts; δ = effect size corrected for criterion reliability; SDδ = standard deviation of δ; %Var = percentage of variance accounted for by artifactual errors; 95% CId = 95% confidence interval for d; 80% CVδ = 80% credibility interval for δ.
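
The quantities defined in the note can be related with a short sketch: SDres = √(SDd² − SDpre²), %Var = 100·SDpre²/SDd² (capped at 100), and the 80% credibility interval δ ± 1.28·SDδ. The `moderators_warranted` check, which combines the 75% rule with a credibility interval that includes zero, is a simplified reading of the text rather than the study's exact decision procedure. Applied to row 1 of Table 2 it yields values close to the tabulated SDres = 0.6527, %Var = 13 and 80% CVδ = −0.46, 1.71; small differences reflect rounding of the tabulated inputs.

```python
import math
from statistics import NormalDist
from typing import Tuple


def residual_sd(sd_obs: float, sd_pred: float) -> float:
    """SD of d after removing artifactual variance (floored at zero)."""
    return math.sqrt(max(sd_obs ** 2 - sd_pred ** 2, 0.0))


def percent_artifact_variance(sd_obs: float, sd_pred: float) -> float:
    """%Var: share of observed variance explained by artifacts (capped at 100)."""
    return min(100.0, 100.0 * sd_pred ** 2 / sd_obs ** 2)


def credibility_interval(delta: float, sd_delta: float, level: float = 0.80) -> Tuple[float, float]:
    """Credibility interval delta +/- z * SD_delta (z ~ 1.28 for 80%)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return delta - z * sd_delta, delta + z * sd_delta


def moderators_warranted(pct_var: float, cv: Tuple[float, float]) -> bool:
    """Simplified rule: artifacts explain < 75% of variance, or the CV spans zero."""
    return pct_var < 75.0 or cv[0] <= 0.0 <= cv[1]


# Row "1. Logical structure" of Table 2.
sd_res = residual_sd(0.6990, 0.2503)
pct = percent_artifact_variance(0.6990, 0.2503)
cv = credibility_interval(0.62, 0.8493)
print(round(sd_res, 3), round(pct), [round(x, 2) for x in cv])
print(moderators_warranted(pct, cv))
```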

The study of moderators (criteria average as the dependent variable; Table 3) showed a positive and significant, but not generalizable, mean true effect size for all moderators analysed. In terms of magnitude, all effect sizes were small (0.20 < δ < 0.50) except for the witness condition, which had a medium effect size (δ > 0.50). Arce and Fariña (2009) have proposed (and designed) categorical systems built by bottom-up rather than top-down procedures, so that only categories that effectively discriminate between memories of experienced events and fabricated memories form part of the system. This maximizes the efficacy of the resulting categorical system by eliminating the noise produced by non-discriminating top-down categories. Accordingly, the meta-analyses were repeated using only the content-analysis categories with a significant effect size, i.e., those whose confidence interval for d did not contain zero. The results (Table 3) revealed a significant increase in the effect size for field studies, qc = .119, p < .05 (one-tailed, since a larger effect size was expected with significant criteria); that is, the effect size was significantly larger with significant criteria. Moreover, with significant criteria the results, which were not generalizable for all reality criteria, became generalizable (the credibility interval did not include zero). For the experimental studies and the remaining moderators, the results did not corroborate the Hypothesis, as the reality categories had been initially or subsequently screened to eliminate non-significant ones.
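
The bottom-up screening step can be sketched as a filter on the 95% confidence intervals for d in Table 2, keeping only criteria whose lower limit is above zero (a positive, significant effect; this also excludes negative criteria such as repetitions). Applied to the 19 original criteria it retains 17, matching the "CBCA significant criteria (17)" row of Table 3. The dictionary is an illustrative data structure, with values copied from Table 2.

```python
from typing import Dict, List, Tuple

# 95% CI for d of the 19 original CBCA criteria, copied from Table 2.
CI_D: Dict[str, Tuple[float, float]] = {
    "Logical structure": (0.40, 0.56),
    "Unstructured production": (0.45, 0.61),
    "Quantity of details": (0.47, 0.63),
    "Contextual embedding": (0.11, 0.27),
    "Description of interactions": (0.19, 0.35),
    "Reproduction of conversations": (0.26, 0.42),
    "Unexpected complications": (0.17, 0.33),
    "Unusual details": (0.23, 0.39),
    "Superfluous details": (0.04, 0.24),
    "Details misunderstood": (0.02, 0.42),
    "External associations": (0.16, 0.36),
    "Subjective mental state": (0.10, 0.26),
    "Perpetrator's mental state": (0.01, 0.17),
    "Spontaneous corrections": (0.06, 0.26),
    "Admitting lack of memory": (0.17, 0.33),
    "Raising doubts about one's testimony": (0.10, 0.30),
    "Self-deprecation": (-0.08, 0.16),
    "Pardoning the perpetrator": (-0.18, 0.14),
    "Details characteristic of the offence": (0.12, 0.44),
}


def significant_criteria(ci: Dict[str, Tuple[float, float]]) -> List[str]:
    """Keep criteria with a positive, significant effect (CI lower limit > 0)."""
    return [name for name, (low, _high) in ci.items() if low > 0]


kept = significant_criteria(CI_D)
print(len(kept))                      # 17
print(sorted(set(CI_D) - set(kept)))  # ['Pardoning the perpetrator', 'Self-deprecation']
```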

Table 3. Results of the meta-analysis of moderators.

| Moderator | k | n | dw | SDd | SDpre | SDres | δ | SDδ | %Var | 95% CId | 80% CVδ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CBCA significant criteria (17) | 46 | 3,223 | 0.27 | 0.5187 | 0.2380 | 0.4433 | 0.36 | 0.5835 | 31 | 0.19, 0.35 | −0.39, 1.11 |
| 14-criteria version | 45 | 3,143 | 0.28 | 0.5567 | 0.2394 | 0.4906 | 0.36 | 0.6465 | 25 | 0.22, 0.34 | −0.47, 1.19 |
| Daubert standard publication criterion | | | | | | | | | | | |
| All criteria (22) | 35 | 2,256 | 0.20 | 0.4575 | 0.2407 | 0.3733 | 0.26 | 0.4786 | 39 | 0.12, 0.28 | −0.35, 0.87 |
| Self-experienced events | | | | | | | | | | | |
| All criteria (22) | 34 | 2,277 | 0.26 | 0.4647 | 0.2371 | 0.3879 | 0.33 | 0.5022 | 40 | 0.18, 0.34 | −0.31, 0.97 |
| Non self-experienced events (witness) | | | | | | | | | | | |
| All criteria (13) | 11 | 625 | 0.39 | 0.5835 | 0.2707 | 0.5032 | 0.51 | 0.6548 | 65 | 0.23, 0.55 | −0.33, 1.35 |
| Offenders | | | | | | | | | | | |
| All criteria (21) | 11 | 1,067 | 0.27 | 0.4662 | 0.2024 | 0.3743 | 0.35 | 0.4975 | 41 | 0.15, 0.39 | −0.29, 0.99 |
| Victims | | | | | | | | | | | |
| All criteria (18) | 11 | 840 | 0.27 | 0.4781 | 0.2355 | 0.4012 | 0.35 | 0.5221 | 35 | 0.13, 0.41 | −0.32, 1.02 |
| Field studies | | | | | | | | | | | |
| All field studies (18) | 6 | 422 | 0.34 | 0.4948 | 0.2385 | 0.4153 | 0.45 | 0.5404 | 35 | 0.14, 0.54 | −0.24, 1.14 |
| Significant criteria (10)a | 6 | 422 | 0.53 | 0.4774 | 0.2458 | 0.3834 | 0.69 | 0.4989 | 42 | 0.33, 0.73 | 0.05, 1.33 |
| Sexual and IPV field studies | | | | | | | | | | | |
| All criteria (17)b | 5 | 263 | 0.67 | 0.3587 | 0.2871 | 0.1957 | 0.87 | 0.2459 | 72 | 0.41, 0.92 | 0.55, 1.18 |
| Significant criteria (15)c | 5 | 263 | 0.74 | 0.3654 | 0.2892 | 0.2134 | 0.96 | 0.2478 | 72 | 0.48, 0.99 | 0.64, 1.28 |
| Experimental studies | | | | | | | | | | | |
| All criteria (22) | 39 | 2,721 | 0.25 | 0.4497 | 0.2336 | 0.3934 | 0.32 | 0.4933 | 37 | 0.17, 0.33 | −0.31, 0.95 |

Note.

The meta-analytic technique takes into account neither the theoretical foundations of the studies nor whether they are faithful to the original theory; that is, all studies on reality categories are included. Moreover, the experimental studies on witnesses do not actually involve witnesses of self-experienced events but of non-self-experienced events, i.e., video-observed events (watched on video rather than experienced), which does not fit the original theory, which hypothesizes that reality criteria discriminate between memories of self-experienced real-life events and fabricated or fictitious memories. Only one study on witnesses involved self-experienced events (Gödert, Gamer, Rill, & Vossel, 2005); it found that the total reality criteria discriminated significantly between real witnesses and real offenders giving false testimony, d = 0.59, 1 − β = .78, and between real witnesses and uninvolved participants, d = 0.83, 1 − β = .96. Nevertheless, reality criteria also discriminated between memories of video-observed events and fabricated events. The only study comparing memories of self-experienced events (truth condition) with video-observed events (lie condition; Lee, Klaver, & Hart, 2008) found that the CBCA reality criteria and the total CBCA score discriminated significantly between the two types of memory, in line with the Undeutsch Hypothesis.

The high variability observed in effect sizes in field studies, mostly attributable to a single study, suggested differences in design (the crime context in that study differed from those of the other studies). Since context has been hypothesized (Köhnken, 1996; Volbert & Steller, 2014) and found (Arce, Fariña, & Vilariño, 2010; Vilariño, Novo, & Seijo, 2011) to moderate the discriminating efficacy of reality categories, the meta-analysis was repeated for field studies on sexual offences and intimate partner violence (IPV) cases (crimes committed in the privacy of the home, according to the categorization of Arce & Fariña, 2005). The results showed a positive, significant, and generalizable mean true effect size under this condition (whereas it was not generalizable across all field studies). Moreover, effect sizes were significantly larger in sexual offence and IPV cases than in all field studies, both for all reality criteria (0.45 for all field studies vs. 0.87 for sexual offence and IPV cases), qc = .199, p < .01 (one-tailed, since a larger effect size was expected in specific criminal contexts), and for the significant criteria, qc = .168, p < .05 (0.69 vs. 0.96). Likewise, reality criteria were significantly more efficacious in sexual offence and IPV cases than in all other types of cases, qc = .2622, p < .01 (0.32 vs. 0.87).

Results for the comparison between statements of participants instructed to lie (lie coaching condition) and truthful statements were inconclusive² regarding the effectiveness of reality criteria in discriminating between truthful and false statements (a meta-analysis could not be performed because the numbers of studies and statements were insufficient and the research designs were not comparable).

Discussion

The following conclusions may be drawn from the results of this study. First, the results confirmed the Undeutsch Hypothesis, that is, reality criteria discriminated between memories of self-experienced and fabricated events [File Drawer Analysis (FDA; Hunter & Schmidt, 2015): reducing the average effect of the CBCA criteria to a trivial effect (.05; McNatt, 2000) would require 184 studies with null effects, which is unlikely]. Besides fulfilling the DSPC, the Hypothesis was also valid for memories of victims/claimants and of offenders (further research is required for witnesses of self-experienced events), and it was robust in both experimental studies (high internal validity) and field studies (high external validity). Notwithstanding, reality criteria also discriminated between memories of video-observed, i.e., non-self-experienced, events and fabricated events, for which the Hypothesis was not formulated, and research findings are inconclusive as to the validity of the Hypothesis with lie-coached participants. Second, although the results validated CBCA as a categorical system based on the Undeutsch Hypothesis, not all criteria were validated, the results were not generalizable, and some criteria even contradicted the Hypothesis. Thus, these criteria can be used neither in all types of context nor indiscriminately. Both versions of CBCA (all criteria or 14 criteria) were equally effective (δ = 0.36) in discriminating between memories of self-experienced and fabricated events. Although the results open the door to the inclusion of new reality criteria, some of the additional criteria proposed fail to fulfil the Undeutsch Hypothesis (significant negative effect sizes, i.e., they are not reality criteria), so they cannot be included in CBCA.
Third, in field studies the discriminating power of reality criteria was significantly higher in sexual offence and IPV cases (FDA: 62 and 69 studies with null effects, for all criteria and significant criteria respectively, would be needed to reduce these results to a trivial effect, which is unlikely) than in other types of context (FDA: 35 studies with null effects would be needed to reduce to a trivial effect the efficacy of reality criteria in discriminating between real and fabricated memories across all field-study contexts, which is unlikely). In brief, the distributions of the two populations did not overlap in 54% of their area (U1 = 0.54); that is, in sexual offence and IPV cases, reality criteria discriminated completely between memories of self-experienced and fabricated events in 54% of credibility evaluations. Moreover, 75% of statements of self-experienced events contained more reality criteria than statements of fabricated events (probability of superiority, PS = 0.75), and the probability of false positives was 28% (BESD). These results were highly robust: they not only established a positive and significant relation between reality criteria and true statements, but were also generalizable to all types of sexual offence and IPV cases, and homogeneous (i.e., subject to little variability, with artifactual errors accounting for 72% of the variance in effect sizes; Table 3).
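
The file drawer figures can be reproduced with Hunter and Schmidt's formula for the number of unlocated null-result studies needed to bring the mean effect size down to a critical value, k₀ = k (d̄ / d_c − 1), with d_c = .05. Using the sample-size-weighted d (dw) and k from Tables 2 and 3 gives 184, 62, 69, and 35; that dw rather than δ was the input is an inference from these matches, not something the text states.

```python
def fail_safe_k(k: int, mean_d: float, critical_d: float = 0.05) -> float:
    """Number of null-effect studies needed to pull mean_d down to critical_d."""
    if critical_d <= 0:
        raise ValueError("critical effect size must be positive")
    return k * (mean_d / critical_d - 1)


# (label, k, dw) taken from Tables 2 and 3.
for label, k, dw in [
    ("Average of CBCA criteria", 46, 0.25),
    ("Sexual/IPV field studies, all criteria", 5, 0.67),
    ("Sexual/IPV field studies, significant criteria", 5, 0.74),
    ("All field studies", 6, 0.34),
]:
    print(label, round(fail_safe_k(k, dw)))  # 184, 62, 69, 35
```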

As for the implications for forensic practice, the results of the present meta-analysis reveal that reality criteria were statistically effective in discriminating between memories of self-experienced and fabricated events, but this does not imply that they are directly generalizable to forensic practice. Even under the best discriminating conditions, i.e., field studies in sexual offence and IPV cases, the probability of false positives may reach .22, whereas in forensic settings this probability must be zero (Arce, Fariña, & Fraga, 2000). In general, only significant reality criteria, i.e., scientifically attested evidence, were admissible for forensic practice (see note to Table 3), since their results were generalizable, whereas the results for all criteria were not. However, as the lower limit of the credibility interval was 0.05, the practical utility of these categories was almost negligible (PS = .51); that is, only 51% of true statements contained more reality criteria than false statements, and the specific conditions under which this occurred remain unknown. In contrast, the lower limit of the credibility interval for reality criteria applied to sexual offence and IPV cases, which were generalizable both for all criteria and for significant criteria, was larger: PS = .73 and .75 (Hedges and Olkin's δ = 0.59 and 0.65, test value = .51) for all reality criteria and for significant criteria, respectively. Nevertheless, these conclusions are not directly applicable to forensic practice, where the decision criterion must be the ‘strict decision criterion’, under which a type II error (classifying a false statement as true) is not admissible, i.e., must equal zero. Regarding the strict decision criterion, Arce et al. (2010) found up to 13 CBCA reality criteria in fabricated statements in IPV cases, which means that at least 14 reality criteria would have to be detected in a statement to conclude that the testimony was true, yielding a correct classification of true positives (true statements classified as such) of 36%. In brief, CBCA reality criteria were a poor tool for assessing the credibility of IPV victims' testimony. Thus, to enhance efficacy, CBCA reality criteria must be complemented with additional criteria. Along these lines, Arce and Fariña (2009), Vilariño (2010), and Vilariño et al. (2011) combined CBCA and SRA criteria, memory attributes, and additional reality criteria specific to IPV cases derived from real statements (with judicial judgements as ground truth) to create and validate a categorical system specific to IPV cases, including sexual offences, with a strict decision criterion that reduced the rate of false negatives to 2%. In any case, only results obtained with a strict decision criterion can be translated into forensic practice.
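
The strict decision criterion can be sketched as placing the cut-off one above the highest count of reality criteria observed in fabricated statements, so that no fabricated statement is classified as true, and then reporting the share of true statements that reach it. The helper names and the scores below are hypothetical and are not the data of Arce et al. (2010).

```python
from typing import Sequence, Tuple


def strict_cutoff(fabricated_scores: Sequence[int]) -> int:
    """Smallest score that no fabricated statement reaches (zero false positives)."""
    return max(fabricated_scores) + 1


def true_positive_rate(true_scores: Sequence[int], cutoff: int) -> float:
    """Share of true statements classified as true under the cut-off."""
    return sum(s >= cutoff for s in true_scores) / len(true_scores)


def apply_strict_criterion(true_scores: Sequence[int],
                           fabricated_scores: Sequence[int]) -> Tuple[int, float]:
    """Return the strict cut-off and the resulting true-positive rate."""
    cutoff = strict_cutoff(fabricated_scores)
    return cutoff, true_positive_rate(true_scores, cutoff)


# Hypothetical counts of reality criteria per statement.
fabricated = [5, 8, 13, 9, 11]
genuine = [10, 14, 16, 12, 15, 13, 9, 17, 11, 12]
print(apply_strict_criterion(genuine, fabricated))  # (14, 0.4)
```

The cost of a zero false-positive rate shows up directly in the true-positive rate: every true statement scoring at or below the fabricated maximum is left unclassified as true.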

Regarding future research, the results of the present meta-analysis underscore the need for further experimental studies assessing the efficacy of reality criteria in discriminating between memories of self-experienced events and of video-witnessed, non-self-experienced events; between memories of self-experienced witnessed events and fabricated events; and between memories of participants coached to lie and of honest participants; as well as for research aimed at identifying new reality categories bottom-up, mainly for specific contexts such as crime victimization.

This meta-analysis is subject to the following limitations. First, previous publications biased the results in that non-significant results or categories predicted to be ineffective were eliminated (favouring validation of the Undeutsch Hypothesis). Second, the feigning methodology (experimental studies) has no proven external validity (Sarwar, Allwood, & Innes-Ker, 2014), only ‘face validity’ (Konecni & Ebbesen, 1992). Third, in some of the experimental literature, statements provide insufficient material for reality content analysis (Köhnken, 2004), which favours rejection of the Undeutsch Hypothesis. Fourth, there was no control over the effects of the interviewer on the content of the statement or over the reliability of the interviews, which were often carried out by poorly trained interviewers. Fifth, few studies complied with SVA standards, which are a requirement for applying CBCA. Sixth, some meta-analytic results may be subject to a degree of variability, since Ns < 400 do not guarantee stable sample estimates (Hunter & Schmidt, 2015). Seventh, not all primary studies estimated the reliability of the codings, so the reliability of the results is uncertain.

Funding

This research was funded by a grant from the Spanish Ministry of Economy and Competitiveness (PSI2014-53085-R).

Indicates the primary studies included in the meta-analysis.

¹ Additional results and resources at http://www.researchgate.net/profile/Ramon_Arce

² Conclusions in the primary studies about non-significant results are inconclusive, because statistical power (1 − β < .80) is insufficient to support them (d = −0.44, 1 − β = .41, Bogaard, Meijer, & Vrij, 2013; d = 0.37, 1 − β = .26, Vrij, Akehurst, Soukara, & Bull, 2002; d = 0.11, 1 − β = .06, Vrij, Kneller, & Mann, 2000).